Chapter 3 Test Fairness

3.1 Introduction

The 2014 Standards for Educational and Psychological Testing (AERA, APA, & NCME, 2014, p. 49) indicate that fairness to all individuals in the intended population is an overriding and fundamental validity concern. “The central idea of fairness in testing is to identify and remove construct-irrelevant barriers to maximal performance for any examinee” (AERA, APA, & NCME, 2014, p. 63).

The term fairness has no single technical meaning and is used in many ways in public discourse. Issues that influence test fairness include, but are not limited to:

- the definition of the construct (the knowledge, skills, and abilities) that is intended to be measured;

- the development of items and tasks that are explicitly designed to assess the construct that is the target of measurement;

- the delivery of items and tasks in a manner that enables students to demonstrate their achievement of the construct; and

- how student responses to items and tasks are captured and scored.

Although this chapter addresses these issues, the reader may be directed to other or additional resources that provide evidence of test fairness. Most elements of the Smarter Balanced approach to test fairness apply to the entire assessment system, including both the summative and interim assessments.

The interim assessments use the same content specifications as the summative assessments. The knowledge, skills, and abilities assessed by Smarter Balanced tests and their relationship to the Common Core State Standards (CCSS) are described in the Content Specifications for the summative assessment (see the Content Explorer). The major constructs within ELA/literacy and mathematics for which evidence of student achievement is gathered are called ‘claims’, and they form the basis for reporting student performance.

Students have access to the same accessibility resources on the interim assessments that are available on the summative assessments. These resources include universal tools, designated supports, and accommodations, and they are described in the Smarter Balanced Bias and Sensitivity Guidelines as well as in this chapter. Importantly, the inferences drawn from test results should in no way be influenced by the use or nonuse of accessibility resources. In contrast, the term “modifications” typically refers to departures from standard conditions that may affect the meaning of test scores; modifications should therefore be reported along with test results.

3.1.1 Attention to Bias and Sensitivity in Test Development

According to the Standards, bias is “construct underrepresentation or construct-irrelevant components of test scores that differentially affect the performance of different groups of test takers and consequently the reliability/precision and validity of interpretations and uses of their test scores” (AERA, APA, & NCME, 2014, p. 216). “Sensitivity” refers to an awareness of the need to avoid explicit bias in assessment. In common usage, reviews of tests for bias and sensitivity help ensure that test items and stimuli are fair for various groups of test takers (AERA, APA, & NCME, 2014, p. 64).

The goal of fairness in assessment is to ensure that test materials are as free as possible from unnecessary barriers to the success of diverse groups of students. Smarter Balanced developed the Bias and Sensitivity Guidelines to help ensure that the assessments are fair for all groups of test takers, regardless of differences in characteristics including, but not limited to, disability status, ethnic group, gender, regional background, native language, race, religion, sexual orientation, and socioeconomic status. Unnecessary barriers can be reduced by following a few fundamental rules:

- measuring only knowledge or skills that are relevant to the intended construct;

- not angering, offending, upsetting, or otherwise distracting test takers; and

- treating all groups of people with appropriate respect in test materials.

These rules help ensure that the test content is fair for test takers and acceptable to the many stakeholders and constituent groups within Smarter Balanced member organizations. Fairness must be considered in all phases of test development and use. Smarter Balanced relied heavily on the Bias and Sensitivity Guidelines throughout the development and design of its assessments, including item writer training, item writing, and item review. Smarter Balanced’s attention to bias and sensitivity complies with Chapter 3 of the Standards, which states: “Test developers are responsible for developing tests that measure the intended construct and for minimizing the potential for tests being affected by construct-irrelevant characteristics such as linguistic, communicative, cognitive, cultural, physical, or other characteristics” (AERA, APA, & NCME, 2014, p. 64).

For the 2021-22 administration specifically, Smarter Balanced reviewed interim assessment items to determine whether any items or stimuli should be removed because of emergent sensitivity concerns related to the pandemic and personal safety. External reviewers assisted with the review in some cases. In total, eleven interim assessments were updated to remove or replace content deemed potentially sensitive.

3.2 The Smarter Balanced Accessibility and Accommodations Framework

Smarter Balanced has built a framework of accessibility for all students, including, but not limited to, English Learners (ELs), students with disabilities, and ELs with disabilities. Three resources guide the development of the assessments, items, and tasks to ensure that they accurately measure the targeted constructs: the Smarter Balanced Item and Task Specifications Bibliography (Smarter Balanced, 2016b), the Smarter Balanced Accessibility and Accommodations Framework (Smarter Balanced, 2016a), and the Smarter Balanced Bias and Sensitivity Guidelines. Recognizing the diverse characteristics and needs of students who participate in the Smarter Balanced assessments, the states worked together through the Smarter Balanced test administration and student access workgroup to develop the Accessibility and Accommodations Framework (Smarter Balanced, 2016a), which guided the Consortium as it worked to reach agreement on the specific universal tools, designated supports, and accommodations available for the assessments. This work also incorporated research and practical lessons learned about universal design, accessibility tools, and accommodations (Thompson et al., 2002).

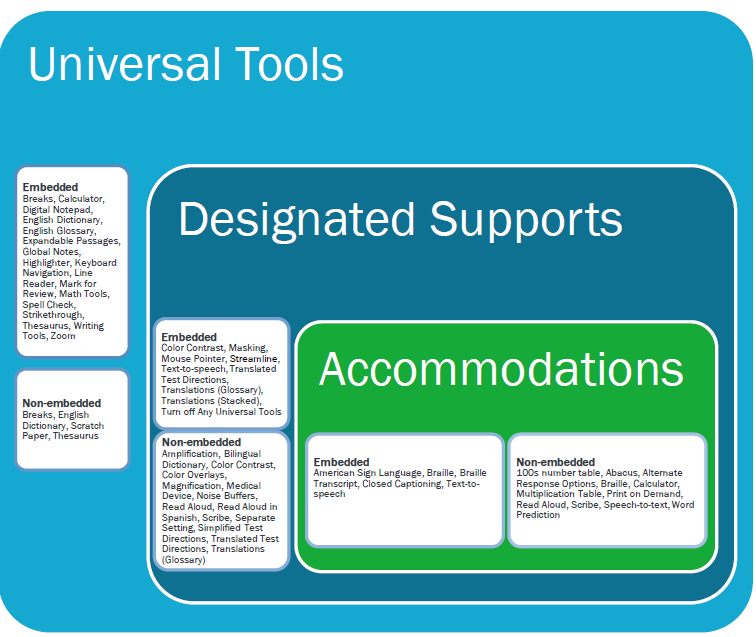

In the process of developing its next-generation assessments to measure students’ knowledge and skills as they progress toward college and career readiness, Smarter Balanced recognized that the validity of assessment results depends on each student having appropriate universal tools, designated supports, and accommodations when needed, based on the constructs being measured by the assessment. The Smarter Balanced assessment system uses technology intended to deliver assessments that meet the needs of individual students to help ensure that the test is fair. Online/electronic delivery of the assessments helps ensure that students are administered a test that can meet their unique needs for accessibility while still measuring the same construct. During the administration of tests, items and tasks are delivered using a variety of accessibility resources and accommodations that can be administered to students automatically based on their individual profiles. Accessibility resources include, but are not limited to, foreground and background color flexibility, tactile presentation of content (e.g., braille), and translated presentation of assessment content in signed form and selected spoken languages. The complete list of universal tools, designated supports, and accommodations with a description of each and recommendations for use can be found in the Usability, Accessibility, and Accommodations Guidelines. The conceptual model underlying the guidelines is shown in Figure 3.1.

Figure 3.1: Conceptual Model Underlying the Smarter Balanced Usability, Accessibility, and Accommodations Guidelines

Smarter Balanced adopted a common set of universal tools, designated supports, and accommodations. As a starting point, Smarter Balanced surveyed all members to determine their past practices. From these data, Smarter Balanced worked with members and used a deliberative analysis strategy as described in Accommodations for English Language Learners and Students with Disabilities: A Research-Based Decision Algorithm (Abedi & Ewers, 2013) to determine which accessibility resources should be made available during the assessment and whether access to these resources should be moderated by an adult. As a result, some accessibility resources that states traditionally had identified as accommodations were instead embedded in the test or otherwise incorporated into the Smarter Balanced assessments as universal tools or designated supports. Other resources were not incorporated into the assessment because access to these resources was not grounded in research or was determined to interfere with the construct measured. The final list of accessibility resources and the recommended use of the resources can be found in the Usability, Accessibility, and Accommodations Guidelines.

A fundamental goal was to design an assessment that is accessible for all students, regardless of English language proficiency, disability, or other individual circumstances. The three components of the Accessibility and Accommodations Framework (universal tools, designated supports, and accommodations) are designed to meet that need. The intent was to:

- Design and develop items and tasks to ensure that all students have access to the items and tasks designed to measure the targeted constructs. In addition, deliver items and tasks, and collect student responses, in a way that maximizes validity for each student.

- Adopt the conceptual model embodied in the Accessibility and Accommodations Framework, which describes accessibility resources of digitally delivered items/tasks and acknowledges the need for some adult-monitored accommodations. The model also characterizes accessibility resources as a continuum, ranging from those available to all students to those implemented under adult supervision and available only to students with a documented need.

- Implement an individualized and systematic needs profile for students, the Individual Student Assessment Accessibility Profile (ISAAP), that promotes the provision of appropriate access and tools for each student. Smarter Balanced created an ISAAP process, which helps education teams systematically select the most appropriate accessibility resources for each student, and the ISAAP tool, which helps teams record the accessibility resources chosen.

The conceptual framework underlying the Usability, Accessibility, and Accommodations Guidelines is shown in Figure 3.1. This figure portrays several aspects of the Smarter Balanced assessment resources: universal tools (available for all students), designated supports (available when indicated by an adult or team), and accommodations (as documented in an Individualized Education Program or 504 plan). It also displays the additive and sequentially inclusive nature of these three aspects. Universal tools are available to all students, including those receiving designated supports and those receiving accommodations. Designated supports are available only to students who have been identified as needing these resources (as well as to students for whom the need is documented). Accommodations are available only to students whose need is documented through a formal plan (e.g., IEP, 504); these students may also access designated supports and universal tools.
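The additive, sequentially inclusive tiers described above can be expressed as a small eligibility rule. The Python sketch below is illustrative only: `Tier`, `Student`, and `eligible` are hypothetical names, not part of any Smarter Balanced system. It also ignores the fact that a given resource may belong to a different tier depending on content target and grade.

```python
from dataclasses import dataclass
from enum import Enum


class Tier(Enum):
    """The three categories of accessibility resources (hypothetical model)."""
    UNIVERSAL_TOOL = 1      # available to all students
    DESIGNATED_SUPPORT = 2  # available when indicated by an adult or team
    ACCOMMODATION = 3       # available only with a documented IEP or 504 plan


@dataclass
class Student:
    # Hypothetical fields for illustration only.
    identified_by_team: bool = False  # an adult or team has indicated a need
    has_iep_or_504: bool = False      # need documented in a formal plan


def eligible(student: Student, tier: Tier) -> bool:
    """Return True if the student may be assigned a resource of this tier.

    The tiers are additive: a student with a formal plan can also use
    designated supports and universal tools.
    """
    if tier is Tier.UNIVERSAL_TOOL:
        return True
    if tier is Tier.DESIGNATED_SUPPORT:
        # Identified need, or a need documented in a formal plan.
        return student.identified_by_team or student.has_iep_or_504
    # Accommodations require formal documentation.
    return student.has_iep_or_504


general = Student()
with_plan = Student(has_iep_or_504=True)
print([eligible(general, t) for t in Tier])    # [True, False, False]
print([eligible(with_plan, t) for t in Tier])  # [True, True, True]
```

Encoding the tiers as nested conditions (each tier's condition implies the lower tiers') reflects the "sequentially inclusive" structure directly.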

A universal tool or a designated support may also be an accommodation, depending on the content target and grade. This approach is consistent with the emphasis that Smarter Balanced has placed on the validity of assessment results coupled with access. Universal tools, designated supports, and accommodations are all intended to yield valid scores. Also shown in Figure 3.1 are the universal tools, designated supports, and accommodations for each category of accessibility resources. Accessibility resources may be embedded or non-embedded; the distinction is based on how the resource is provided (either within digitally delivered components of the test or outside of the test delivery system).

The specific universal tools, designated supports, and accommodations approved by Smarter Balanced may change in the future if additional tools, supports, or accommodations are identified for the assessment based on member experience and research findings. The Consortium has established a standing committee, including representatives from governing members, that reviews suggested additional universal tools, designated supports, and accommodations to determine whether changes are warranted. Proposed changes to the list of universal tools, designated supports, and accommodations are brought to governing members for review, input, and a vote for approval.

3.3 Meeting the Needs of Traditionally Underrepresented Populations

A policy decision was made during the development of the Smarter Balanced assessments to make accessibility resources available to all students based on need rather than on eligibility status or demographic group designation. This decision reflects a belief among Consortium states that unnecessarily restricting access to accessibility resources threatens the validity of the assessment results and places students under undue stress and frustration. Accommodations are additionally available for students who qualify for them. A description of how this needs-based approach benefits ELs, students with disabilities, and ELs with disabilities is presented here.

3.3.1 Students Who Are ELs

Students who are ELs have needs that differ from those of students with disabilities, including language-related disabilities. The needs of ELs are not the result of a language-related disability but are specific to each student’s current level of English language proficiency. The needs of ELs are diverse and are influenced by the interaction of several factors, including their current level of English language proficiency, their prior exposure to academic content and language in their native language, the languages to which they are exposed outside of school, the length of time they have participated in the U.S. education system, and the language(s) in which academic content is presented in the classroom. Given the unique background and needs of each student, the conceptual framework is designed to focus on students as individuals and to provide several accessibility resources that can be combined in a variety of ways. Some of these digital tools, such as a highlighter for marking key information and audio presentation of test navigation features, are available to all students, including those at various stages of English language development. Other tools, such as audio presentation of items and glossary definitions in English, may also be assigned to any student, including those at various stages of English language development. Still other tools, such as embedded glossaries that present translations of construct-irrelevant terms, are intended for students whose prior language experiences would allow them to benefit from translations into another language. Collectively, the conceptual framework for usability, accessibility, and accommodations embraces a variety of accessibility resources designed to meet the needs of students at various stages of English language development.

3.3.2 Students with Disabilities

Federal law (Individuals with Disabilities Education Act, 2004) requires that students with disabilities and a documented need receive accommodations that address those needs and that they participate in assessments. The intent of the law is to ensure that all students have appropriate access to instructional materials and are held to the same high standards. When students are assessed, the law ensures that students receive appropriate accommodations during testing so they can appropriately demonstrate what they know and so their achievement is measured accurately.

The Accessibility and Accommodations Framework (Smarter Balanced, 2016a) addresses the needs of students with disabilities in three ways. First, it provides for the use of digital test items that are purposefully designed to contain multiple forms of the item, each developed to address a specific access need. By allowing the delivery of a given item to be tailored based on each student’s accommodation, the Framework fulfills the intent of federal accommodation legislation. Embedding universal accessibility digital tools, however, addresses only a portion of the access needs required by many students with disabilities. Second, by embedding accessibility resources in the digital test delivery system, additional access needs are met. This approach fulfills the intent of the law for many, but not all, students with disabilities by allowing the accessibility resources to be activated for students based on their needs. Third, by allowing for a wide variety of digital and locally provided accommodations (including physical arrangements), the Framework addresses a spectrum of accessibility resources appropriate for mathematics and ELA/literacy assessment. Collectively, the Framework adheres to federal regulations by allowing a combination of universal design principles, universal tools, designated supports, and accommodations to be embedded in a digital delivery system and assigned and provided based on individual student needs. Therefore, a student with a disability benefits from the system because they may be eligible to access resources from any of the three categories as necessary to create an assessment tailored to their individual need.

3.4 The Individual Student Assessment Accessibility Profile (ISAAP)

In typical past practice, schools and educators were frequently required to document, a priori, the need for specific student accommodations and then to document the use of those accommodations after the assessment. For example, most programs required schools to document a student’s need for a large-print version of a test so that one could be delivered to the school. Following the test administration, the school documented (often by bubbling in information on an answer sheet) which accommodations, if any, a given student received, whether the student actually used the large-print form, and whether any other accommodations were provided.

Universal tools are available by default in the Smarter Balanced test delivery system. These tools can be deactivated if they create an unnecessary distraction for the student. Embedded designated supports and accommodations must be enabled by an informed educator or group of educators with the student when appropriate. To facilitate the decision-making process around individual student accessibility needs, Smarter Balanced has established an Individual Student Assessment Accessibility Profile (ISAAP) tool. The ISAAP tool is designed to facilitate selection of the universal tools, designated supports, and accommodations that match student access needs for the Smarter Balanced assessments, as supported by the Smarter Balanced Usability, Accessibility, and Accommodations Guidelines. Smarter Balanced recommends that the ISAAP tool be used in conjunction with the Smarter Balanced Usability, Accessibility, and Accommodations Guidelines and state regulations and policies related to assessment accessibility as a part of the ISAAP process. For students requiring one or more accessibility resources, schools are able to document this need prior to test administration. Furthermore, the ISAAP can include information about universal tools that may need to be eliminated for a given student. By documenting needs prior to test administration, a digital delivery system is able to activate the specified options when the student logs in to an assessment. In this way, the profile permits school-level personnel to focus on each individual student, documenting the accessibility resources required for valid assessment of that student in a way that is efficient to manage.
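The login-time behavior described above can be sketched as a simple resolution step. Universal tools are on by default unless the profile deactivates them. Designated supports and accommodations are active only when they were documented before testing. This is a hypothetical model, not the actual test delivery system: `AccessibilityProfile`, `resources_at_login`, and the resource name strings are assumptions for illustration. The authoritative list of resources is in the Usability, Accessibility, and Accommodations Guidelines.

```python
from dataclasses import dataclass, field

# Hypothetical, illustrative universal tools; see the UAAG for the real list.
DEFAULT_UNIVERSAL_TOOLS = frozenset({"highlighter", "zoom", "digital_notepad"})


@dataclass
class AccessibilityProfile:
    """A simplified, hypothetical stand-in for an ISAAP record."""
    deactivated_universal_tools: set[str] = field(default_factory=set)
    designated_supports: set[str] = field(default_factory=set)
    accommodations: set[str] = field(default_factory=set)


def resources_at_login(profile: AccessibilityProfile) -> set[str]:
    """Resolve which embedded resources to activate when the student logs in."""
    # Universal tools are on by default unless the profile turns them off
    # (for example, because they would distract this student).
    active = set(DEFAULT_UNIVERSAL_TOOLS - profile.deactivated_universal_tools)
    # Designated supports and accommodations are active only if documented beforehand.
    active |= profile.designated_supports
    active |= profile.accommodations
    return active


profile = AccessibilityProfile(
    deactivated_universal_tools={"highlighter"},
    designated_supports={"color_contrast"},
)
print(sorted(resources_at_login(profile)))
# ['color_contrast', 'digital_notepad', 'zoom']
```

The sketch does not re-check eligibility. In the process described here, an informed educator or team makes the assignment decisions before the profile is ever read at login.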

The conceptual framework shown in Figure 3.1 provides a structure that assists in identifying which accessibility resources should be made available for each student. In addition, the conceptual framework is designed to differentiate between universal tools available to all students and accessibility resources that must be assigned before the administration of the assessment. Consistent with recommendations from Shafer Willner & Rivera (2011); Thurlow et al. (2011); Fedorchak (2012); and Russell (2011), Smarter Balanced encourages school-level personnel to use a team approach to make decisions concerning each student’s ISAAP. Input from individuals with multiple perspectives, including the student, is likely to result in appropriate decisions about the assignment of accessibility resources. Multiple perspectives also help avoid selecting too many accessibility resources for a student; too many unneeded resources can distract the student and decrease the student’s performance.

3.5 Usability, Accessibility, and Accommodations Guidelines

Smarter Balanced developed the Usability, Accessibility, and Accommodations Guidelines (UAAG, available at https://portal.smarterbalanced.org/library/usability-accessibility-and-accommodations-guidelines/) to guide its members in selecting and administering universal tools, designated supports, and accommodations. The UAAG is intended for school-level personnel and decision-making teams, particularly Individualized Education Program (IEP) teams, as they prepare for and implement the Smarter Balanced summative and interim assessments. It provides information for classroom teachers, English language development educators, special education teachers, and related services personnel to select and administer universal tools, designated supports, and accommodations for those students who need them. The UAAG is also intended for assessment staff and administrators who oversee the decisions that are made in instruction and assessment. It emphasizes an individualized approach to implementing assessment practices for students who have diverse needs and participate in large-scale assessments. The UAAG focuses on universal tools, designated supports, and accommodations for the Smarter Balanced summative and interim assessments in ELA/literacy and mathematics. At the same time, it supports important instructional decisions about accessibility for students and recognizes the critical connection between accessibility in instruction and accessibility during assessment. All Interim Comprehensive Assessments (ICAs), Interim Assessment Blocks (IABs), and Focused Interim Assessment Blocks (FIABs) are fully accessible and offer all accessibility resources as appropriate by grade and content area, including ASL, braille, and Spanish.

3.6 Differential Item Functioning (DIF)

DIF analyses are used to identify items that have different measurement properties for students in different demographic groups who are matched on overall achievement. Information about DIF and the procedures for reviewing items flagged for DIF is a component of validity evidence associated with the internal properties of the test.

3.6.1 Method of Assessing DIF

Differential item functioning (DIF) analyses are performed on items using data gathered in field testing. A DIF analysis compares performance on an item between two groups that are similar in overall achievement but differ demographically. The two groups are generally called the focal and reference groups. The focal group is usually a minority group (e.g., Hispanic or Latino), while the reference group is usually a contrasting majority group (e.g., White) or all students who are not part of the focal group. Table 3.1 shows the focal and reference groups for the DIF analyses performed by Smarter Balanced.

Table 3.1: Focal and Reference Groups for DIF Analyses

| Group Type | Focal Group | Reference Group |
|---|---|---|
| Gender | Female | Male |
| Race/Ethnicity | Black/African American | White |
| | Asian/Pacific Islander | White |
| | Native American/Alaska Native | White |
| | Hispanic/Latino | White |
| Special Populations | Limited English Proficient (LEP) | English Proficient |
| | Individualized Education Program (IEP) | No IEP |
| | Title 1 (Economically disadvantaged) | Not Title 1 |

Items are classified into three DIF categories according to the observed degree of DIF for a particular focal-reference comparison: “A” (negligible), “B” (slight or moderate), or “C” (moderate to large). The B and C categories are also signed. A positive sign (+) means that the focal group performs better on the item than the reference group. A negative sign (-) means that the reference group performs better on the item than the focal group. For details on how DIF statistics are computed and the criteria for flagging items by degree of DIF, see the current Smarter Balanced summative technical report on the Smarter Balanced website. If the sample size for either the reference group or the focal group is too small, DIF analyses may not be carried out or may fail to detect DIF.
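This chapter defers the computational details to the summative technical report. The sketch below therefore illustrates only one widely used approach, under stated assumptions: the Mantel-Haenszel (MH) procedure for dichotomous items, reported on the ETS delta scale as MH D-DIF = -2.35 ln(α_MH), where α_MH is the MH common odds ratio pooled across matched score levels. Negative values mean the item favors the reference group, which matches the sign convention above. The function names are hypothetical. The classification uses magnitude thresholds only (|D| < 1.0 gives A, |D| ≥ 1.5 gives C, otherwise B) and omits the significance tests that the full ETS rules also require. Polytomous items generally need a different statistic.

```python
import math
from typing import Sequence, Tuple

# One 2x2 table per matched score level k:
# (ref_correct, ref_incorrect, focal_correct, focal_incorrect)
Stratum = Tuple[int, int, int, int]


def mh_d_dif(strata: Sequence[Stratum]) -> float:
    """Mantel-Haenszel D-DIF on the ETS delta scale: -2.35 * ln(alpha_MH).

    Negative values mean the item favors the reference group;
    positive values mean it favors the focal group.
    """
    num = 0.0  # sum over k of A_k * D_k / N_k
    den = 0.0  # sum over k of B_k * C_k / N_k
    for a, b, c, d in strata:
        n = a + b + c + d
        if n == 0:
            continue  # an empty score level contributes nothing
        num += a * d / n
        den += b * c / n
    if num == 0 or den == 0:
        raise ValueError("common odds ratio is undefined for these counts")
    alpha_mh = num / den
    return -2.35 * math.log(alpha_mh)


def ets_category(d_dif: float) -> str:
    """Magnitude-only ETS-style label, e.g. 'A', 'B-', 'C+'.

    Simplification: significance tests required by the full rules are omitted.
    """
    size = abs(d_dif)
    if size < 1.0:
        return "A"
    letter = "C" if size >= 1.5 else "B"
    return letter + ("+" if d_dif > 0 else "-")


# Two hypothetical score levels in which the focal group does worse.
d = mh_d_dif([(30, 20, 20, 30), (45, 5, 40, 10)])
print(round(d, 2), ets_category(d))  # -1.91 C-
```

In the example, both score levels give the same pooled odds ratio (α_MH = 13.5 / 6 = 2.25). The resulting D-DIF is about -1.91, which this simplified rule labels C-.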

Items flagged for C-level DIF are subsequently reviewed by content experts and bias/sensitivity committees to determine the source and meaning of performance differences. An item flagged for C-level DIF may be measuring something different from the intended construct for one of the groups. However, it is important to recognize that DIF-flagged items might be related to actual differences in relevant knowledge and skills or may have been flagged due to chance variation in the DIF statistic (known as statistical type I error). As Cole & Zieky (2001) noted, “If the members of the measurement community currently agree on any aspect of fairness, it is that score differences alone are not proof of bias.”
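To see why chance alone can produce flags, consider a hypothetical case with no true DIF. Assume each comparison is an independent test at significance level α. The sketch below computes the expected number of false flags and the probability of at least one. The 40 items × 8 comparisons figure roughly mirrors a single grade in the tables in this chapter, but α = 0.05 is an assumed value, and `chance_flags` is a hypothetical helper. Under the usual ETS rules, C-level flags require both statistical significance and a large effect size, so their chance rate is lower than this simple calculation suggests.

```python
def chance_flags(n_tests: int, alpha: float) -> tuple[float, float]:
    """Expected number of false flags, and P(at least one), with no true DIF.

    Assumes the n_tests comparisons are independent and that each has
    type I error rate alpha.
    """
    if n_tests < 0 or not 0.0 <= alpha <= 1.0:
        raise ValueError("need n_tests >= 0 and 0 <= alpha <= 1")
    expected = n_tests * alpha
    p_any = 1.0 - (1.0 - alpha) ** n_tests
    return expected, p_any


# 40 items x 8 focal-reference comparisons, at an assumed alpha of 0.05.
expected, p_any = chance_flags(n_tests=40 * 8, alpha=0.05)
print(f"expected false flags: {expected:.1f}, P(at least one): {p_any:.4f}")
```

Even modest per-test error rates make some chance flags nearly certain across hundreds of comparisons. This is one reason flagged items go to expert review rather than being treated as proof of bias.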

3.7 DIF Analysis Results for Interim Assessment Items

Table 3.2 through Table 3.7 show the counts of items used on interim assessments that showed performance differences at the A, B, or C levels for each DIF comparison evaluated when the item was field tested. Category “N/A” indicates demographic comparisons for which sample sizes did not meet the minimum requirement for DIF testing. DIF tests for most items used on interim assessments indicated only negligible demographic-related performance differences.
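Each cell in these tables counts how many items in a grade fell into a given category for one focal-reference comparison. Each column should therefore sum to the number of items analyzed in that grade. The helper below sketches that tally. The `tally` name, the category list ordering, and the use of `None` for items whose samples were too small are assumptions for illustration.

```python
from collections import Counter
from typing import Optional, Sequence

# Row order used in the DIF tables.
CATEGORIES = ["N/A", "A", "B-", "B+", "C-", "C+"]


def tally(labels: Sequence[Optional[str]]) -> dict[str, int]:
    """Count items per DIF category for one comparison (one table column).

    None marks an item whose focal or reference sample was too small
    for DIF testing; it is reported as 'N/A'.
    """
    counts = Counter("N/A" if label is None else label for label in labels)
    unknown = set(counts) - set(CATEGORIES)
    if unknown:
        raise ValueError(f"unexpected labels: {unknown}")
    return {cat: counts.get(cat, 0) for cat in CATEGORIES}


# Hypothetical labels for six items in one comparison.
column = tally(["A", "A", "B-", None, "A", "C+"])
print(column)  # {'N/A': 1, 'A': 3, 'B-': 1, 'B+': 0, 'C-': 0, 'C+': 1}
print(sum(column.values()))  # 6, the number of items
```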

Table 3.2 and Table 3.3 show the number of items in each DIF category for ELA/literacy and mathematics in grades 3 to 8 and 11 for the ICAs.

Table 3.4 and Table 3.5 show the number of items in each DIF category for ELA/literacy and mathematics in grades 3 to 8 and 11 for the IABs.

Table 3.6 and Table 3.7 show the number of items in each DIF category for ELA/literacy and mathematics in grades 3 to 8 and 11 for the FIABs.

All items on the interim assessments underwent and passed bias and sensitivity reviews before they were field tested. After DIF statistics were obtained for these items, qualified subject-matter and bias and sensitivity experts reviewed items classified into DIF category C. Only items approved by a multidisciplinary panel of content and sensitivity experts are eligible for use on Smarter Balanced assessments.

| Grade | DIF Category | Female/Male | Asian/White | Black/White | Hispanic/White | Native American/White | IEP/Non-IEP | LEP/Non-LEP | Title 1/Non-Title 1 |
|---|---|---|---|---|---|---|---|---|---|
| 3 | N/A | 0 | 1 | 1 | 0 | 15 | 0 | 0 | 0 |
| | A | 40 | 38 | 38 | 39 | 25 | 39 | 38 | 40 |
| | B- | 0 | 0 | 1 | 1 | 0 | 0 | 1 | 0 |
| | B+ | 0 | 1 | 0 | 0 | 0 | 1 | 1 | 0 |
| | C- | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| | C+ | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| 4 | N/A | 0 | 0 | 0 | 0 | 18 | 0 | 0 | 0 |
| | A | 42 | 40 | 42 | 41 | 23 | 41 | 41 | 41 |
| | B- | 0 | 1 | 0 | 1 | 1 | 1 | 1 | 1 |
| | B+ | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 |
| | C- | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| | C+ | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| 5 | N/A | 0 | 1 | 0 | 0 | 20 | 0 | 0 | 0 |
| | A | 42 | 37 | 38 | 39 | 19 | 41 | 38 | 42 |
| | B- | 0 | 4 | 4 | 2 | 2 | 1 | 4 | 0 |
| | B+ | 0 | 0 | 0 | 1 | 1 | 0 | 0 | 0 |
| | C- | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| | C+ | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| 6 | N/A | 0 | 3 | 1 | 0 | 21 | 0 | 2 | 0 |
| | A | 40 | 38 | 42 | 43 | 21 | 43 | 40 | 43 |
| | B- | 1 | 0 | 0 | 0 | 1 | 0 | 0 | 0 |
| | B+ | 2 | 2 | 0 | 0 | 0 | 0 | 1 | 0 |
| | C- | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| | C+ | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| 7 | N/A | 0 | 1 | 0 | 0 | 13 | 1 | 1 | 0 |
| | A | 40 | 40 | 42 | 43 | 30 | 42 | 42 | 43 |
| | B- | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| | B+ | 2 | 2 | 1 | 0 | 0 | 0 | 0 | 0 |
| | C- | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| | C+ | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| 8 | N/A | 0 | 0 | 0 | 0 | 8 | 0 | 0 | 0 |
| | A | 39 | 38 | 40 | 39 | 31 | 41 | 39 | 40 |
| | B- | 0 | 2 | 1 | 2 | 1 | 0 | 2 | 1 |
| | B+ | 1 | 1 | 0 | 0 | 1 | 0 | 0 | 0 |
| | C- | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| | C+ | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| 11 | N/A | 0 | 4 | 4 | 1 | 33 | 4 | 9 | 0 |
| | A | 39 | 34 | 36 | 38 | 8 | 35 | 32 | 40 |
| | B- | 1 | 2 | 0 | 1 | 0 | 2 | 0 | 1 |
| | B+ | 1 | 1 | 1 | 1 | 0 | 0 | 0 | 0 |
| | C- | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| | C+ | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

| Grade | DIF Category | Female/Male | Asian/White | Black/White | Hispanic/White | Native American/White | IEP/Non-IEP | LEP/Non-LEP | Title 1/Non-Title 1 |
|---|---|---|---|---|---|---|---|---|---|
| 3 | N/A | 0 | 0 | 0 | 0 | 30 | 0 | 0 | 0 |
| | A | 37 | 34 | 34 | 35 | 7 | 37 | 36 | 37 |
| | B- | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 |
| | B+ | 0 | 3 | 2 | 1 | 0 | 0 | 0 | 0 |
| | C- | 0 | 0 | 0 | 1 | 0 | 0 | 1 | 0 |
| | C+ | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| 4 | N/A | 0 | 1 | 0 | 0 | 6 | 0 | 0 | 0 |
| | A | 35 | 33 | 35 | 36 | 28 | 36 | 36 | 36 |
| | B- | 0 | 1 | 0 | 0 | 2 | 0 | 0 | 0 |
| | B+ | 1 | 1 | 1 | 0 | 0 | 0 | 0 | 0 |
| | C- | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| | C+ | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| 5 | N/A | 0 | 0 | 0 | 0 | 10 | 0 | 0 | 0 |
| | A | 37 | 32 | 36 | 37 | 27 | 37 | 36 | 37 |
| | B- | 0 | 1 | 0 | 0 | 0 | 0 | 1 | 0 |
| | B+ | 0 | 4 | 1 | 0 | 0 | 0 | 0 | 0 |
| | C- | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| | C+ | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| 6 | N/A | 0 | 0 | 0 | 0 | 35 | 0 | 0 | 0 |
| | A | 36 | 36 | 36 | 36 | 1 | 36 | 36 | 36 |
| | B- | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| | B+ | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| | C- | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| | C+ | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| 7 | N/A | 0 | 0 | 0 | 0 | 37 | 0 | 0 | 0 |
| | A | 35 | 35 | 37 | 36 | 0 | 34 | 35 | 37 |
| | B- | 1 | 0 | 0 | 0 | 0 | 0 | 2 | 0 |
| | B+ | 1 | 2 | 0 | 1 | 0 | 3 | 0 | 0 |
| | C- | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| | C+ | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| 8 | N/A | 0 | 2 | 0 | 0 | 36 | 0 | 0 | 0 |
| | A | 37 | 29 | 36 | 35 | 1 | 36 | 36 | 37 |
| | B- | 0 | 3 | 0 | 2 | 0 | 0 | 0 | 0 |
| | B+ | 0 | 2 | 1 | 0 | 0 | 1 | 1 | 0 |
| | C- | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 |
| | C+ | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| 11 | N/A | 0 | 3 | 1 | 0 | 38 | 2 | 3 | 0 |
| | A | 36 | 31 | 35 | 38 | 0 | 34 | 34 | 38 |
| | B- | 1 | 1 | 1 | 0 | 0 | 0 | 1 | 0 |
| | B+ | 1 | 2 | 1 | 0 | 0 | 2 | 0 | 0 |
| | C- | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| | C+ | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 |

| Grade | DIF Category | Female/Male | Asian/White | Black/White | Hispanic/White | Native American/White | IEP/Non-IEP | LEP/Non-LEP | Title 1/Non-Title 1 |
|---|---|---|---|---|---|---|---|---|---|
| 3 | N/A | 0 | 11 | 7 | 0 | 45 | 5 | 0 | 0 |
| | A | 70 | 60 | 63 | 73 | 29 | 65 | 71 | 74 |
| | B- | 0 | 1 | 2 | 1 | 0 | 0 | 2 | 0 |
| | B+ | 4 | 2 | 2 | 0 | 0 | 4 | 1 | 0 |
| | C- | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| | C+ | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| 4 | N/A | 0 | 11 | 3 | 0 | 36 | 4 | 0 | 0 |
| | A | 69 | 61 | 69 | 72 | 35 | 68 | 73 | 73 |
| | B- | 0 | 0 | 0 | 0 | 2 | 1 | 0 | 0 |
| | B+ | 2 | 1 | 1 | 1 | 0 | 0 | 0 | 0 |
| | C- | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| | C+ | 2 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| 5 | N/A | 0 | 10 | 2 | 0 | 41 | 1 | 2 | 0 |
| | A | 67 | 63 | 70 | 72 | 32 | 71 | 68 | 72 |
| | B- | 2 | 0 | 1 | 1 | 0 | 1 | 3 | 1 |
| | B+ | 4 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| | C- | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| | C+ | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| 6 | N/A | 0 | 6 | 1 | 1 | 48 | 1 | 1 | 0 |
| | A | 66 | 59 | 67 | 67 | 23 | 67 | 65 | 69 |
| | B- | 2 | 3 | 0 | 2 | 0 | 1 | 4 | 2 |
| | B+ | 3 | 2 | 3 | 1 | 0 | 2 | 1 | 0 |
| | C- | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 |
| | C+ | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| 7 | N/A | 0 | 12 | 6 | 2 | 49 | 5 | 8 | 0 |
| | A | 70 | 61 | 67 | 71 | 26 | 67 | 67 | 75 |
| | B- | 2 | 1 | 0 | 1 | 0 | 2 | 0 | 0 |
| | B+ | 3 | 1 | 2 | 1 | 0 | 1 | 0 | 0 |
| | C- | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| | C+ | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| 8 | N/A | 0 | 6 | 1 | 0 | 28 | 5 | 5 | 0 |
| | A | 69 | 62 | 70 | 68 | 45 | 67 | 62 | 72 |
| | B- | 0 | 2 | 1 | 4 | 0 | 1 | 4 | 1 |
| | B+ | 4 | 1 | 1 | 1 | 0 | 0 | 1 | 0 |
| | C- | 0 | 2 | 0 | 0 | 0 | 0 | 1 | 0 |
| | C+ | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| 11 | N/A | 0 | 18 | 22 | 3 | 66 | 23 | 32 | 0 |
| | A | 71 | 50 | 50 | 67 | 8 | 47 | 39 | 73 |
| | B- | 1 | 2 | 0 | 3 | 0 | 3 | 2 | 1 |
| | B+ | 2 | 3 | 2 | 1 | 0 | 1 | 0 | 0 |
| | C- | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 |
| | C+ | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 |

| Grade | DIF Category | Female/Male | Asian/White | Black/White | Hispanic/White | Native American/White | IEP/Non-IEP | LEP/Non-LEP | Title 1/Non-Title 1 |
|---|---|---|---|---|---|---|---|---|---|
| 3 | N/A | 0 | 0 | 0 | 0 | 29 | 0 | 0 | 0 |
| | A | 36 | 32 | 33 | 35 | 7 | 36 | 36 | 36 |
| | B- | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| | B+ | 0 | 4 | 2 | 1 | 0 | 0 | 0 | 0 |
| | C- | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 |
| | C+ | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| 4 | N/A | 0 | 3 | 1 | 0 | 28 | 0 | 0 | 0 |
| | A | 66 | 57 | 64 | 66 | 37 | 67 | 66 | 67 |
| | B- | 0 | 2 | 0 | 0 | 0 | 0 | 0 | 0 |
| | B+ | 1 | 4 | 2 | 1 | 2 | 0 | 1 | 0 |
| | C- | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| | C+ | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 |
| 5 | N/A | 0 | 0 | 2 | 0 | 29 | 0 | 0 | 0 |
| | A | 64 | 58 | 61 | 65 | 34 | 65 | 62 | 65 |
| | B- | 1 | 1 | 0 | 0 | 0 | 0 | 2 | 0 |
| | B+ | 0 | 4 | 2 | 0 | 2 | 0 | 1 | 0 |
| | C- | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| | C+ | 0 | 2 | 0 | 0 | 0 | 0 | 0 | 0 |
| 6 | N/A | 0 | 1 | 4 | 0 | 37 | 0 | 0 | 0 |
| | A | 36 | 35 | 32 | 36 | 0 | 37 | 37 | 37 |
| | B- | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| | B+ | 0 | 0 | 1 | 1 | 0 | 0 | 0 | 0 |
| | C- | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| | C+ | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 |
| 7 | N/A | 0 | 2 | 1 | 0 | 31 | 0 | 1 | 0 |
| | A | 34 | 29 | 32 | 33 | 3 | 33 | 33 | 34 |
| | B- | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 |
| | B+ | 0 | 3 | 0 | 1 | 0 | 1 | 0 | 0 |
| | C- | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| | C+ | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| 8 | N/A | 0 | 9 | 6 | 1 | 34 | 1 | 5 | 0 |
| | A | 34 | 22 | 27 | 32 | 0 | 33 | 28 | 34 |
| | B- | 0 | 2 | 0 | 1 | 0 | 0 | 1 | 0 |
| | B+ | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 |
| | C- | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 |
| | C+ | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| 11 | N/A | 0 | 27 | 12 | 0 | 58 | 30 | 34 | 0 |
| | A | 56 | 28 | 44 | 54 | 0 | 26 | 24 | 58 |
| | B- | 1 | 1 | 1 | 3 | 0 | 2 | 0 | 0 |
| | B+ | 1 | 2 | 1 | 1 | 0 | 0 | 0 | 0 |
| | C- | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| | C+ | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

| Grade | DIF Category | Female/Male | Asian/White | Black/White | Hispanic/White | Native American/White | IEP/Non-IEP | LEP/Non-LEP | Title 1/Non-Title 1 |
|---|---|---|---|---|---|---|---|---|---|
| 3 | N/A | 0 | 16 | 12 | 0 | 73 | 11 | 6 | 0 |
| | A | 102 | 83 | 88 | 96 | 28 | 91 | 94 | 104 |
| | B- | 1 | 3 | 3 | 8 | 4 | 3 | 5 | 1 |
| | B+ | 1 | 2 | 2 | 0 | 0 | 0 | 0 | 0 |
| | C- | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 |
| | C+ | 1 | 1 | 0 | 0 | 0 | 0 | 0 | 0 |
| 4 | N/A | 0 | 13 | 5 | 0 | 73 | 4 | 2 | 0 |
| | A | 99 | 84 | 92 | 101 | 33 | 94 | 99 | 104 |
| | B- | 1 | 3 | 6 | 3 | 0 | 7 | 3 | 2 |
| | B+ | 4 | 5 | 2 | 1 | 0 | 1 | 0 | 0 |
| | C- | 0 | 0 | 0 | 1 | 0 | 0 | 2 | 0 |
| | C+ | 2 | 1 | 1 | 0 | 0 | 0 | 0 | 0 |
| 5 | N/A | 0 | 23 | 10 | 0 | 79 | 7 | 9 | 0 |
| | A | 100 | 75 | 89 | 98 | 24 | 95 | 89 | 103 |
| | B- | 1 | 5 | 6 | 4 | 2 | 3 | 4 | 1 |
| | B+ | 2 | 2 | 0 | 1 | 0 | 0 | 0 | 0 |
| | C- | 0 | 0 | 0 | 2 | 0 | 0 | 2 | 1 |
| | C+ | 2 | 0 | 0 | 0 | 0 | 0 | 1 | 0 |
| 6 | N/A | 0 | 13 | 3 | 0 | 68 | 3 | 6 | 0 |
| | A | 93 | 77 | 90 | 93 | 29 | 91 | 82 | 95 |
| | B- | 1 | 3 | 3 | 2 | 0 | 2 | 7 | 2 |
| | B+ | 2 | 2 | 1 | 1 | 0 | 0 | 0 | 0 |
| | C- | 1 | 2 | 0 | 1 | 0 | 1 | 2 | 0 |
| | C+ | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| 7 | N/A | 0 | 5 | 1 | 0 | 67 | 2 | 2 | 0 |
| | A | 100 | 94 | 98 | 102 | 38 | 101 | 94 | 104 |
| | B- | 1 | 2 | 5 | 3 | 1 | 3 | 10 | 2 |
| | B+ | 3 | 5 | 1 | 0 | 0 | 0 | 0 | 0 |
| | C- | 1 | 0 | 1 | 1 | 0 | 0 | 0 | 0 |
| | C+ | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| 8 | N/A | 0 | 10 | 3 | 0 | 68 | 2 | 8 | 0 |
| | A | 88 | 77 | 93 | 93 | 29 | 89 | 72 | 95 |
| | B- | 4 | 4 | 2 | 3 | 1 | 7 | 15 | 3 |
| | B+ | 4 | 6 | 0 | 1 | 0 | 0 | 0 | 0 |
| | C- | 1 | 0 | 0 | 1 | 0 | 0 | 3 | 0 |
| | C+ | 1 | 1 | 0 | 0 | 0 | 0 | 0 | 0 |
| 11 | N/A | 0 | 23 | 27 | 0 | 92 | 31 | 46 | 0 |
| | A | 91 | 70 | 68 | 92 | 6 | 66 | 47 | 96 |
| | B- | 5 | 3 | 2 | 6 | 0 | 1 | 3 | 2 |
| | B+ | 1 | 2 | 1 | 0 | 0 | 0 | 2 | 0 |
| | C- | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| | C+ | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

| Grade | DIF Category | Female/Male | Asian/White | Black/White | Hispanic/White | Native American/White | IEP/Non-IEP | LEP/Non-LEP | Title 1/Non-Title 1 |
|---|---|---|---|---|---|---|---|---|---|
| 3 | N/A | 0 | 14 | 0 | 0 | 112 | 0 | 0 | 0 |
| | A | 114 | 85 | 102 | 106 | 4 | 112 | 108 | 114 |
| | B- | 1 | 2 | 7 | 7 | 0 | 4 | 3 | 2 |
| | B+ | 0 | 12 | 7 | 3 | 0 | 0 | 5 | 0 |
| | C- | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| | C+ | 0 | 3 | 0 | 0 | 0 | 0 | 0 | 0 |
| 4 | N/A | 0 | 1 | 2 | 0 | 90 | 0 | 0 | 0 |
| | A | 105 | 98 | 101 | 106 | 19 | 102 | 104 | 108 |
| | B- | 2 | 2 | 3 | 1 | 0 | 7 | 2 | 1 |
| | B+ | 2 | 6 | 3 | 2 | 0 | 0 | 3 | 0 |
| | C- | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| | C+ | 0 | 2 | 0 | 0 | 0 | 0 | 0 | 0 |
| 5 | N/A | 0 | 4 | 9 | 0 | 71 | 0 | 2 | 0 |
| | A | 86 | 76 | 75 | 87 | 17 | 84 | 82 | 88 |
| | B- | 3 | 1 | 3 | 1 | 0 | 5 | 5 | 1 |
| | B+ | 0 | 6 | 2 | 1 | 0 | 0 | 0 | 0 |
| | C- | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| | C+ | 0 | 2 | 0 | 0 | 1 | 0 | 0 | 0 |
| 6 | N/A | 0 | 4 | 14 | 0 | 105 | 4 | 1 | 0 |
| | A | 112 | 98 | 95 | 110 | 11 | 104 | 109 | 114 |
| | B- | 0 | 1 | 3 | 2 | 0 | 8 | 3 | 1 |
| | B+ | 4 | 11 | 4 | 4 | 0 | 0 | 3 | 1 |
| | C- | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 |
| | C+ | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 |
| 7 | N/A | 0 | 10 | 20 | 0 | 83 | 6 | 8 | 0 |
| | A | 82 | 65 | 63 | 82 | 4 | 75 | 74 | 85 |
| | B- | 1 | 2 | 0 | 3 | 0 | 3 | 5 | 2 |
| | B+ | 3 | 8 | 4 | 2 | 0 | 3 | 0 | 0 |
| | C- | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| | C+ | 0 | 2 | 0 | 0 | 0 | 0 | 0 | 0 |
| 8 | N/A | 0 | 13 | 16 | 0 | 81 | 3 | 8 | 0 |
| | A | 81 | 68 | 68 | 82 | 4 | 77 | 74 | 83 |
| | B- | 4 | 2 | 0 | 1 | 0 | 3 | 3 | 1 |
| | B+ | 0 | 2 | 1 | 1 | 0 | 2 | 0 | 0 |
| | C- | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 1 |
| | C+ | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| 11 | N/A | 24 | 74 | 53 | 27 | 126 | 82 | 89 | 24 |
| | A | 87 | 37 | 71 | 92 | 0 | 41 | 31 | 101 |
| | B- | 5 | 2 | 0 | 4 | 0 | 1 | 3 | 1 |
| | B+ | 8 | 10 | 1 | 3 | 0 | 2 | 2 | 0 |
| | C- | 1 | 0 | 1 | 0 | 0 | 0 | 0 | 0 |
| | C+ | 1 | 3 | 0 | 0 | 0 | 0 | 1 | 0 |