Chapter 2 Reliability, Precision, and Errors of Measurement

2.1 Introduction

This chapter addresses the technical quality of operational test functioning with regard to precision and reliability. Part of the test validity argument is that scores must be consistent and precise enough to be useful for intended purposes. If scores are to be meaningful, tests should deliver the same results under repeated administrations to the same student or for students of the same ability. In addition, the range of uncertainty around the score should be small enough to support educational decisions. The reliability and precision of a test are examined through analysis of measurement error and other test properties in simulated and operational conditions. For example, the reliability of a test may be assessed in part by verifying that different test forms follow the same blueprint. In computer adaptive testing (CAT), one cannot expect the same set of items to be administered to the same examinee more than once. Consequently, reliability is inferred from internal test properties, including test length and the information provided by item parameters. Measurement precision is enhanced when the student receives items that are well matched, in terms of difficulty, to the overall performance level of the student. Measurement precision is also enhanced when the items a student receives work well together to measure the same general body of knowledge, skills, and abilities defined by the test blueprint. Smarter Balanced uses an adaptive model because adaptive tests are customized to each student in terms of the difficulty of the items. Smarter Balanced uses item quality control procedures to ensure that test items measure the knowledge, skills, and abilities specified in the test blueprint and work well together in this respect. The expected outcome of these and other test administration and item quality control procedures is high reliability and low measurement error.

Statistics in this chapter are based on simulated data or real data from the 2018-19 administration. For grades 3 to 8 in both subjects, real-data results were based on data from the following member jurisdictions: CA, DE, HI, ID, MT, NV, OR, SD, and VT. For high school, a single set of real-data results was computed across all high school grades tested. By high school grade, the states included in this chapter were: grade 11 - CA, HI, OR, and SD; grade 10 - ID and WA; grade 9 - VT.

2.2 Measurement Bias

Measurement bias is any systematic or non-random error that occurs in estimating a student’s achievement from the student’s scores on test items. Prior to the release of the 2018-19 item pool, simulation studies were conducted to ensure that the item pool, combined with the adaptive test administration algorithm, would produce satisfactory tests with regard to measurement bias and random measurement error as a function of student achievement, overall reliability, fulfillment of test blueprints, and item exposure.

Results for measurement bias are provided in this section. Measurement bias is the one index of test performance that is clearly and preferentially assessed through simulation as opposed to the use of real data. With real data, true student achievement is unknown. In simulation, true student achievement can be assumed and used to generate item responses. The simulated item responses are used in turn to estimate achievement. Achievement estimates are then compared to the underlying assumed, true values of student achievement to assess whether the estimates contain systematic error (bias).

The other areas of test performance originally assessed through simulation at the time the item pool was released for the 2018-19 administration will be addressed later in this chapter and in Chapter 4 with real data. Simulation results for these areas of test quality were useful at the time they were generated for benchmarking and predicting test quality. When evaluating the performance of a test in practice, results based on real data are preferable to those based on simulation.

Simulations for the 2018-19 administration were conducted by the American Institutes for Research (AIR). The simulations were performed for each grade within subject area for the standard (English) item pool and for the accommodation item pools: braille and Spanish for mathematics, and braille for ELA/literacy. The numbers of items in the standard and accommodation item pools are reported in Chapter 3. The simulations were conducted only for the computer adaptive segment of the test.

For each simulation condition, 1,000 examinees were sampled from the hypothetical distributions of student achievement for each grade and subject. The hypothetical student achievement distributions were based on students’ operational scores in the 2016–17 Smarter Balanced summative tests administered in 12 member states plus the Virgin Islands and the Bureau of Indian Education. Table 2.1 shows the means and standard deviations (SD), on the \(\theta\)-scale, of the student achievement distributions used in the simulations.

Table 2.1: Means and Standard Deviations of Student Achievement (\(\theta\)-Scale) Used in the Simulations

| Grade | ELA/Literacy Mean | ELA/Literacy SD | Mathematics Mean | Mathematics SD |
|---|---|---|---|---|
| 3 | -0.874 | 1.038 | -0.934 | 1.069 |
| 4 | -0.379 | 1.117 | -0.419 | 1.085 |
| 5 | 0.069 | 1.120 | -0.064 | 1.177 |
| 6 | 0.301 | 1.125 | 0.173 | 1.353 |
| 7 | 0.601 | 1.169 | 0.379 | 1.417 |
| 8 | 0.803 | 1.164 | 0.562 | 1.547 |
| 11 | 1.341 | 1.230 | 0.768 | 1.498 |

Test events were created for the simulated examinees using the 2018-19 item pool. Estimated ability ( \(\hat{\theta}\) ) was calculated from the simulated tests using maximum likelihood estimation (MLE) as described in the Smarter Balanced Test Scoring Specifications (Smarter Balanced Assessment Consortium, 2020).

Bias was computed as:

\[\begin{equation} bias = N^{-1}\sum_{i = 1}^{N} (\theta_{i} - \hat{\theta}_{i}) \tag{2.1} \end{equation}\]

and the error variance of the estimated bias is:

\[\begin{equation} var(bias) = \frac{1}{N(N-1)}\sum_{i = 1}^{N} \left(\theta_{i} - \hat{\theta}_{i} - bias\right)^{2} \tag{2.2} \end{equation}\]

where \(N\) denotes the number of simulees (\(N = 1000\) for all conditions). Statistical significance of the bias is tested using a z-test: \[\begin{equation} z = \frac{bias}{\sqrt{var(bias)}} \tag{2.3} \end{equation}\]

Table 2.2 and Table 2.3 show, for ELA/literacy and mathematics respectively, the bias in estimates of student achievement based on the complete test assembled from the regular item pool and from the accommodation pools included in the simulations. The standard error of bias is the denominator of the z-score in equation (2.3). The p-value is the probability \(P(|Z| > |z|)\), where \(Z\) is a standard normal variate and \(|z|\) is the absolute value of the \(z\) computed in equation (2.3). Under the hypothesis of no bias, approximately 5% and 1% of the \(\hat{\theta}_{i}\) will fall outside, respectively, the 95% and 99% confidence intervals centered on \(\theta_{i}\).
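The statistics reported in Tables 2.2 and 2.3 can be sketched in Python as follows, assuming per-simulee lists of \(\theta\), \(\hat{\theta}\), and SEM. Two details are assumptions of this sketch rather than taken from the AIR specification: the MSE column is computed as the mean squared difference between \(\theta\) and \(\hat{\theta}\), and each simulee's own SEM sets the width of the 95% and 99% intervals centered on \(\theta_i\). The usage lines draw 1,000 simulees from the grade 3 ELA/literacy distribution in Table 2.1 and add normal noise in place of the MLE scoring actually used.

```python
import random
from math import sqrt
from statistics import NormalDist

STD_NORMAL = NormalDist()

def bias_statistics(theta, theta_hat, sem):
    """Bias diagnostics for one simulation condition.

    theta     -- true (generating) abilities theta_i
    theta_hat -- estimated abilities for the same simulees
    sem       -- per-simulee standard errors; using them to build the
                 intervals centered on theta_i is an assumption of this sketch
    """
    n = len(theta)
    d = [t - th for t, th in zip(theta, theta_hat)]            # theta_i - theta_hat_i
    bias = sum(d) / n                                           # equation (2.1)
    var_bias = sum((x - bias) ** 2 for x in d) / (n * (n - 1))  # equation (2.2)
    se_bias = sqrt(var_bias)
    z = bias / se_bias                                          # equation (2.3)
    p_value = 2.0 * (1.0 - STD_NORMAL.cdf(abs(z)))              # P(|Z| > |z|)
    mse = sum(x * x for x in d) / n                             # assumed MSE definition
    # Share of estimates falling outside 95% / 99% intervals centered on theta_i.
    z95, z99 = STD_NORMAL.inv_cdf(0.975), STD_NORMAL.inv_cdf(0.995)
    miss95 = sum(abs(x) > z95 * s for x, s in zip(d, sem)) / n
    miss99 = sum(abs(x) > z99 * s for x, s in zip(d, sem)) / n
    return {"bias": bias, "se": se_bias, "z": z, "p": p_value,
            "mse": mse, "miss95": miss95, "miss99": miss99}

# Usage: 1,000 simulees from the grade 3 ELA/literacy distribution (Table 2.1),
# scored by a hypothetical unbiased "estimator" with error SD 0.3.
random.seed(2019)
theta = [random.gauss(-0.874, 1.038) for _ in range(1000)]
sem = [0.3] * len(theta)
theta_hat = [t + random.gauss(0.0, s) for t, s in zip(theta, sem)]
stats = bias_statistics(theta, theta_hat, sem)
print({k: round(v, 3) for k, v in stats.items()})
```

Because the noise in this usage example is unbiased by construction, the miss rates should land near 5% and 1% and the z-test should usually be non-significant, mirroring the "no bias" benchmark described above.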

Mean bias was generally very small in practical terms: it exceeded .02 in absolute value in no cases for ELA/literacy and in only six cases for mathematics, four of which were in the Spanish pool. Because of the large simulation sample sizes, however, mean bias was statistically significant (p < .05) for approximately one-third of the combinations of grade and pool in mathematics and one-fifth of the combinations of grade and pool in ELA/literacy. In virtually all cases, the percentage of simulated examinees whose estimated achievement score fell outside the confidence interval centered on their true score was close to the expected values of 5% for the 95% confidence interval and 1% for the 99% confidence interval. Plots of bias by estimated theta in the full AIR simulation report (American Institutes for Research, 2018) show that positive and statistically significant mean bias (that is, \(\hat{\theta}_i < \theta_i\) on average, given equation (2.1)) was due to thetas being underestimated in regions of student achievement far below the lowest cut score (separating achievement levels 1 and 2). The same plots show that estimation bias is negligible near all cut scores in all cases.

Table 2.2: Bias of Estimated Student Achievement, ELA/Literacy

| Pool | Grade | Mean Bias | SE (Bias) | P value | MSE | 95% CI Miss Rate | 99% CI Miss Rate |
|---|---|---|---|---|---|---|---|
| Standard | 3 | 0.00 | 0.01 | 0.46 | 0.10 | 4.8% | 0.8% |
|  | 4 | -0.02 | 0.01 | 0.00 | 0.12 | 4.5% | 0.7% |
|  | 5 | -0.01 | 0.01 | 0.04 | 0.12 | 4.9% | 1.0% |
|  | 6 | 0.01 | 0.01 | 0.41 | 0.13 | 5.2% | 0.9% |
|  | 7 | -0.01 | 0.01 | 0.37 | 0.15 | 5.4% | 1.0% |
|  | 8 | 0.01 | 0.01 | 0.40 | 0.15 | 4.8% | 0.9% |
|  | 11 | 0.00 | 0.01 | 0.74 | 0.17 | 5.2% | 1.0% |
| Braille | 3 | 0.01 | 0.01 | 0.22 | 0.12 | 5.2% | 1.1% |
|  | 4 | 0.02 | 0.01 | 0.04 | 0.11 | 3.6% | 0.3% |
|  | 5 | -0.01 | 0.01 | 0.24 | 0.12 | 4.5% | 0.6% |
|  | 6 | -0.02 | 0.01 | 0.12 | 0.13 | 4.8% | 0.9% |
|  | 7 | 0.00 | 0.01 | 0.89 | 0.17 | 5.3% | 0.9% |
|  | 8 | -0.01 | 0.01 | 0.54 | 0.18 | 6.2% | 1.6% |
|  | 11 | 0.00 | 0.01 | 0.74 | 0.20 | 5.4% | 0.8% |

Table 2.3: Bias of Estimated Student Achievement, Mathematics

| Pool | Grade | Mean Bias | SE (Bias) | P value | MSE | 95% CI Miss Rate | 99% CI Miss Rate |
|---|---|---|---|---|---|---|---|
| Standard | 3 | 0.00 | 0.00 | 0.82 | 0.07 | 5.6% | 0.9% |
|  | 4 | 0.00 | 0.00 | 0.87 | 0.07 | 5.1% | 1.0% |
|  | 5 | 0.01 | 0.01 | 0.06 | 0.10 | 4.8% | 0.9% |
|  | 6 | 0.01 | 0.01 | 0.14 | 0.11 | 5.0% | 1.0% |
|  | 7 | 0.01 | 0.01 | 0.07 | 0.14 | 4.7% | 0.8% |
|  | 8 | 0.02 | 0.01 | <0.001 | 0.14 | 4.5% | 0.7% |
|  | 11 | 0.02 | 0.01 | <0.001 | 0.19 | 4.6% | 1.0% |
| Braille | 3 | 0.01 | 0.01 | 0.41 | 0.08 | 5.1% | 0.9% |
|  | 4 | 0.01 | 0.01 | 0.51 | 0.08 | 4.1% | 0.4% |
|  | 5 | 0.02 | 0.01 | 0.08 | 0.11 | 4.7% | 1.0% |
|  | 6 | 0.00 | 0.01 | 0.99 | 0.15 | 4.6% | 0.9% |
|  | 7 | 0.02 | 0.01 | 0.10 | 0.14 | 4.1% | 0.5% |
|  | 8 | 0.03 | 0.01 | 0.02 | 0.20 | 4.3% | 0.7% |
|  | 11 | 0.04 | 0.01 | <0.001 | 0.32 | 4.2% | 0.7% |
| Spanish | 3 | 0.00 | 0.01 | 0.55 | 0.06 | 4.0% | 0.7% |
|  | 4 | 0.00 | 0.01 | 0.99 | 0.09 | 5.2% | 1.7% |
|  | 5 | 0.05 | 0.01 | <0.001 | 0.12 | 5.4% | 0.8% |
|  | 6 | 0.02 | 0.01 | 0.19 | 0.17 | 6.5% | 0.8% |
|  | 7 | 0.04 | 0.01 | 0.01 | 0.19 | 4.5% | 1.0% |
|  | 8 | 0.04 | 0.01 | <0.001 | 0.19 | 5.0% | 0.9% |
|  | 11 | 0.05 | 0.01 | <0.001 | 0.30 | 4.7% | 1.1% |

2.3 Reliability

Reliability estimates reported in this section are derived from internal, IRT-based estimates of the measurement error in the test scores of examinees (MSE) and the observed variance of examinees’ test scores on the \(\theta\)-scale \((var(\hat{\theta}))\). The formula for the reliability estimate (rho) is:

\[\begin{equation} \hat{\rho} = 1 - \frac{MSE}{var(\hat{\theta})}. \tag{2.4} \end{equation}\]

According to the Smarter Balanced Test Scoring Specifications (Smarter Balanced Assessment Consortium, 2020), estimates of measurement error are obtained from the parameter estimates of the items taken by the examinees. This is done by computing the test information for each examinee \(i\) as:

\[\begin{equation} \begin{split} I(\hat{\theta}_{i}) = \sum_{j=1}^{J}D^2a_{j}^2 \Bigg( & \frac{\sum_{l=1}^{m_{j}}l^2\exp\left(\sum_{k=1}^{l}Da_{j}(\hat{\theta}_{i}-b_{jk})\right)} {1+\sum_{l=1}^{m_{j}}\exp\left(\sum_{k=1}^{l}Da_{j}(\hat{\theta}_{i}-b_{jk})\right)} \\ & - \left(\frac{\sum_{l=1}^{m_{j}}l\exp\left(\sum_{k=1}^{l}Da_{j}(\hat{\theta}_{i}-b_{jk})\right)} {1+\sum_{l=1}^{m_{j}}\exp\left(\sum_{k=1}^{l}Da_{j}(\hat{\theta}_{i}-b_{jk})\right)}\right)^2 \Bigg) \end{split} \tag{2.5} \end{equation}\]

where \(J\) is the number of items answered by examinee \(i\) (the test information is computed using only those items), \(m_j\) is the maximum possible score point (starting from 0) for the \(j\)th item, and \(D\) is the scale factor, 1.7. Values of \(a_j\) and \(b_{jk}\) are item parameters for item \(j\) and score level \(k\). The standard error of measurement (SEM) for examinee \(i\) is then computed as:

\[\begin{equation} SEM(\hat{\theta_i}) = \frac{1}{\sqrt{I(\hat{\theta_i})}}. \tag{2.6} \end{equation}\]

The upper bound of \(SEM(\hat{\theta_i})\) is set to 2.5. Any value larger than 2.5 is truncated at 2.5. The mean squared error for a group of \(N\) examinees is then:

\[\begin{equation} MSE = N^{-1}\sum_{i=1}^N SEM(\hat{\theta_i})^2 \tag{2.7} \end{equation}\]

and the variance of the achievement scores is: \[\begin{equation} var(\hat{\theta}) = N^{-1}\sum_{i=1}^N (\hat{\theta}_i - \overline{\hat{\theta}})^2 \tag{2.8} \end{equation}\]

where \(\overline{\hat{\theta}}\) is the average of the \(\hat{\theta_i}\).

The measurement error for a group of examinees is typically reported as the square root of \(MSE\) and is denoted \(RMSE\). Measurement error is computed with equation (2.6) and equation (2.7) on a scale where achievement has a standard deviation close to 1 among students at a given grade. Measurement error reported in the tables of this section is transformed to the reporting scale by multiplying the theta-scale measurement error by \(a\), where \(a\) is the slope used to convert estimates of student achievement on the \(\theta\)-scale to the reporting scale. Squared quantities (MSE and score variance) are correspondingly multiplied by \(a^2\), so the ratio in equation (2.4), and hence the reliability estimate, is unchanged by the transformation. The transformation equations for converting estimates of student achievement on the \(\theta\)-scale to the reporting scale are given in Chapter 5.
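A minimal Python sketch of equations (2.4) through (2.8) on the \(\theta\)-scale follows. Representing each answered item as an `(a, steps)` pair, where `steps` holds \(b_{j1}, \dots, b_{jm_j}\), is an assumption of this sketch, and the item parameters in the usage lines are hypothetical rather than Smarter Balanced values. The SEM is truncated at 2.5 as described above.

```python
from math import exp, sqrt

D = 1.7  # scale factor in equation (2.5)

def item_information(theta, a, steps):
    """Information of one item at theta, following equation (2.5).

    a     -- slope parameter a_j
    steps -- step parameters [b_j1, ..., b_jm]; m_j = len(steps), so a
             dichotomous item has a single step
    """
    cum, nums = 0.0, []
    for b in steps:                          # score levels l = 1..m_j
        cum += D * a * (theta - b)           # sum_{k<=l} D a_j (theta - b_jk)
        nums.append(exp(cum))
    denom = 1.0 + sum(nums)                  # the "1 +" term is score level 0
    e1 = sum(l * x for l, x in enumerate(nums, start=1)) / denom      # E[score]
    e2 = sum(l * l * x for l, x in enumerate(nums, start=1)) / denom  # E[score^2]
    return D * D * a * a * (e2 - e1 * e1)

def sem(theta_hat, items, cap=2.5):
    """Equation (2.6), truncated at 2.5; items = [(a, steps), ...] answered."""
    info = sum(item_information(theta_hat, a, steps) for a, steps in items)
    return cap if info <= 0.0 else min(cap, 1.0 / sqrt(info))

def reliability(theta_hats, answered_items):
    """Equations (2.7), (2.8), and (2.4) for a group of N examinees."""
    n = len(theta_hats)
    mse = sum(sem(t, items) ** 2
              for t, items in zip(theta_hats, answered_items)) / n
    mean = sum(theta_hats) / n
    var = sum((t - mean) ** 2 for t in theta_hats) / n
    return 1.0 - mse / var

# Hypothetical 40-item test: 36 dichotomous items and 4 items scored 0-2.
demo_items = [(0.7, [b / 10]) for b in range(-18, 18)] + [(0.6, [-0.5, 0.5])] * 4
theta_hats = [-1.5, -0.5, 0.0, 0.4, 1.2]
print(round(sem(0.0, demo_items), 3),
      round(reliability(theta_hats, [demo_items] * len(theta_hats)), 3))
```

For a dichotomous item (one step), the bracketed term in equation (2.5) reduces to \(P(1-P)\), so the sketch reproduces the familiar 2PL information \(D^2a_j^2P(1-P)\); in a real CAT, each examinee's `answered_items` list would differ.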

2.3.1 General Population

Reliability estimates in this section are based on real data as described above. Table 2.4 and Table 2.5 show the reliability of the observed total scores and subscores for ELA/literacy and mathematics. Reliability estimates are very high for the total score in both subjects. Reliability coefficients are high for the claim 1 score in mathematics, moderately high for the claim 1 and claim 2 scores in ELA/literacy, and moderately high to moderate for the remainder of the claim scores in both subjects. The lowest reliability coefficient in either subject is .515, which is the reliability of the claim 3 score in the grade 7 ELA/literacy assessment.

Table 2.4: Reliability of Total and Claim Scores, ELA/Literacy

| Grade | N | Total score | Claim 1 | Claim 2 | Claim 3 | Claim 4 |
|---|---|---|---|---|---|---|
| 3 | 665,066 | 0.927 | 0.744 | 0.696 | 0.559 | 0.696 |
| 4 | 658,469 | 0.922 | 0.751 | 0.693 | 0.561 | 0.702 |
| 5 | 687,363 | 0.930 | 0.737 | 0.700 | 0.577 | 0.739 |
| 6 | 689,576 | 0.926 | 0.750 | 0.713 | 0.545 | 0.702 |
| 7 | 694,279 | 0.927 | 0.766 | 0.710 | 0.515 | 0.703 |
| 8 | 679,252 | 0.927 | 0.737 | 0.704 | 0.541 | 0.709 |
| HS | 616,690 | 0.926 | 0.757 | 0.712 | 0.529 | 0.685 |

Table 2.5: Reliability of Total and Claim Scores, Mathematics

| Grade | N | Total score | Claim 1 | Claim 2/4 | Claim 3 |
|---|---|---|---|---|---|
| 3 | 666,257 | 0.949 | 0.902 | 0.622 | 0.731 |
| 4 | 660,615 | 0.947 | 0.900 | 0.677 | 0.706 |
| 5 | 689,520 | 0.941 | 0.895 | 0.594 | 0.673 |
| 6 | 689,874 | 0.941 | 0.884 | 0.645 | 0.690 |
| 7 | 696,711 | 0.939 | 0.892 | 0.620 | 0.632 |
| 8 | 678,469 | 0.939 | 0.892 | 0.649 | 0.676 |
| HS | 634,358 | 0.925 | 0.882 | 0.567 | 0.585 |

2.3.2 Demographic Groups

Reliability estimates in this section are based on real data as described above. Table 2.6 and Table 2.7 show the reliability of the test for students of different racial groups in ELA/literacy and mathematics. Table 2.8 and Table 2.9 show the reliability of the test for students grouped by demographics typically requiring accommodations or accessibility tools.

Because of the differences in average score across demographic groups and the relationship between measurement error and student achievement scores, which will be seen in the next section of this chapter, demographic groups with lower average scores tend to have lower reliability than the population as a whole. Still, the reliability coefficients for nearly all demographic groups in these tables are moderately high (.80 to .90) to high (.90 or higher). The exceptions are the high school mathematics LEP (.771) and IDEA (.765) groups in Table 2.9.

Table 2.6: Reliability by Racial/Ethnic Group, ELA/Literacy

| Grade | Group | N | Var | MSE | Rho |
|---|---|---|---|---|---|
| 3 | All | 665,066 | 8346 | 613 | 0.927 |
|  | American Indian or Alaska Native | 9,312 | 7251 | 696 | 0.904 |
|  | Asian | 56,066 | 7931 | 591 | 0.925 |
|  | Black or African American | 37,743 | 7652 | 648 | 0.915 |
|  | Hispanic or Latino Ethnicity | 297,795 | 7411 | 614 | 0.917 |
|  | White | 226,623 | 7711 | 607 | 0.921 |
| 4 | All | 658,469 | 9358 | 726 | 0.922 |
|  | American Indian or Alaska Native | 9,743 | 8167 | 772 | 0.905 |
|  | Asian | 54,137 | 8740 | 728 | 0.917 |
|  | Black or African American | 37,869 | 8824 | 745 | 0.916 |
|  | Hispanic or Latino Ethnicity | 296,059 | 8401 | 725 | 0.914 |
|  | White | 226,229 | 8406 | 720 | 0.914 |
| 5 | All | 687,363 | 9701 | 682 | 0.930 |
|  | American Indian or Alaska Native | 10,468 | 8566 | 715 | 0.917 |
|  | Asian | 58,030 | 8946 | 721 | 0.919 |
|  | Black or African American | 39,610 | 8995 | 681 | 0.924 |
|  | Hispanic or Latino Ethnicity | 310,245 | 8624 | 662 | 0.923 |
|  | White | 233,446 | 8622 | 695 | 0.919 |
| 6 | All | 689,576 | 9426 | 701 | 0.926 |
|  | American Indian or Alaska Native | 10,792 | 8581 | 780 | 0.909 |
|  | Asian | 57,981 | 8300 | 698 | 0.916 |
|  | Black or African American | 39,198 | 8947 | 736 | 0.918 |
|  | Hispanic or Latino Ethnicity | 311,629 | 8376 | 690 | 0.918 |
|  | White | 235,363 | 8344 | 707 | 0.915 |
| 7 | All | 694,279 | 10640 | 773 | 0.927 |
|  | American Indian or Alaska Native | 10,670 | 9574 | 833 | 0.913 |
|  | Asian | 58,914 | 9105 | 784 | 0.914 |
|  | Black or African American | 38,824 | 10121 | 801 | 0.921 |
|  | Hispanic or Latino Ethnicity | 316,635 | 9613 | 762 | 0.921 |
|  | White | 233,937 | 9157 | 776 | 0.915 |
| 8 | All | 679,252 | 10699 | 778 | 0.927 |
|  | American Indian or Alaska Native | 10,493 | 9379 | 849 | 0.909 |
|  | Asian | 60,539 | 9438 | 790 | 0.916 |
|  | Black or African American | 38,167 | 10040 | 814 | 0.919 |
|  | Hispanic or Latino Ethnicity | 305,349 | 9448 | 768 | 0.919 |
|  | White | 231,459 | 9408 | 779 | 0.917 |
| HS | All | 616,690 | 13452 | 999 | 0.926 |
|  | American Indian or Alaska Native | 10,072 | 11464 | 1041 | 0.909 |
|  | Asian | 57,081 | 11833 | 990 | 0.916 |
|  | Black or African American | 31,066 | 13319 | 1063 | 0.920 |
|  | Hispanic or Latino Ethnicity | 272,850 | 12450 | 997 | 0.920 |
|  | White | 217,094 | 11683 | 981 | 0.916 |

Table 2.7: Reliability by Racial/Ethnic Group, Mathematics

| Grade | Group | N | Var | MSE | Rho |
|---|---|---|---|---|---|
| 3 | All | 666,257 | 7060 | 357 | 0.949 |
|  | American Indian or Alaska Native | 9,275 | 6135 | 403 | 0.934 |
|  | Asian | 56,332 | 6419 | 347 | 0.946 |
|  | Black or African American | 37,647 | 6500 | 392 | 0.940 |
|  | Hispanic or Latino Ethnicity | 299,131 | 6161 | 365 | 0.941 |
|  | White | 225,912 | 6414 | 342 | 0.947 |
| 4 | All | 660,615 | 7381 | 389 | 0.947 |
|  | American Indian or Alaska Native | 9,718 | 6329 | 440 | 0.931 |
|  | Asian | 54,527 | 6733 | 376 | 0.944 |
|  | Black or African American | 37,834 | 6821 | 450 | 0.934 |
|  | Hispanic or Latino Ethnicity | 297,418 | 6339 | 407 | 0.936 |
|  | White | 226,312 | 6566 | 360 | 0.945 |
| 5 | All | 689,520 | 9037 | 534 | 0.941 |
|  | American Indian or Alaska Native | 10,453 | 7714 | 641 | 0.917 |
|  | Asian | 58,444 | 8176 | 438 | 0.946 |
|  | Black or African American | 39,552 | 7932 | 658 | 0.917 |
|  | Hispanic or Latino Ethnicity | 311,353 | 7575 | 586 | 0.923 |
|  | White | 233,571 | 8087 | 471 | 0.942 |
| 6 | All | 689,874 | 11686 | 690 | 0.941 |
|  | American Indian or Alaska Native | 10,621 | 9825 | 833 | 0.915 |
|  | Asian | 58,054 | 10181 | 552 | 0.946 |
|  | Black or African American | 38,976 | 10870 | 905 | 0.917 |
|  | Hispanic or Latino Ethnicity | 312,465 | 10130 | 768 | 0.924 |
|  | White | 234,930 | 9932 | 585 | 0.941 |
| 7 | All | 696,711 | 13304 | 818 | 0.939 |
|  | American Indian or Alaska Native | 10,583 | 10796 | 957 | 0.911 |
|  | Asian | 59,232 | 12156 | 602 | 0.950 |
|  | Black or African American | 38,701 | 11141 | 1063 | 0.905 |
|  | Hispanic or Latino Ethnicity | 317,895 | 11121 | 939 | 0.916 |
|  | White | 235,035 | 11214 | 671 | 0.940 |
| 8 | All | 678,469 | 15541 | 941 | 0.939 |
|  | American Indian or Alaska Native | 10,410 | 11797 | 1070 | 0.909 |
|  | Asian | 60,226 | 14761 | 702 | 0.952 |
|  | Black or African American | 37,934 | 12413 | 1201 | 0.903 |
|  | Hispanic or Latino Ethnicity | 305,972 | 12735 | 1065 | 0.916 |
|  | White | 230,527 | 13396 | 800 | 0.940 |
| HS | All | 634,358 | 16282 | 1226 | 0.925 |
|  | American Indian or Alaska Native | 10,148 | 11398 | 1439 | 0.874 |
|  | Asian | 57,909 | 16924 | 804 | 0.952 |
|  | Black or African American | 31,575 | 12571 | 1618 | 0.871 |
|  | Hispanic or Latino Ethnicity | 277,302 | 12987 | 1404 | 0.892 |
|  | White | 226,582 | 14569 | 1064 | 0.927 |

Table 2.8: Reliability for Groups Typically Requiring Accommodations or Accessibility Tools, ELA/Literacy

| Grade | Group | N | Var | MSE | Rho |
|---|---|---|---|---|---|
| 3 | All | 665,066 | 8346 | 613 | 0.927 |
|  | LEP Status | 129,216 | 5703 | 670 | 0.883 |
|  | Section 504 Status | 8,064 | 7788 | 615 | 0.921 |
|  | Economic Disadvantage Status | 381,471 | 7380 | 623 | 0.916 |
|  | IDEA Indicator | 79,502 | 7897 | 732 | 0.907 |
| 4 | All | 658,469 | 9358 | 726 | 0.922 |
|  | LEP Status | 127,624 | 6232 | 769 | 0.877 |
|  | Section 504 Status | 10,031 | 8394 | 726 | 0.913 |
|  | Economic Disadvantage Status | 378,584 | 8429 | 730 | 0.913 |
|  | IDEA Indicator | 82,667 | 8958 | 831 | 0.907 |
| 5 | All | 687,363 | 9701 | 682 | 0.930 |
|  | LEP Status | 111,611 | 5708 | 701 | 0.877 |
|  | Section 504 Status | 12,350 | 8561 | 683 | 0.920 |
|  | Economic Disadvantage Status | 398,816 | 8721 | 670 | 0.923 |
|  | IDEA Indicator | 87,018 | 8717 | 761 | 0.913 |
| 6 | All | 689,576 | 9426 | 701 | 0.926 |
|  | LEP Status | 93,591 | 5493 | 784 | 0.857 |
|  | Section 504 Status | 14,239 | 7925 | 690 | 0.913 |
|  | Economic Disadvantage Status | 394,118 | 8520 | 704 | 0.917 |
|  | IDEA Indicator | 83,673 | 7899 | 845 | 0.893 |
| 7 | All | 694,279 | 10640 | 773 | 0.927 |
|  | LEP Status | 85,437 | 6124 | 869 | 0.858 |
|  | Section 504 Status | 15,153 | 9039 | 764 | 0.915 |
|  | Economic Disadvantage Status | 391,563 | 9723 | 774 | 0.920 |
|  | IDEA Indicator | 81,099 | 8448 | 908 | 0.892 |
| 8 | All | 679,252 | 10699 | 778 | 0.927 |
|  | LEP Status | 73,989 | 5466 | 895 | 0.836 |
|  | Section 504 Status | 16,145 | 9352 | 770 | 0.918 |
|  | Economic Disadvantage Status | 376,294 | 9610 | 781 | 0.919 |
|  | IDEA Indicator | 77,449 | 7732 | 930 | 0.880 |
| HS | All | 616,690 | 13452 | 999 | 0.926 |
|  | LEP Status | 52,303 | 7146 | 1247 | 0.825 |
|  | Section 504 Status | 18,062 | 11899 | 971 | 0.918 |
|  | Economic Disadvantage Status | 319,888 | 12699 | 1010 | 0.920 |
|  | IDEA Indicator | 59,337 | 10012 | 1215 | 0.879 |

Table 2.9: Reliability for Groups Typically Requiring Accommodations or Accessibility Tools, Mathematics

| Grade | Group | N | Var | MSE | Rho |
|---|---|---|---|---|---|
| 3 | All | 666,257 | 7060 | 357 | 0.949 |
|  | LEP Status | 131,725 | 5582 | 400 | 0.928 |
|  | Section 504 Status | 8,182 | 6467 | 355 | 0.945 |
|  | Economic Disadvantage Status | 382,862 | 6286 | 370 | 0.941 |
|  | IDEA Indicator | 79,304 | 8205 | 468 | 0.943 |
| 4 | All | 660,615 | 7381 | 389 | 0.947 |
|  | LEP Status | 130,192 | 5391 | 459 | 0.915 |
|  | Section 504 Status | 10,192 | 6469 | 373 | 0.942 |
|  | Economic Disadvantage Status | 380,032 | 6497 | 411 | 0.937 |
|  | IDEA Indicator | 82,427 | 8133 | 568 | 0.930 |
| 5 | All | 689,520 | 9037 | 534 | 0.941 |
|  | LEP Status | 113,909 | 5867 | 730 | 0.876 |
|  | Section 504 Status | 12,503 | 8035 | 507 | 0.937 |
|  | Economic Disadvantage Status | 400,218 | 7793 | 592 | 0.924 |
|  | IDEA Indicator | 86,828 | 8431 | 834 | 0.901 |
| 6 | All | 689,874 | 11686 | 690 | 0.941 |
|  | LEP Status | 95,399 | 8117 | 1083 | 0.867 |
|  | Section 504 Status | 14,250 | 9753 | 630 | 0.935 |
|  | Economic Disadvantage Status | 394,690 | 10380 | 782 | 0.925 |
|  | IDEA Indicator | 82,577 | 10660 | 1312 | 0.877 |
| 7 | All | 696,711 | 13304 | 818 | 0.939 |
|  | LEP Status | 87,499 | 8320 | 1334 | 0.840 |
|  | Section 504 Status | 15,270 | 11066 | 731 | 0.934 |
|  | Economic Disadvantage Status | 392,708 | 11363 | 944 | 0.917 |
|  | IDEA Indicator | 80,519 | 10217 | 1457 | 0.857 |
| 8 | All | 678,469 | 15541 | 941 | 0.939 |
|  | LEP Status | 75,672 | 8891 | 1476 | 0.834 |
|  | Section 504 Status | 16,170 | 13120 | 890 | 0.932 |
|  | Economic Disadvantage Status | 376,448 | 13070 | 1072 | 0.918 |
|  | IDEA Indicator | 76,639 | 10247 | 1548 | 0.849 |
| HS | All | 634,358 | 16282 | 1226 | 0.925 |
|  | LEP Status | 53,330 | 9435 | 2157 | 0.771 |
|  | Section 504 Status | 19,327 | 14088 | 1161 | 0.918 |
|  | Economic Disadvantage Status | 328,368 | 13504 | 1402 | 0.896 |
|  | IDEA Indicator | 58,855 | 9589 | 2251 | 0.765 |
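The Rho column of Tables 2.6 through 2.9 can be checked directly against equation (2.4). Because Var and MSE are both expressed in squared reporting-scale units (each is the \(\theta\)-scale quantity multiplied by \(a^2\)), the slope cancels in the ratio, so Rho should follow from the other two columns. A short Python check on a few rows, including the two lowest coefficients:

```python
def rho(var, mse):
    """Equation (2.4): reliability from score variance and mean squared error."""
    return 1.0 - mse / var

# (Var, MSE) pairs copied from Table 2.6 (ELA/literacy) and Table 2.9 (mathematics).
ROWS = {
    "ELA grade 3, All": (8346, 613),
    "ELA grade 3, American Indian or Alaska Native": (7251, 696),
    "Mathematics HS, LEP Status": (9435, 2157),
    "Mathematics HS, IDEA Indicator": (9589, 2251),
}

for label, (var, mse) in ROWS.items():
    print(f"{label}: rho = {rho(var, mse):.3f}")
```

Each printed value should agree with the tabled Rho to three decimals; rescaling both inputs by the same factor leaves the result unchanged.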

2.3.3 Paper/Pencil Tests

Smarter Balanced supports fixed-form paper/pencil tests for use in a variety of situations, including schools that lack computer capacity and cases where there are religious concerns about using technology for assessments. Scores on the paper/pencil tests are on the same reporting scale that is used for the online assessments. The forms used in the 2018-19 administration are collectively (for all grades) referred to as Form 4. Table 2.10 and Table 2.11 show, for ELA/literacy and mathematics respectively, statistical information pertaining to the items on Form 4 and to the measurement precision of this form at each grade within subject. MSE estimates for the paper/pencil forms were based on equation (2.5) through equation (2.7), except that quadrature points and weights over a hypothetical theta distribution were used instead of observed scores (\(\hat{\theta}_i\)). The hypothetical true score distribution used for quadrature was the student distribution from the 2014–2015 operational administration. Reliability was then computed as in equation (2.4), with the observed-score variance equal to the MSE plus the variance of the hypothetical true score distribution. Reliability was higher for the full test than for the claim subscores and was inversely related to the SEM.

Table 2.10: Form 4 (Paper/Pencil) Item Statistics and Measurement Precision, ELA/Literacy

| Grade | N Items | Rho | SEM | Avg. b | Avg. a | Claim 1 Rho | Claim 1 SEM | Claim 2 Rho | Claim 2 SEM | Claim 3 Rho | Claim 3 SEM | Claim 4 Rho | Claim 4 SEM |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 3 | 41 | 0.916 | 0.306 | -0.734 | 0.800 | 0.806 | 0.499 | 0.720 | 0.633 | 0.619 | 0.796 | 0.695 | 0.672 |
| 4 | 41 | 0.907 | 0.343 | -0.115 | 0.682 | 0.768 | 0.590 | 0.705 | 0.693 | 0.633 | 0.817 | 0.691 | 0.716 |
| 5 | 41 | 0.918 | 0.324 | 0.275 | 0.709 | 0.741 | 0.641 | 0.777 | 0.581 | 0.634 | 0.823 | 0.725 | 0.668 |
| 6 | 40 | 0.913 | 0.328 | 0.804 | 0.708 | 0.689 | 0.715 | 0.778 | 0.568 | 0.662 | 0.761 | 0.628 | 0.819 |
| 7 | 39 | 0.918 | 0.334 | 0.839 | 0.689 | 0.766 | 0.617 | 0.779 | 0.595 | 0.666 | 0.791 | 0.695 | 0.740 |
| 8 | 43 | 0.917 | 0.332 | 1.161 | 0.664 | 0.780 | 0.586 | 0.769 | 0.606 | 0.645 | 0.819 | 0.629 | 0.848 |
| 11 | 42 | 0.930 | 0.346 | 1.228 | 0.670 | 0.820 | 0.590 | 0.780 | 0.670 | 0.704 | 0.818 | 0.730 | 0.766 |

Table 2.11: Form 4 (Paper/Pencil) Item Statistics and Measurement Precision, Mathematics

| Grade | N Items | Rho | SEM | Avg. b | Avg. a | Claim 1 Rho | Claim 1 SEM | Claim 2/4 Rho | Claim 2/4 SEM | Claim 3 Rho | Claim 3 SEM |
|---|---|---|---|---|---|---|---|---|---|---|---|
| 3 | 40 | 0.925 | 0.284 | -0.900 | 0.898 | 0.842 | 0.433 | 0.594 | 0.826 | 0.747 | 0.582 |
| 4 | 40 | 0.924 | 0.292 | -0.282 | 0.876 | 0.848 | 0.431 | 0.570 | 0.886 | 0.747 | 0.592 |
| 5 | 39 | 0.914 | 0.345 | 0.166 | 0.843 | 0.846 | 0.479 | 0.453 | 1.237 | 0.699 | 0.738 |
| 6 | 39 | 0.908 | 0.406 | 0.659 | 0.788 | 0.816 | 0.606 | 0.645 | 0.945 | 0.643 | 0.949 |
| 7 | 40 | 0.899 | 0.455 | 1.131 | 0.713 | 0.812 | 0.653 | 0.564 | 1.193 | 0.692 | 0.906 |
| 8 | 39 | 0.907 | 0.465 | 1.267 | 0.646 | 0.848 | 0.614 | 0.440 | 1.637 | 0.647 | 1.071 |
| 11 | 41 | 0.914 | 0.478 | 0.949 | 0.588 | 0.851 | 0.651 | 0.460 | 1.688 | 0.760 | 0.875 |

2.4 Classification Accuracy

Information on classification accuracy is based on actual test results from the 2018-19 administration. Classification accuracy is a measure of how accurately test scores or subscores place students into reporting category levels. The likelihood of inaccurate placement depends on the amount of measurement error associated with scores, especially those nearest cut points, and on the distribution of student achievement. For this report, classification accuracy was calculated in the following manner. For each examinee, analysts used the estimated scale score and its standard error of measurement to obtain a normal approximation of the likelihood function over the range of scale scores. The normal approximation took the scale score estimate as its mean and the standard error of measurement as its standard deviation. The proportion of the area under the curve within each level was then calculated.

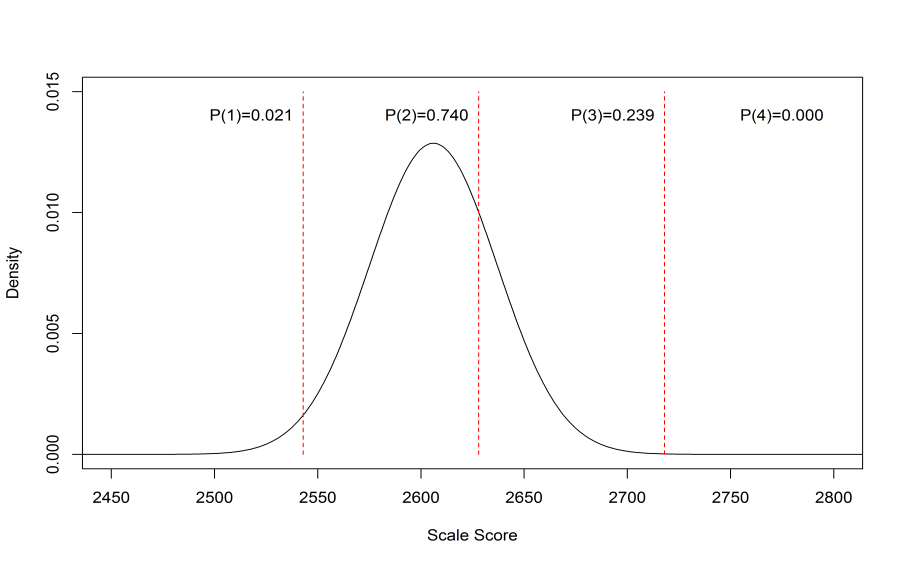

Figure 2.1 illustrates the approach for one examinee in grade 11 mathematics. In this example, the examinee’s overall scale score is 2606 (placing this student in level 2, based on the cut scores for this grade level), with a standard error of measurement of 31 points. Accordingly, a normal distribution with a mean of 2606 and a standard deviation of 31 was used to approximate the likelihood of the examinee’s true level, based on the observed test performance. The area under the curve was computed within each score range in order to estimate the probability that the examinee’s true score falls within that level (the red vertical lines identify the cut scores). For the student in Figure 2.1, the estimated probabilities were 2.1% for level 1, 74.0% for level 2, 23.9% for level 3, and 0.0% for level 4. Since the student’s assigned level was level 2, there is an estimated 74% chance the student was correctly classified and a 26% (2.1% + 23.9% + 0.0%) chance the student was misclassified.

Figure 2.1: Illustrative Example of a Normal Distribution Used to Calculate Classification Accuracy

The same procedure was then applied to all students within the sample. Results are shown for 10 cases in Table 2.12 (student 6 is the case illustrated in Figure 2.1).

Table 2.12: Classification Probabilities for Example Students

| Student | Scale Score | SEM | Assigned Level | P(L1) | P(L2) | P(L3) | P(L4) |
|---|---|---|---|---|---|---|---|
| 1 | 2751 | 23 | 4 | 0 | 0 | 0.076 | 0.924 |
| 2 | 2375 | 66 | 1 | 0.995 | 0.005 | 0 | 0 |
| 3 | 2482 | 42 | 1 | 0.927 | 0.073 | 0 | 0 |
| 4 | 2529 | 37 | 1 | 0.647 | 0.349 | 0.004 | 0 |
| 5 | 2524 | 36 | 1 | 0.701 | 0.297 | 0.002 | 0 |
| 6 | 2606 | 31 | 2 | 0.021 | 0.74 | 0.239 | 0 |
| 7 | 2474 | 42 | 1 | 0.95 | 0.05 | 0 | 0 |
| 8 | 2657 | 26 | 3 | 0 | 0.132 | 0.858 | 0.009 |
| 9 | 2600 | 31 | 2 | 0.033 | 0.784 | 0.183 | 0 |
| 10 | 2672 | 23 | 3 | 0 | 0.028 | 0.949 | 0.023 |
| … | … | … | … | … | … | … | … |
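The per-student computation behind Figure 2.1 and Table 2.12 can be sketched with the Python standard library's `statistics.NormalDist`. The cut scores are not given in this section; the values 2543, 2628, and 2718 used below are an assumption, chosen because they reproduce the probabilities reported for students 1, 6, and 9, and they should be checked against the official grade 11 mathematics thresholds before reuse.

```python
from statistics import NormalDist

# ASSUMPTION: grade 11 mathematics cut scores for levels 2, 3, and 4. These
# values reproduce the Figure 2.1 / Table 2.12 probabilities but are not
# stated in this section.
CUTS = (2543, 2628, 2718)

def level_probabilities(scale_score, sem, cuts=CUTS):
    """P(true level = k), k = 1..len(cuts) + 1, under N(scale_score, sem**2)."""
    dist = NormalDist(mu=scale_score, sigma=sem)
    cdf = [0.0] + [dist.cdf(c) for c in cuts] + [1.0]
    # Area between consecutive cut scores = probability of each level.
    return [upper - lower for lower, upper in zip(cdf, cdf[1:])]

def observed_level(scale_score, cuts=CUTS):
    """Level assigned from the point estimate; a score at a cut goes to the higher level."""
    return 1 + sum(scale_score >= c for c in cuts)

for ss, se in [(2751, 23), (2606, 31), (2600, 31)]:  # students 1, 6, and 9
    probs = level_probabilities(ss, se)
    level = observed_level(ss)
    print(ss, level, [round(p, 3) for p in probs],
          "P(correct) =", round(probs[level - 1], 3))
```

For student 6 this yields approximately 0.021, 0.740, 0.239, and 0.000, so the probability of correct classification is the entry for the assigned level (about .74) and the misclassification probability is its complement.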

Table 2.13 presents a hypothetical set of results for the overall score and for a claim score (claim 3) for a population of students. The number (N) and proportion (P) of students classified into each achievement level are shown in the Observed Level, N, and P columns. These are counts and proportions of “observed” classifications in the population. Students are classified into the four achievement levels by their overall score. By claim scores, students are classified as “below,” “near,” or “above” standard, where the standard is the level 3 cut score. Rules for classifying students by their claim scores are detailed in Chapter 7.

The next four columns (“Freq L1,” etc.) show the number of students by “true level” among students at a given “observed level.” The last four columns convert the frequencies by true level into proportions. The sum of proportions in the last four columns of the “Overall” section of the table equals 1.0. Likewise, the sum of proportions in the last four columns of the “Claim 3” section of the table equals 1.0. For the overall test, the proportions of correct classifications for this hypothetical example are .404, .180, .145, and .098 for levels 1 through 4, respectively.

Table 2.13: Hypothetical Classification Results for the Overall Score and Claim 3

| Score | Observed Level | N | P | Freq L1 | Freq L2 | Freq L3 | Freq L4 | Prop L1 | Prop L2 | Prop L3 | Prop L4 |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Overall | Level 1 | 251,896 | 0.451 | 225,454 | 26,172 | 263 | 8 | 0.404 | 0.047 | 0.000 | 0.000 |
|  | Level 2 | 141,256 | 0.253 | 21,800 | 100,364 | 19,080 | 11 | 0.039 | 0.180 | 0.034 | 0.000 |
|  | Level 3 | 104,125 | 0.186 | 161 | 14,223 | 81,089 | 8,652 | 0.000 | 0.025 | 0.145 | 0.015 |
|  | Level 4 | 61,276 | 0.110 | 47 | 29 | 6,452 | 54,748 | 0.000 | 0.000 | 0.012 | 0.098 |
| Claim 3 | Below Standard | 167,810 | 0.300 | 143,536 | 18,323 | 4,961 | 990 | 0.257 | 0.033 | 0.009 | 0.002 |
|  | Near Standard | 309,550 | 0.554 | 93,364 | 102,133 | 89,696 | 24,357 | 0.167 | 0.183 | 0.161 | 0.044 |
|  | Above Standard | 81,193 | 0.145 | 94 | 1,214 | 18,949 | 60,936 | 0.000 | 0.002 | 0.034 | 0.109 |
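The conversion from frequencies to proportions, and the diagonal cells that count as correct classifications for the overall score, can be sketched in Python. This is a minimal illustration using the hypothetical counts in Table 2.13; the names `OVERALL_FREQ`, `joint_proportions`, and `correct_proportions` are hypothetical and not part of any Smarter Balanced software.

```python
# Hypothetical counts from the "Overall" section of Table 2.13:
# rows = observed level 1..4, columns = true level 1..4 ("Freq L1".."Freq L4").
OVERALL_FREQ = [
    [225_454, 26_172, 263, 8],
    [21_800, 100_364, 19_080, 11],
    [161, 14_223, 81_089, 8_652],
    [47, 29, 6_452, 54_748],
]


def joint_proportions(freq):
    """Divide every cell by the grand total, so the whole section sums to 1."""
    total = sum(sum(row) for row in freq)
    return [[cell / total for cell in row] for row in freq]


def correct_proportions(freq):
    """Diagonal of the joint table: cells where observed level == true level."""
    props = joint_proportions(freq)
    return [props[i][i] for i in range(len(props))]


if __name__ == "__main__":
    props = joint_proportions(OVERALL_FREQ)
    print(round(sum(sum(row) for row in props), 6))
    print([round(p, 3) for p in correct_proportions(OVERALL_FREQ)])
```

Rounded to three decimals, the diagonal reproduces the proportions .404, .180, .145, and .098 cited above.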

For claim scores, correct “below” classifications are represented in cells corresponding to the “below standard” row and the levels 1 and 2 columns. Both levels 1 and 2 are below the level 3 cut score, which is the standard. Similarly, correct “above” standard classifications are represented in cells corresponding to the “above standard” row and the levels 3 and 4 columns. Correct classifications for “near” standard are not computed. There is no absolute criterion or scale score range, such as is defined by cut scores, for determining whether a student is truly at or near the standard. That is, the standard (level 3 cut score) clearly defines whether a student is above or below standard, but there is no range centered on the standard for determining whether a student is “near.”

Table 2.14 shows in more detail how the proportion of correct classifications is computed for classifications based on students' overall and claim scores. For each type of score (overall and claim), the proportion of correct classifications is computed overall and conditionally on each observed classification (except for the "near standard" claim score classification). The conditional proportion correct is the proportion correct within a row divided by the total proportion in that row. For the overall score, the overall proportion correct is the sum of the proportions correct within the overall section of the table. The accuracy values were computed from unrounded proportions, so ratios recomputed from the rounded values shown may differ slightly in the third decimal place (for example, .290/.300 = .967 rather than the tabled .965).

Table 2.14: Computation of Classification Accuracy for the Hypothetical Example

| Score | Observed Level | P | Prop L1 | Prop L2 | Prop L3 | Prop L4 | Accuracy by Level | Accuracy Overall |
|---|---|---|---|---|---|---|---|---|
| Overall | Level 1 | 0.451 | 0.404 | 0.047 | 0.000 | 0.000 | .404/.451=.895 | (.404+.180+.145+.098)/1.000=.827 |
|  | Level 2 | 0.253 | 0.039 | 0.180 | 0.034 | 0.000 | .180/.253=.711 |  |
|  | Level 3 | 0.186 | 0.000 | 0.025 | 0.145 | 0.015 | .145/.186=.779 |  |
|  | Level 4 | 0.110 | 0.000 | 0.000 | 0.012 | 0.098 | .098/.110=.893 |  |
| Claim 3 | Below Standard | 0.300 | 0.257 | 0.033 | 0.009 | 0.002 | (.257+.033)/.300=.965 | (.257+.033+.034+.109)/(.300+.145)=.971 |
|  | Near Standard | 0.554 | 0.167 | 0.183 | 0.161 | 0.044 | NA |  |
|  | Above Standard | 0.145 | 0.000 | 0.002 | 0.034 | 0.109 | (.034+.109)/.145=.984 |  |

For the claim score, the overall classification accuracy rate is based only on students whose observed achievement is “below standard” or “above standard.” That is, the overall proportion correct for classifications by claim scores is the sum of the proportions correct in the claim section of the table, divided by the sum of all of the proportions in the “above standard” and “below standard” rows.
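Both accuracy rules can be expressed as one function that takes, for each observed row, the set of true levels counted as correct: the matching level for overall scores, levels 1 and 2 for "below standard," and levels 3 and 4 for "above standard." The sketch below is a hypothetical illustration using the counts from Table 2.13, not Smarter Balanced's production code. It divides counts rather than rounded proportions, which reproduces the tabled values (.827 overall and .971 for claim 3).

```python
from typing import Dict, List, Set, Tuple

# Hypothetical counts from Table 2.13: observed row -> counts at true levels 1-4.
OVERALL: Dict[str, List[int]] = {
    "Level 1": [225_454, 26_172, 263, 8],
    "Level 2": [21_800, 100_364, 19_080, 11],
    "Level 3": [161, 14_223, 81_089, 8_652],
    "Level 4": [47, 29, 6_452, 54_748],
}
CLAIM3: Dict[str, List[int]] = {
    "Below": [143_536, 18_323, 4_961, 990],
    "Near": [93_364, 102_133, 89_696, 24_357],
    "Above": [94, 1_214, 18_949, 60_936],
}

# True-level columns (0-based) that count as correct for each observed row.
OVERALL_CORRECT: Dict[str, Set[int]] = {
    "Level 1": {0}, "Level 2": {1}, "Level 3": {2}, "Level 4": {3},
}
# "Near" has no defined correct range, so it is omitted here and is
# therefore excluded from both the by-row and the overall accuracy.
CLAIM_CORRECT: Dict[str, Set[int]] = {"Below": {0, 1}, "Above": {2, 3}}


def classification_accuracy(
    table: Dict[str, List[int]], correct: Dict[str, Set[int]]
) -> Tuple[Dict[str, float], float]:
    """Return (accuracy by observed row, overall accuracy) for rows in `correct`."""
    by_row: Dict[str, float] = {}
    hits_total = 0
    n_total = 0
    for row, cols in correct.items():
        counts = table[row]
        hits = sum(counts[c] for c in cols)  # correctly classified students
        n = sum(counts)  # all students observed in this row
        by_row[row] = hits / n
        hits_total += hits
        n_total += n
    return by_row, hits_total / n_total


if __name__ == "__main__":
    for name, table, rule in [("Overall", OVERALL, OVERALL_CORRECT),
                              ("Claim 3", CLAIM3, CLAIM_CORRECT)]:
        by_row, overall = classification_accuracy(table, rule)
        print(name, {k: round(v, 3) for k, v in by_row.items()}, round(overall, 3))
```

Leaving "Near" out of the rule dictionary is what implements the exclusion described above: those students contribute to neither the numerator nor the denominator.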

The following two sections show classification accuracy statistics for ELA/literacy and mathematics. There are seven tables in each section, one for each of grades 3 to 8 and high school (HS). The statistics in these tables were computed as described above.

2.4.1 English Language Arts/Literacy

Results in this section are based on real data. Table 2.15 through Table 2.21 show ELA/literacy classification accuracy for grades 3 to 8 and high school (HS). The "True L1" through "True L4" columns are proportions, corresponding to the "Prop L1" through "Prop L4" columns of Table 2.13. See the previous section, "Classification Accuracy," for an explanation of how the statistics in these tables were computed.

Table 2.15: Classification Accuracy, ELA/Literacy Grade 3

| Score | Observed Level | N | P | True L1 | True L2 | True L3 | True L4 | Accuracy by Level | Accuracy Overall |
|---|---|---|---|---|---|---|---|---|---|
| Overall | Level 1 | 181,009 | 0.272 | 0.243 | 0.029 | 0 | 0 | 0.893 | 0.804 |
|  | Level 2 | 156,391 | 0.235 | 0.032 | 0.17 | 0.034 | 0 | 0.721 |  |
|  | Level 3 | 151,063 | 0.227 | 0 | 0.037 | 0.158 | 0.032 | 0.694 |  |
|  | Level 4 | 176,603 | 0.266 | 0 | 0 | 0.031 | 0.234 | 0.883 |  |
| Claim 1 | Below | 180,762 | 0.272 | 0.214 | 0.053 | 0.005 | 0 | 0.979 | 0.981 |
|  | Near | 301,827 | 0.455 | 0.058 | 0.175 | 0.159 | 0.062 |  |  |
|  | Above | 181,175 | 0.273 | 0 | 0.005 | 0.041 | 0.227 | 0.983 |  |
| Claim 2 | Below | 195,674 | 0.295 | 0.249 | 0.04 | 0.005 | 0.001 | 0.979 | 0.978 |
|  | Near | 329,126 | 0.496 | 0.087 | 0.169 | 0.151 | 0.088 |  |  |
|  | Above | 138,964 | 0.209 | 0 | 0.004 | 0.028 | 0.177 | 0.977 |  |
| Claim 3 | Below | 103,706 | 0.156 | 0.134 | 0.015 | 0.005 | 0.002 | 0.957 | 0.964 |
|  | Near | 410,130 | 0.618 | 0.145 | 0.166 | 0.157 | 0.149 |  |  |
|  | Above | 149,928 | 0.226 | 0.001 | 0.006 | 0.024 | 0.195 | 0.97 |  |
| Claim 4 | Below | 179,663 | 0.271 | 0.233 | 0.032 | 0.005 | 0.001 | 0.98 | 0.981 |
|  | Near | 324,155 | 0.488 | 0.096 | 0.155 | 0.144 | 0.094 |  |  |
|  | Above | 159,946 | 0.241 | 0 | 0.004 | 0.029 | 0.208 | 0.982 |  |
| All Students |  | 665,066 | 1.000 |  |  |  |  |  |  |

Table 2.16: Classification Accuracy, ELA/Literacy Grade 4

| Score | Observed Level | N | P | True L1 | True L2 | True L3 | True L4 | Accuracy by Level | Accuracy Overall |
|---|---|---|---|---|---|---|---|---|---|
| Overall | Level 1 | 198,651 | 0.302 | 0.272 | 0.03 | 0 | 0 | 0.901 | 0.791 |
|  | Level 2 | 127,847 | 0.194 | 0.034 | 0.125 | 0.035 | 0 | 0.642 |  |
|  | Level 3 | 152,792 | 0.232 | 0 | 0.039 | 0.156 | 0.037 | 0.67 |  |
|  | Level 4 | 179,179 | 0.272 | 0 | 0 | 0.033 | 0.239 | 0.877 |  |
| Claim 1 | Below | 182,118 | 0.277 | 0.239 | 0.033 | 0.004 | 0 | 0.983 | 0.983 |
|  | Near | 301,427 | 0.459 | 0.078 | 0.149 | 0.161 | 0.07 |  |  |
|  | Above | 173,823 | 0.264 | 0 | 0.004 | 0.041 | 0.219 | 0.984 |  |
| Claim 2 | Below | 179,734 | 0.273 | 0.242 | 0.026 | 0.005 | 0.001 | 0.979 | 0.975 |
|  | Near | 333,708 | 0.508 | 0.105 | 0.143 | 0.154 | 0.107 |  |  |
|  | Above | 143,926 | 0.219 | 0.001 | 0.006 | 0.027 | 0.185 | 0.97 |  |
| Claim 3 | Below | 110,894 | 0.169 | 0.151 | 0.013 | 0.004 | 0.001 | 0.97 | 0.966 |
|  | Near | 404,785 | 0.616 | 0.165 | 0.137 | 0.148 | 0.166 |  |  |
|  | Above | 141,689 | 0.216 | 0.002 | 0.006 | 0.021 | 0.187 | 0.963 |  |
| Claim 4 | Below | 176,480 | 0.268 | 0.243 | 0.021 | 0.004 | 0.001 | 0.983 | 0.981 |
|  | Near | 327,873 | 0.499 | 0.119 | 0.131 | 0.141 | 0.107 |  |  |
|  | Above | 153,015 | 0.233 | 0.001 | 0.004 | 0.027 | 0.201 | 0.979 |  |
| All Students |  | 658,469 | 1.000 |  |  |  |  |  |  |

Table 2.17: Classification Accuracy, ELA/Literacy Grade 5

| Score | Observed Level | N | P | True L1 | True L2 | True L3 | True L4 | Accuracy by Level | Accuracy Overall |
|---|---|---|---|---|---|---|---|---|---|
| Overall | Level 1 | 185,701 | 0.270 | 0.244 | 0.026 | 0 | 0 | 0.904 | 0.806 |
|  | Level 2 | 135,696 | 0.197 | 0.03 | 0.135 | 0.032 | 0 | 0.682 |  |
|  | Level 3 | 201,050 | 0.292 | 0 | 0.036 | 0.221 | 0.035 | 0.757 |  |
|  | Level 4 | 164,916 | 0.240 | 0 | 0 | 0.034 | 0.206 | 0.86 |  |
| Claim 1 | Below | 181,722 | 0.265 | 0.221 | 0.039 | 0.005 | 0 | 0.982 | 0.98 |
|  | Near | 306,887 | 0.447 | 0.06 | 0.151 | 0.192 | 0.044 |  |  |
|  | Above | 197,932 | 0.288 | 0 | 0.006 | 0.069 | 0.213 | 0.979 |  |
| Claim 2 | Below | 168,316 | 0.245 | 0.216 | 0.025 | 0.004 | 0 | 0.984 | 0.978 |
|  | Near | 334,275 | 0.487 | 0.085 | 0.139 | 0.185 | 0.077 |  |  |
|  | Above | 183,950 | 0.268 | 0.001 | 0.006 | 0.05 | 0.21 | 0.973 |  |
| Claim 3 | Below | 138,612 | 0.202 | 0.18 | 0.017 | 0.004 | 0.001 | 0.974 | 0.97 |
|  | Near | 414,532 | 0.604 | 0.156 | 0.141 | 0.182 | 0.125 |  |  |
|  | Above | 133,397 | 0.194 | 0.002 | 0.005 | 0.029 | 0.159 | 0.965 |  |
| Claim 4 | Below | 176,000 | 0.256 | 0.225 | 0.027 | 0.004 | 0 | 0.983 | 0.982 |
|  | Near | 316,734 | 0.461 | 0.087 | 0.14 | 0.179 | 0.055 |  |  |
|  | Above | 193,807 | 0.282 | 0.001 | 0.005 | 0.06 | 0.217 | 0.981 |  |
| All Students |  | 687,363 | 1.000 |  |  |  |  |  |  |

Table 2.18: Classification Accuracy, ELA/Literacy Grade 6

| Score | Observed Level | N | P | True L1 | True L2 | True L3 | True L4 | Accuracy by Level | Accuracy Overall |
|---|---|---|---|---|---|---|---|---|---|
| Overall | Level 1 | 171,712 | 0.249 | 0.223 | 0.026 | 0 | 0 | 0.895 | 0.807 |
|  | Level 2 | 173,959 | 0.252 | 0.031 | 0.186 | 0.035 | 0 | 0.738 |  |
|  | Level 3 | 222,093 | 0.322 | 0 | 0.039 | 0.25 | 0.033 | 0.776 |  |
|  | Level 4 | 121,812 | 0.177 | 0 | 0 | 0.028 | 0.149 | 0.842 |  |
| Claim 1 | Below | 214,670 | 0.312 | 0.253 | 0.054 | 0.005 | 0 | 0.983 | 0.983 |
|  | Near | 308,591 | 0.449 | 0.054 | 0.171 | 0.189 | 0.035 |  |  |
|  | Above | 164,735 | 0.239 | 0 | 0.004 | 0.066 | 0.169 | 0.983 |  |
| Claim 2 | Below | 188,954 | 0.275 | 0.218 | 0.052 | 0.005 | 0 | 0.982 | 0.979 |
|  | Near | 363,111 | 0.528 | 0.053 | 0.194 | 0.224 | 0.057 |  |  |
|  | Above | 135,931 | 0.198 | 0 | 0.005 | 0.052 | 0.141 | 0.975 |  |
| Claim 3 | Below | 132,320 | 0.192 | 0.166 | 0.021 | 0.004 | 0.001 | 0.975 | 0.96 |
|  | Near | 435,894 | 0.634 | 0.12 | 0.175 | 0.205 | 0.133 |  |  |
|  | Above | 119,782 | 0.174 | 0.003 | 0.007 | 0.027 | 0.137 | 0.943 |  |
| Claim 4 | Below | 156,579 | 0.228 | 0.196 | 0.027 | 0.004 | 0 | 0.982 | 0.981 |
|  | Near | 342,591 | 0.498 | 0.087 | 0.154 | 0.189 | 0.068 |  |  |
|  | Above | 188,826 | 0.274 | 0 | 0.005 | 0.065 | 0.204 | 0.979 |  |
| All Students |  | 689,576 | 1.000 |  |  |  |  |  |  |

Table 2.19: Classification Accuracy, ELA/Literacy Grade 7

| Score | Observed Level | N | P | True L1 | True L2 | True L3 | True L4 | Accuracy by Level | Accuracy Overall |
|---|---|---|---|---|---|---|---|---|---|
| Overall | Level 1 | 173,096 | 0.249 | 0.225 | 0.025 | 0 | 0 | 0.901 | 0.813 |
|  | Level 2 | 154,165 | 0.222 | 0.029 | 0.16 | 0.034 | 0 | 0.719 |  |
|  | Level 3 | 240,281 | 0.346 | 0 | 0.039 | 0.275 | 0.032 | 0.793 |  |
|  | Level 4 | 126,737 | 0.183 | 0 | 0 | 0.028 | 0.154 | 0.844 |  |
| Claim 1 | Below | 213,243 | 0.308 | 0.249 | 0.054 | 0.005 | 0 | 0.984 | 0.983 |
|  | Near | 307,401 | 0.444 | 0.047 | 0.169 | 0.2 | 0.028 |  |  |
|  | Above | 171,646 | 0.248 | 0 | 0.004 | 0.074 | 0.169 | 0.982 |  |
| Claim 2 | Below | 157,253 | 0.227 | 0.19 | 0.032 | 0.005 | 0 | 0.978 | 0.977 |
|  | Near | 337,089 | 0.487 | 0.057 | 0.164 | 0.221 | 0.044 |  |  |
|  | Above | 197,948 | 0.286 | 0.001 | 0.006 | 0.087 | 0.192 | 0.976 |  |
| Claim 3 | Below | 139,399 | 0.201 | 0.175 | 0.021 | 0.005 | 0.001 | 0.975 | 0.964 |
|  | Near | 451,204 | 0.652 | 0.14 | 0.168 | 0.213 | 0.131 |  |  |
|  | Above | 101,687 | 0.147 | 0.002 | 0.006 | 0.025 | 0.114 | 0.948 |  |
| Claim 4 | Below | 159,538 | 0.230 | 0.205 | 0.022 | 0.003 | 0 | 0.983 | 0.981 |
|  | Near | 334,307 | 0.483 | 0.089 | 0.139 | 0.196 | 0.059 |  |  |
|  | Above | 198,445 | 0.287 | 0.001 | 0.006 | 0.077 | 0.204 | 0.979 |  |
| All Students |  | 694,279 | 1.000 |  |  |  |  |  |  |

Table 2.20: Classification Accuracy, ELA/Literacy Grade 8

| Score | Observed Level | N | P | True L1 | True L2 | True L3 | True L4 | Accuracy by Level | Accuracy Overall |
|---|---|---|---|---|---|---|---|---|---|
| Overall | Level 1 | 165,438 | 0.244 | 0.217 | 0.027 | 0 | 0 | 0.89 | 0.814 |
|  | Level 2 | 168,637 | 0.248 | 0.031 | 0.184 | 0.033 | 0 | 0.741 |  |
|  | Level 3 | 228,936 | 0.337 | 0 | 0.036 | 0.27 | 0.032 | 0.8 |  |
|  | Level 4 | 116,241 | 0.171 | 0 | 0 | 0.028 | 0.143 | 0.837 |  |
| Claim 1 | Below | 200,889 | 0.297 | 0.235 | 0.055 | 0.006 | 0 | 0.979 | 0.982 |
|  | Near | 297,688 | 0.440 | 0.05 | 0.172 | 0.195 | 0.022 |  |  |
|  | Above | 178,651 | 0.264 | 0 | 0.004 | 0.09 | 0.169 | 0.984 |  |
| Claim 2 | Below | 163,228 | 0.241 | 0.194 | 0.042 | 0.004 | 0 | 0.981 | 0.978 |
|  | Near | 347,872 | 0.514 | 0.057 | 0.182 | 0.223 | 0.051 |  |  |
|  | Above | 166,128 | 0.245 | 0 | 0.005 | 0.067 | 0.173 | 0.976 |  |
| Claim 3 | Below | 128,884 | 0.190 | 0.159 | 0.025 | 0.005 | 0.001 | 0.97 | 0.964 |
|  | Near | 430,407 | 0.636 | 0.116 | 0.187 | 0.227 | 0.105 |  |  |
|  | Above | 117,937 | 0.174 | 0.002 | 0.006 | 0.035 | 0.132 | 0.957 |  |
| Claim 4 | Below | 168,303 | 0.249 | 0.208 | 0.036 | 0.005 | 0 | 0.98 | 0.98 |
|  | Near | 325,857 | 0.481 | 0.076 | 0.161 | 0.2 | 0.043 |  |  |
|  | Above | 183,068 | 0.270 | 0 | 0.005 | 0.08 | 0.185 | 0.98 |  |
| All Students |  | 679,252 | 1.000 |  |  |  |  |  |  |

Table 2.21: Classification Accuracy, ELA/Literacy High School

| Score | Observed Level | N | P | True L1 | True L2 | True L3 | True L4 | Accuracy by Level | Accuracy Overall |
|---|---|---|---|---|---|---|---|---|---|
| Overall | Level 1 | 124,249 | 0.200 | 0.159 | 0.025 | 0.008 | 0.007 | 0.799 | 0.736 |
|  | Level 2 | 129,611 | 0.208 | 0.027 | 0.138 | 0.035 | 0.008 | 0.662 |  |
|  | Level 3 | 194,592 | 0.313 | 0.009 | 0.041 | 0.22 | 0.043 | 0.703 |  |
|  | Level 4 | 173,770 | 0.279 | 0.007 | 0.009 | 0.045 | 0.219 | 0.785 |  |
| Claim 1 | Below | 153,051 | 0.247 | 0.174 | 0.051 | 0.013 | 0.009 | 0.911 | 0.917 |
|  | Near | 263,820 | 0.426 | 0.049 | 0.158 | 0.174 | 0.045 |  |  |
|  | Above | 202,687 | 0.327 | 0.01 | 0.015 | 0.089 | 0.212 | 0.921 |  |
| Claim 2 | Below | 118,166 | 0.191 | 0.142 | 0.031 | 0.01 | 0.008 | 0.904 | 0.922 |
|  | Near | 274,595 | 0.443 | 0.057 | 0.144 | 0.176 | 0.066 |  |  |
|  | Above | 226,797 | 0.366 | 0.01 | 0.016 | 0.083 | 0.258 | 0.931 |  |
| Claim 3 | Below | 93,195 | 0.150 | 0.114 | 0.021 | 0.009 | 0.007 | 0.897 | 0.901 |
|  | Near | 375,353 | 0.606 | 0.126 | 0.164 | 0.188 | 0.128 |  |  |
|  | Above | 151,010 | 0.244 | 0.01 | 0.014 | 0.045 | 0.175 | 0.904 |  |
| Claim 4 | Below | 123,059 | 0.199 | 0.147 | 0.031 | 0.011 | 0.009 | 0.9 | 0.915 |
|  | Near | 292,740 | 0.472 | 0.077 | 0.143 | 0.175 | 0.078 |  |  |
|  | Above | 203,759 | 0.329 | 0.011 | 0.014 | 0.074 | 0.23 | 0.924 |  |
| All Students |  | 622,222 | 1.000 |  |  |  |  |  |  |

2.4.2 Mathematics

Results in this section are based on real data. Table 2.22 through Table 2.28 show the classification accuracy of the mathematics assessment for grades 3 to 8 and high school (HS). Mathematics claims 2 and 4 are reported as a single claim score ("Claim 2/4"). See the previous section, "Classification Accuracy," for an explanation of how the statistics in these tables were computed.

Table 2.22: Classification Accuracy, Mathematics Grade 3

| Score | Observed Level | N | P | True L1 | True L2 | True L3 | True L4 | Accuracy by Level | Accuracy Overall |
|---|---|---|---|---|---|---|---|---|---|
| Overall | Level 1 | 173,603 | 0.261 | 0.235 | 0.026 | 0 | 0 | 0.902 | 0.836 |
|  | Level 2 | 152,860 | 0.229 | 0.029 | 0.171 | 0.03 | 0 | 0.744 |  |
|  | Level 3 | 187,937 | 0.282 | 0 | 0.032 | 0.225 | 0.025 | 0.798 |  |
|  | Level 4 | 151,857 | 0.228 | 0 | 0 | 0.023 | 0.205 | 0.898 |  |
| Claim 1 | Below | 198,780 | 0.316 | 0.243 | 0.07 | 0.003 | 0 | 0.991 | 0.991 |
|  | Near | 206,969 | 0.329 | 0.018 | 0.149 | 0.153 | 0.008 |  |  |
|  | Above | 223,731 | 0.355 | 0 | 0.003 | 0.099 | 0.253 | 0.991 |  |
| Claim 2/4 | Below | 165,469 | 0.263 | 0.211 | 0.042 | 0.008 | 0.002 | 0.961 | 0.973 |
|  | Near | 284,722 | 0.452 | 0.071 | 0.166 | 0.183 | 0.032 |  |  |
|  | Above | 179,289 | 0.285 | 0 | 0.004 | 0.075 | 0.206 | 0.984 |  |
| Claim 3 | Below | 152,892 | 0.243 | 0.205 | 0.031 | 0.006 | 0.001 | 0.974 | 0.981 |
|  | Near | 292,303 | 0.464 | 0.089 | 0.163 | 0.178 | 0.034 |  |  |
|  | Above | 184,285 | 0.293 | 0 | 0.004 | 0.072 | 0.217 | 0.987 |  |
| All Students |  | 666,257 | 1.000 |  |  |  |  |  |  |

Table 2.23: Classification Accuracy, Mathematics Grade 4

| Score | Observed Level | N | P | True L1 | True L2 | True L3 | True L4 | Accuracy by Level | Accuracy Overall |
|---|---|---|---|---|---|---|---|---|---|
| Overall | Level 1 | 156,083 | 0.236 | 0.211 | 0.025 | 0 | 0 | 0.894 | 0.841 |
|  | Level 2 | 199,714 | 0.302 | 0.028 | 0.244 | 0.031 | 0 | 0.806 |  |
|  | Level 3 | 171,263 | 0.259 | 0 | 0.031 | 0.205 | 0.023 | 0.791 |  |
|  | Level 4 | 133,555 | 0.202 | 0 | 0 | 0.021 | 0.181 | 0.895 |  |
| Claim 1 | Below | 227,939 | 0.366 | 0.234 | 0.128 | 0.003 | 0 | 0.992 | 0.991 |
|  | Near | 198,379 | 0.318 | 0.004 | 0.157 | 0.149 | 0.008 |  |  |
|  | Above | 196,944 | 0.316 | 0 | 0.003 | 0.095 | 0.218 | 0.991 |  |
| Claim 2/4 | Below | 192,713 | 0.309 | 0.232 | 0.07 | 0.006 | 0.001 | 0.977 | 0.979 |
|  | Near | 282,907 | 0.454 | 0.04 | 0.199 | 0.176 | 0.039 |  |  |
|  | Above | 147,642 | 0.237 | 0 | 0.004 | 0.056 | 0.177 | 0.983 |  |
| Claim 3 | Below | 184,074 | 0.295 | 0.219 | 0.069 | 0.007 | 0.001 | 0.974 |  |
|  | Near | 278,811 | 0.447 | 0.045 | 0.198 | 0.172 | 0.032 |  |  |
|  | Above | 160,377 | 0.257 | 0 | 0.004 | 0.063 | 0.191 | 0.985 |  |
| All Students |  | 660,615 | 1.000 |  |  |  |  |  |  |

Table 2.24: Classification Accuracy, Mathematics Grade 5

| Score | Observed Level | N | P | True L1 | True L2 | True L3 | True L4 | Accuracy by Level | Accuracy Overall |
|---|---|---|---|---|---|---|---|---|---|
| Overall | Level 1 | 231,644 | 0.336 | 0.303 | 0.033 | 0 | 0 | 0.901 | 0.837 |
|  | Level 2 | 185,346 | 0.269 | 0.031 | 0.209 | 0.028 | 0 | 0.777 |  |
|  | Level 3 | 121,771 | 0.177 | 0 | 0.027 | 0.127 | 0.023 | 0.721 |  |
|  | Level 4 | 150,759 | 0.219 | 0 | 0 | 0.021 | 0.198 | 0.905 |  |
| Claim 1 | Below | 274,832 | 0.422 | 0.316 | 0.103 | 0.004 | 0 | 0.991 | 0.991 |
|  | Near | 195,538 | 0.301 | 0.011 | 0.15 | 0.119 | 0.021 |  |  |
|  | Above | 180,177 | 0.277 | 0 | 0.003 | 0.05 | 0.224 | 0.99 |  |
| Claim 2/4 | Below | 229,227 | 0.352 | 0.278 | 0.063 | 0.008 | 0.004 | 0.966 | 0.973 |
|  | Near | 283,869 | 0.436 | 0.064 | 0.187 | 0.135 | 0.051 |  |  |
|  | Above | 137,451 | 0.211 | 0 | 0.003 | 0.033 | 0.175 | 0.985 |  |
| Claim 3 | Below | 223,860 | 0.344 | 0.281 | 0.055 | 0.007 | 0.002 | 0.974 | 0.977 |
|  | Near | 297,583 | 0.457 | 0.074 | 0.184 | 0.132 | 0.067 |  |  |
|  | Above | 129,104 | 0.198 | 0 | 0.003 | 0.026 | 0.169 | 0.982 |  |
| All Students |  | 689,520 | 1.000 |  |  |  |  |  |  |

Table 2.25: Classification Accuracy, Mathematics Grade 6

| Score | Observed Level | N | P | True L1 | True L2 | True L3 | True L4 | Accuracy by Level | Accuracy Overall |
|---|---|---|---|---|---|---|---|---|---|
| Overall | Level 1 | 226,855 | 0.329 | 0.3 | 0.028 | 0 | 0 | 0.914 | 0.837 |
|  | Level 2 | 191,656 | 0.278 | 0.03 | 0.217 | 0.031 | 0 | 0.781 |  |
|  | Level 3 | 133,752 | 0.194 | 0 | 0.029 | 0.141 | 0.024 | 0.726 |  |
|  | Level 4 | 137,611 | 0.199 | 0 | 0 | 0.02 | 0.179 | 0.897 |  |
| Claim 1 | Below | 270,798 | 0.417 | 0.314 | 0.099 | 0.003 | 0 | 0.992 | 0.991 |
|  | Near | 207,573 | 0.319 | 0.009 | 0.154 | 0.133 | 0.024 |  |  |
|  | Above | 171,666 | 0.264 | 0 | 0.003 | 0.052 | 0.209 | 0.989 |  |
| Claim 2/4 | Below | 238,810 | 0.367 | 0.293 | 0.065 | 0.008 | 0.002 | 0.973 | 0.977 |
|  | Near | 281,424 | 0.433 | 0.052 | 0.191 | 0.144 | 0.046 |  |  |
|  | Above | 129,803 | 0.200 | 0 | 0.003 | 0.034 | 0.162 | 0.985 |  |
| Claim 3 | Below | 228,819 | 0.352 | 0.287 | 0.057 | 0.007 | 0.001 | 0.977 | 0.98 |
|  | Near | 286,945 | 0.441 | 0.069 | 0.179 | 0.134 | 0.059 |  |  |
|  | Above | 134,273 | 0.207 | 0 | 0.003 | 0.03 | 0.173 | 0.985 |  |
| All Students |  | 689,874 | 1.000 |  |  |  |  |  |  |

Table 2.26: Classification Accuracy, Mathematics Grade 7

| Score | Observed Level | N | P | True L1 | True L2 | True L3 | True L4 | Accuracy by Level | Accuracy Overall |
|---|---|---|---|---|---|---|---|---|---|
| Overall | Level 1 | 240,655 | 0.345 | 0.314 | 0.031 | 0 | 0 | 0.909 | 0.839 |
|  | Level 2 | 181,489 | 0.260 | 0.032 | 0.199 | 0.03 | 0 | 0.763 |  |
|  | Level 3 | 137,383 | 0.197 | 0 | 0.027 | 0.148 | 0.022 | 0.751 |  |
|  | Level 4 | 137,184 | 0.197 | 0 | 0 | 0.018 | 0.179 | 0.907 |  |
| Claim 1 | Below | 281,281 | 0.427 | 0.329 | 0.095 | 0.003 | 0 | 0.992 | 0.992 |
|  | Near | 197,865 | 0.301 | 0.011 | 0.15 | 0.127 | 0.013 |  |  |
|  | Above | 178,891 | 0.272 | 0 | 0.002 | 0.064 | 0.206 | 0.991 |  |
| Claim 2/4 | Below | 224,467 | 0.341 | 0.279 | 0.051 | 0.009 | 0.003 | 0.966 | 0.975 |
|  | Near | 288,789 | 0.439 | 0.082 | 0.176 | 0.139 | 0.042 |  |  |
|  | Above | 144,781 | 0.220 | 0 | 0.003 | 0.04 | 0.177 | 0.987 |  |
| Claim 3 | Below | 178,804 | 0.272 | 0.229 | 0.034 | 0.007 | 0.002 | 0.969 |  |
|  | Near | 346,780 | 0.527 | 0.124 | 0.186 | 0.151 | 0.066 |  |  |
|  | Above | 132,453 | 0.201 | 0 | 0.003 | 0.031 | 0.167 | 0.984 |  |
| All Students |  | 696,711 | 1.000 |  |  |  |  |  |  |

Table 2.27: Classification Accuracy, Mathematics Grade 8

| Score | Observed Level | N | P | True L1 | True L2 | True L3 | True L4 | Accuracy by Level | Accuracy Overall |
|---|---|---|---|---|---|---|---|---|---|
| Overall | Level 1 | 265,160 | 0.391 | 0.356 | 0.035 | 0 | 0 | 0.911 | 0.838 |
|  | Level 2 | 158,000 | 0.233 | 0.033 | 0.171 | 0.029 | 0 | 0.733 |  |
|  | Level 3 | 113,539 | 0.167 | 0 | 0.025 | 0.121 | 0.021 | 0.721 |  |
|  | Level 4 | 141,770 | 0.209 | 0 | 0 | 0.018 | 0.191 | 0.913 |  |
| Claim 1 | Below | 280,445 | 0.438 | 0.361 | 0.073 | 0.004 | 0 | 0.992 | 0.992 |
|  | Near | 193,749 | 0.302 | 0.023 | 0.143 | 0.116 | 0.02 |  |  |
|  | Above | 166,511 | 0.260 | 0 | 0.002 | 0.045 | 0.213 | 0.992 |  |
| Claim 2/4 | Below | 239,096 | 0.373 | 0.317 | 0.047 | 0.007 | 0.002 | 0.975 | 0.98 |
|  | Near | 255,332 | 0.399 | 0.082 | 0.153 | 0.124 | 0.04 |  |  |
|  | Above | 146,277 | 0.228 | 0 | 0.002 | 0.038 | 0.188 | 0.989 |  |
| Claim 3 | Below | 200,490 | 0.313 | 0.268 | 0.037 | 0.006 | 0.001 | 0.976 |  |
|  | Near | 304,743 | 0.476 | 0.114 | 0.165 | 0.129 | 0.068 |  |  |
|  | Above | 135,472 | 0.211 | 0 | 0.003 | 0.029 | 0.18 | 0.987 |  |
| All Students |  | 678,469 | 1.000 |  |  |  |  |  |  |

Table 2.28: Classification Accuracy, Mathematics High School

| Score | Observed Level | N | P | True L1 | True L2 | True L3 | True L4 | Accuracy by Level | Accuracy Overall |
|---|---|---|---|---|---|---|---|---|---|
| Overall | Level 1 | 277,348 | 0.437 | 0.375 | 0.044 | 0.012 | 0.007 | 0.857 | 0.769 |
|  | Level 2 | 151,815 | 0.239 | 0.047 | 0.158 | 0.03 | 0.005 | 0.66 |  |
|  | Level 3 | 118,918 | 0.187 | 0.012 | 0.032 | 0.129 | 0.015 | 0.687 |  |
|  | Level 4 | 86,277 | 0.136 | 0.007 | 0.004 | 0.018 | 0.107 | 0.788 |  |
| Claim 1 | Below | 329,036 | 0.520 | 0.396 | 0.098 | 0.017 | 0.009 | 0.951 | 0.938 |
|  | Near | 168,885 | 0.267 | 0.031 | 0.13 | 0.098 | 0.008 |  |  |
|  | Above | 134,996 | 0.213 | 0.011 | 0.009 | 0.07 | 0.124 | 0.907 |  |
| Claim 2/4 | Below | 220,128 | 0.348 | 0.278 | 0.043 | 0.018 | 0.009 | 0.922 | 0.916 |
|  | Near | 303,596 | 0.480 | 0.145 | 0.156 | 0.137 | 0.042 |  |  |
|  | Above | 109,193 | 0.173 | 0.01 | 0.007 | 0.04 | 0.116 | 0.903 |  |
| Claim 3 | Below | 188,257 | 0.297 | 0.239 | 0.036 | 0.015 | 0.008 | 0.924 | 0.919 |
|  | Near | 337,026 | 0.532 | 0.173 | 0.162 | 0.145 | 0.053 |  |  |
|  | Above | 107,634 | 0.170 | 0.008 | 0.007 | 0.037 | 0.118 | 0.911 |  |
| All Students |  | 634,358 | 1.000 |  |  |  |  |  |  |

2.5 Standard Errors of Measurement (SEMs)

The SEM information in this section is based on the student measures and associated SEMs included in the data that Smarter Balanced receives from members after the administration. Smarter Balanced does not compute student measures and SEMs directly; they are assumed to be computed by the test delivery vendors according to the scoring specifications provided by Smarter Balanced, which include the use of equation (2.6) in this chapter for computing SEMs. Because the test is adaptive, different students receive different items, and under equation (2.6) the SEM depends on the items a student received. The amount of measurement error will therefore vary from student to student, even among students with the same estimate of achievement.
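To illustrate why SEMs differ among students with the same achievement estimate, the sketch below assumes that equation (2.6) takes the standard IRT form SEM(θ̂) = 1/√I(θ̂), where I(θ̂) is the sum of item information at the estimate. It further assumes dichotomous two-parameter logistic (2PL) items with scaling constant D = 1.7; polytomous items, which use a different information function, are not covered. The function names and all item parameters are hypothetical.

```python
import math
from typing import Iterable, Tuple

D = 1.7  # IRT scaling constant; an assumption for this sketch


def item_information_2pl(theta: float, a: float, b: float) -> float:
    """Fisher information of a 2PL item (discrimination a, difficulty b) at theta."""
    p = 1.0 / (1.0 + math.exp(-D * a * (theta - b)))
    return (D * a) ** 2 * p * (1.0 - p)


def sem_theta(theta: float, items: Iterable[Tuple[float, float]]) -> float:
    """Theta-metric SEM: reciprocal square root of total test information."""
    info = sum(item_information_2pl(theta, a, b) for a, b in items)
    if info <= 0:
        raise ValueError("test information must be positive")
    return 1.0 / math.sqrt(info)


if __name__ == "__main__":
    # Two hypothetical students with the same estimate (theta = 0.0) who
    # received different 40-item adaptive tests, given as (a, b) pairs.
    student_a = [(1.0, 0.0)] * 40  # more discriminating items
    student_b = [(0.6, 0.0)] * 40  # less discriminating items
    print(round(sem_theta(0.0, student_a), 3), round(sem_theta(0.0, student_b), 3))
```

Both hypothetical students share the estimate θ̂ = 0, yet the student whose items discriminate less receives the larger SEM, because those items supply less information.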

All of the SEM statistics reported in this chapter are in the reporting scale metric. For member data that include SEMs only in the theta metric, the SEMs are transformed to the reporting metric using the multiplicative factors (slopes) of the theta-to-scale-score transformations given in Chapter 5.
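Because the theta-to-scale-score transformation is linear, only its slope affects the SEM: the intercept shifts every score by the same amount and cancels out of the error. A minimal sketch follows; the function name and the slope value are hypothetical placeholders, not the published Chapter 5 constants.

```python
def sem_to_scale(sem_theta: float, slope: float) -> float:
    """Convert a theta-metric SEM to the reporting-scale metric.

    For scale = slope * theta + intercept, the intercept does not change the
    spread of the error, so the SEM is simply rescaled by the (positive) slope.
    """
    if slope <= 0:
        raise ValueError("slope must be positive")
    return sem_theta * slope


if __name__ == "__main__":
    HYPOTHETICAL_SLOPE = 80.0  # placeholder; use the Chapter 5 value per subject
    print(sem_to_scale(0.25, HYPOTHETICAL_SLOPE))
```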

Table 2.29 shows the trend in the SEM by student decile. Deciles were defined by ranking students from lowest to highest scale score and dividing them into 10 equal-sized groups according to rank. Decile 1 contains the 10% of students with the lowest scale scores, and decile 10 contains the 10% with the highest. The SEM reported for a decile in Table 2.29 is the average SEM among examinees in that decile; the Mean SEM column is the average across all examinees in the grade.

Table 2.29: Mean SEM by Decile of Scale Score

| Subject | Grade | Mean SEM | Decile 1 | Decile 2 | Decile 3 | Decile 4 | Decile 5 | Decile 6 | Decile 7 | Decile 8 | Decile 9 | Decile 10 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| ELA/L | 3 | 24.5 | 32.1 | 25.9 | 24.2 | 23.2 | 22.4 | 22.4 | 22.5 | 23.1 | 23.4 | 25.5 |
|  | 4 | 26.8 | 32.1 | 27.3 | 26.4 | 26.0 | 25.7 | 25.1 | 25.1 | 25.1 | 25.6 | 29.0 |
|  | 5 | 25.9 | 30.8 | 25.4 | 24.1 | 24.1 | 24.1 | 24.2 | 24.7 | 25.4 | 26.5 | 29.9 |
|  | 6 | 26.2 | 32.8 | 27.1 | 25.3 | 24.5 | 24.4 | 24.5 | 24.6 | 25.2 | 25.7 | 28.2 |
|  | 7 | 27.6 | 34.1 | 28.6 | 26.7 | 25.9 | 25.4 | 25.4 | 25.6 | 26.3 | 27.3 | 30.6 |
|  | 8 | 27.7 | 34.7 | 28.2 | 26.6 | 26.3 | 25.7 | 25.5 | 25.7 | 26.4 | 27.2 | 30.5 |
|  | HS | 31.3 | 40.8 | 33.2 | 30.5 | 29.1 | 28.5 | 28.5 | 28.6 | 29.4 | 30.5 | 33.9 |
| MATH | 3 | 18.6 | 25.4 | 20.3 | 18.6 | 17.9 | 17.4 | 17.0 | 16.7 | 16.2 | 17.0 | 19.3 |
|  | 4 | 19.2 | 28.3 | 20.9 | 19.1 | 18.3 | 17.8 | 17.2 | 16.9 | 16.8 | 16.9 | 20.1 |
|  | 5 | 22.3 | 34.7 | 27.6 | 25.1 | 22.8 | 20.8 | 19.2 | 18.2 | 17.8 | 17.2 | 20.0 |
|  | 6 | 24.8 | 44.0 | 29.9 | 25.7 | 23.4 | 22.1 | 21.1 | 20.4 | 19.8 | 19.3 | 22.4 |
|  | 7 | 27.2 | 47.2 | 33.4 | 29.4 | 27.1 | 25.4 | 23.8 | 22.2 | 20.9 | 20.0 | 21.8 |
|  | 8 | 29.5 | 48.1 | 36.7 | 33.0 | 29.8 | 27.9 | 27.2 | 25.0 | 22.4 | 21.0 | 23.5 |
|  | HS | 32.9 | 59.6 | 43.3 | 37.4 | 33.4 | 30.5 | 28.4 | 26.3 | 24.4 | 22.4 | 22.9 |
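The decile computation behind Table 2.29 can be sketched as follows. The function name is hypothetical, and two details are assumptions because the exact rules are not stated: tied scale scores keep their input order, and when the number of students is not a multiple of 10 the group sizes differ by at most one.

```python
from statistics import mean
from typing import List, Sequence


def mean_sem_by_decile(scores: Sequence[float], sems: Sequence[float]) -> List[float]:
    """Average SEM in each of 10 groups formed by ranking scale scores.

    Decile 1 holds the lowest-scoring students and decile 10 the highest.
    Ties keep their input order (sorted() is stable); when N is not a
    multiple of 10, group sizes differ by at most one student.
    """
    if len(scores) != len(sems):
        raise ValueError("scores and sems must have the same length")
    n = len(scores)
    if n < 10:
        raise ValueError("need at least 10 students to form deciles")
    order = sorted(range(n), key=lambda i: scores[i])  # lowest score first
    groups: List[List[float]] = [[] for _ in range(10)]
    for rank, idx in enumerate(order):
        groups[rank * 10 // n].append(sems[idx])  # rank -> decile index 0..9
    return [mean(g) for g in groups]
```

Applied to one grade and subject, the ten returned values correspond to the Decile 1 through Decile 10 columns, and their overall average approximates the Mean SEM column.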

Table 2.30 (ELA/literacy) and Table 2.31 (mathematics) show the average SEM near the achievement level cut scores. For each cut score, the tables report the number of students within 10 scale score units of the cut score, the mean of their SEMs, and the standard deviation of their SEMs. In the column headings, "Cut1" is the lowest cut score, defining the lower boundary of level 2; "Cut2" defines the lower boundary of level 3; and "Cut3" defines the lower boundary of level 4.

Table 2.30: Mean SEM near Achievement Level Cut Scores, ELA/Literacy

| Grade | Cut1 N | Cut1 Mean | Cut1 SD | Cut2 N | Cut2 Mean | Cut2 SD | Cut3 N | Cut3 Mean | Cut3 SD |
|---|---|---|---|---|---|---|---|---|---|
| 3 | 44,694 | 23.92 | 1.13 | 55,714 | 22.36 | 1.15 | 49,021 | 23.10 | 1.03 |
| 4 | 42,374 | 25.98 | 1.24 | 52,607 | 25.31 | 1.28 | 49,580 | 25.12 | 1.28 |
| 5 | 42,261 | 24.05 | 1.10 | 51,487 | 24.15 | 1.02 | 50,481 | 25.42 | 1.33 |
| 6 | 40,992 | 25.30 | 1.14 | 55,282 | 24.47 | 1.37 | 44,781 | 25.62 | 1.51 |
| 7 | 36,474 | 26.66 | 1.16 | 52,981 | 25.39 | 1.40 | 41,836 | 26.76 | 1.53 |
| 8 | 38,982 | 26.51 | 1.26 | 47,378 | 25.48 | 1.21 | 40,773 | 26.96 | 1.24 |
| HS | 25,148 | 31.50 | 1.30 | 36,764 | 28.45 | 1.19 | 40,933 | 29.19 | 1.27 |

Table 2.31: Mean SEM near Achievement Level Cut Scores, Mathematics

| Grade | Cut1 N | Cut1 Mean | Cut1 SD | Cut2 N | Cut2 Mean | Cut2 SD | Cut3 N | Cut3 Mean | Cut3 SD |
|---|---|---|---|---|---|---|---|---|---|
| 3 | 51,086 | 18.54 | 1.05 | 63,445 | 17.09 | 0.87 | 50,522 | 16.23 | 0.87 |
| 4 | 47,773 | 19.25 | 1.07 | 62,451 | 17.27 | 1.02 | 46,245 | 16.84 | 0.89 |
| 5 | 50,924 | 23.15 | 1.23 | 53,499 | 18.62 | 1.20 | 45,178 | 17.64 | 1.11 |
| 6 | 44,490 | 23.75 | 1.08 | 52,876 | 20.87 | 0.97 | 41,221 | 19.46 | 1.08 |
| 7 | 42,561 | 27.20 | 1.33 | 45,438 | 22.87 | 1.32 | 35,523 | 20.35 | 1.24 |
| 8 | 42,185 | 28.67 | 1.86 | 38,234 | 25.73 | 1.19 | 31,903 | 21.52 | 1.17 |
| HS | 38,021 | 30.72 | 1.84 | 34,627 | 25.74 | 1.40 | 20,860 | 22.01 | 1.37 |
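The near-cut statistics in Table 2.30 and Table 2.31 amount to filtering students by their distance from a cut score and summarizing their SEMs. In this hypothetical sketch, "within 10 scale score units" is treated as inclusive (|score − cut| ≤ 10) and the SD is the sample standard deviation; both choices are assumptions, as is the function name.

```python
from statistics import mean, stdev
from typing import Sequence, Tuple


def sem_near_cut(
    scores: Sequence[float],
    sems: Sequence[float],
    cut: float,
    window: float = 10.0,
) -> Tuple[int, float, float]:
    """Return (N, mean SEM, SD of SEM) for students within `window` of `cut`.

    Assumptions: the window is inclusive, and SD is the sample SD (n - 1).
    """
    near = [se for s, se in zip(scores, sems) if abs(s - cut) <= window]
    if len(near) < 2:
        raise ValueError("need at least two students near the cut score")
    return len(near), mean(near), stdev(near)
```

Applied once per grade and cut score (Cut1, Cut2, Cut3), the function yields one N, Mean, and SD triple of the kind reported in these tables.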

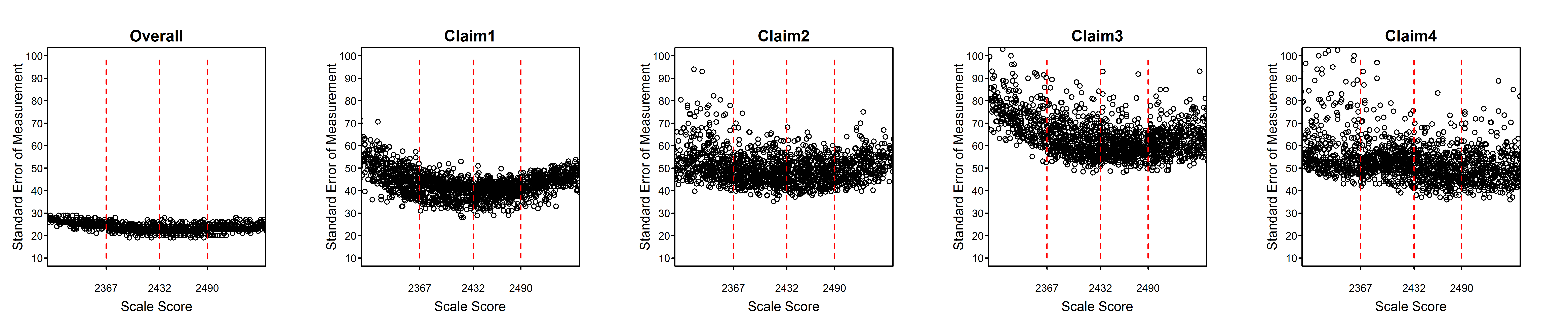

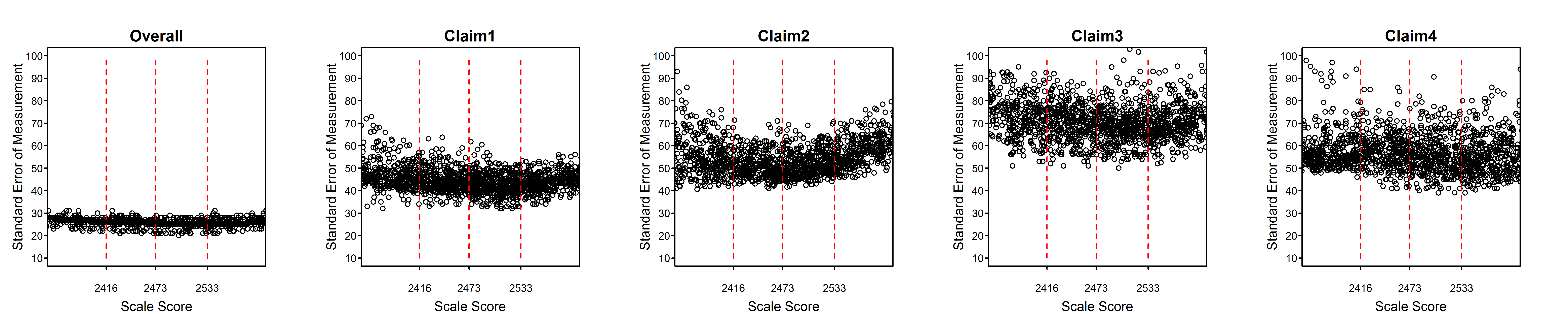

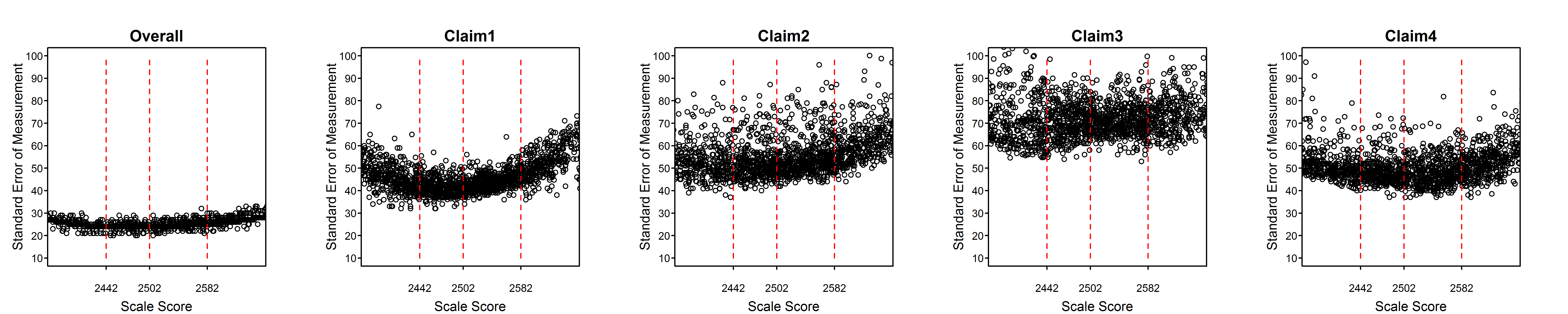

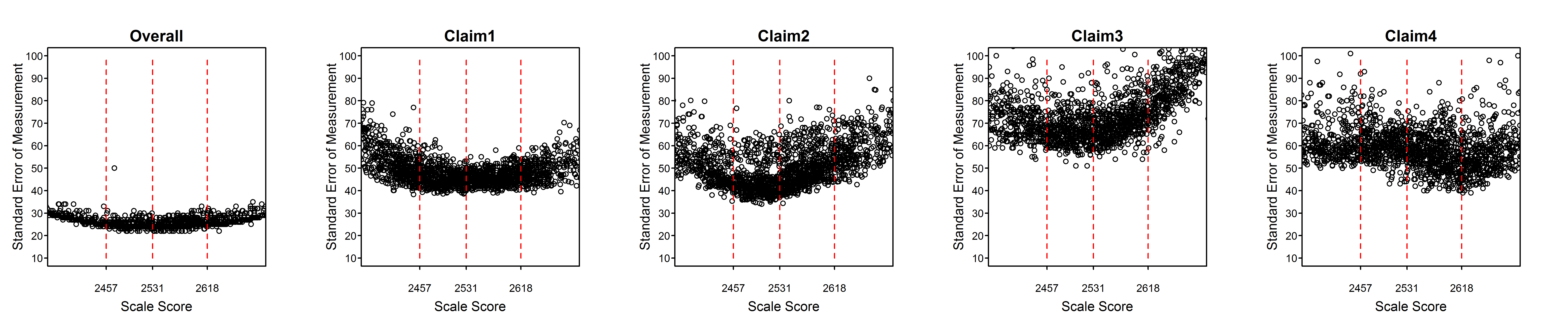

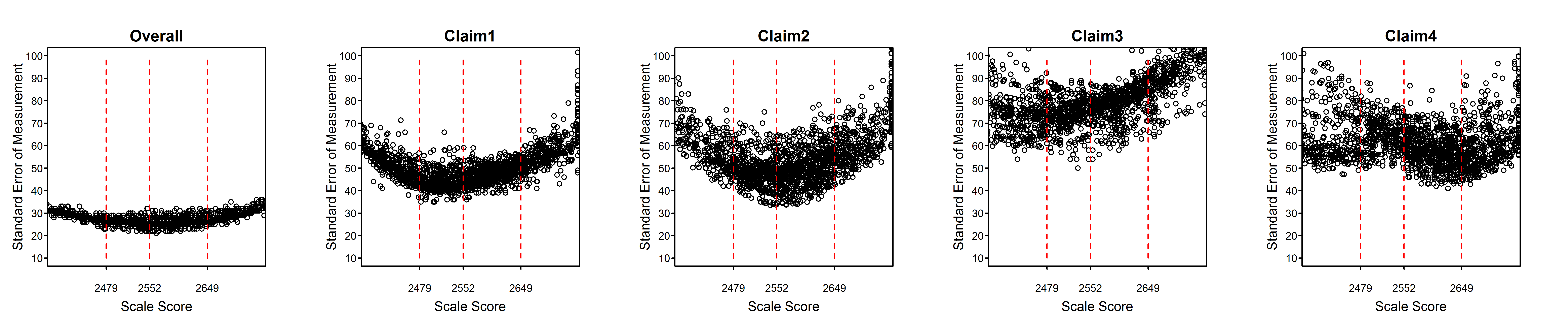

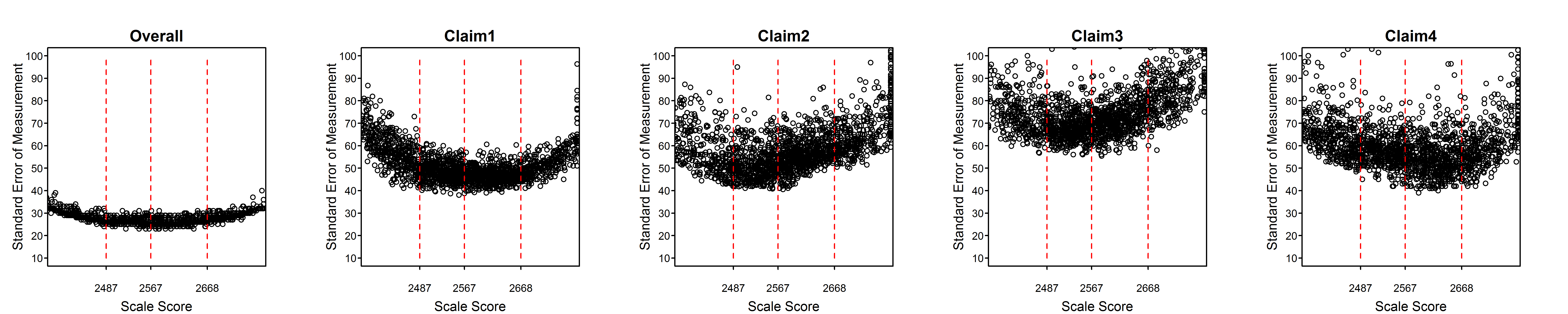

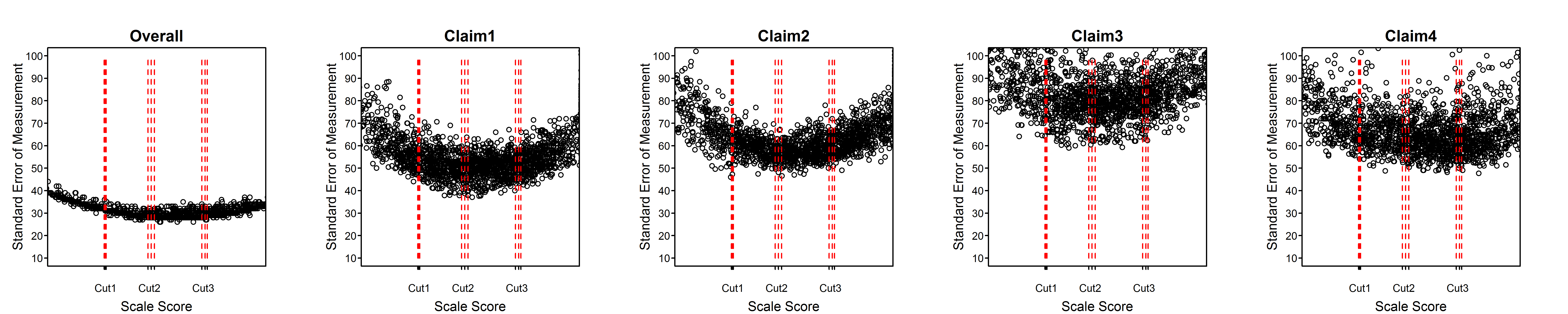

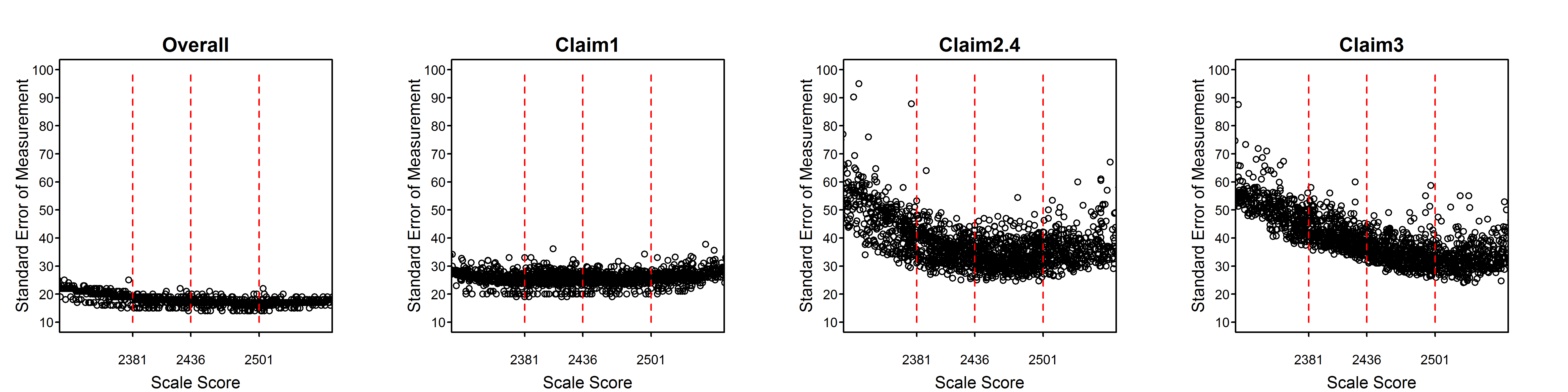

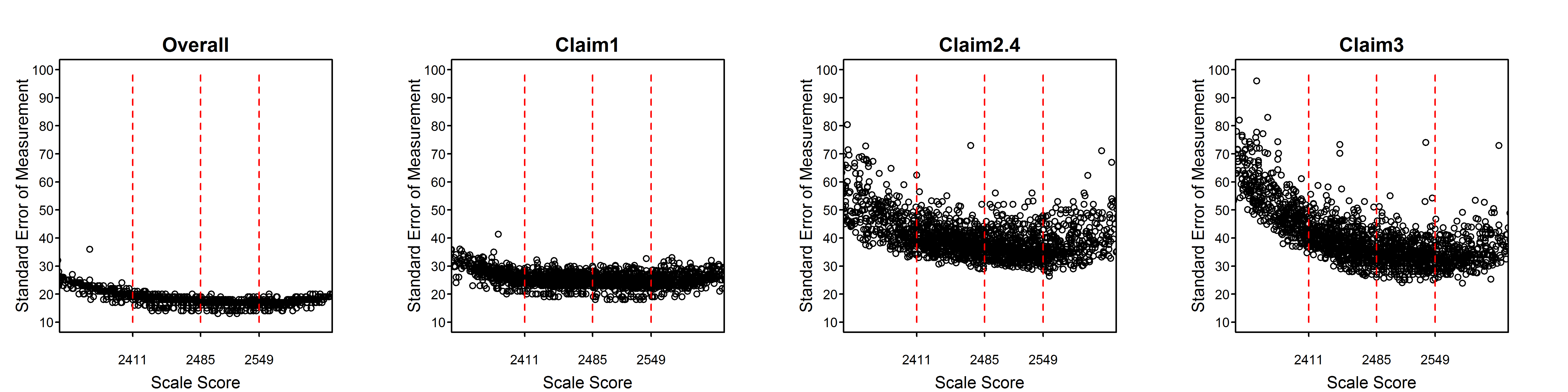

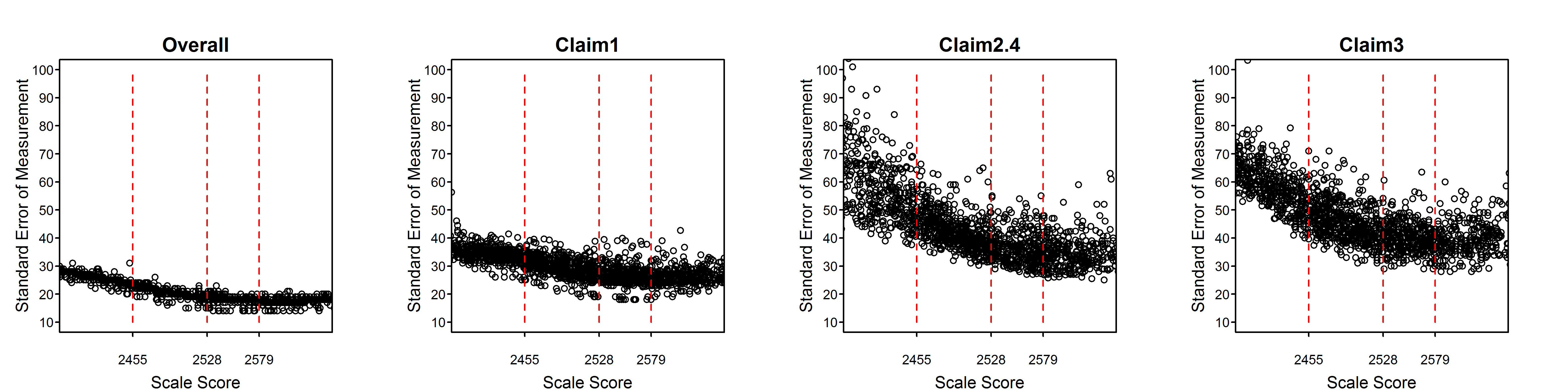

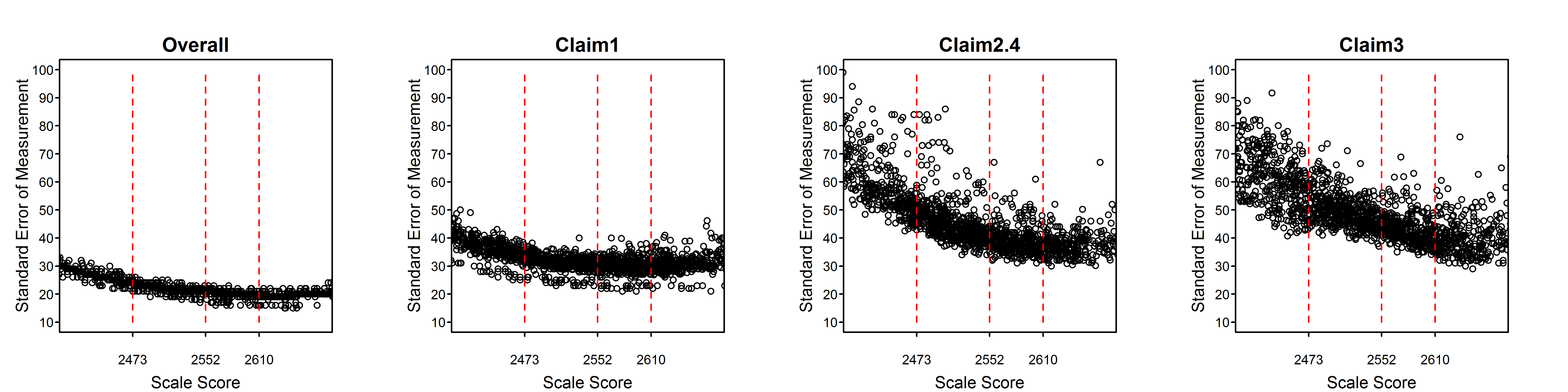

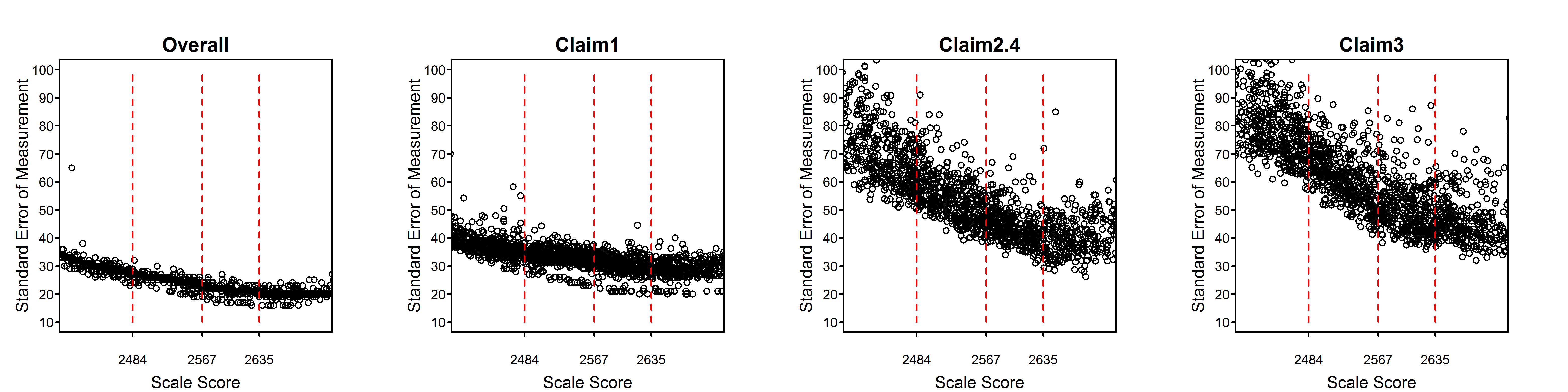

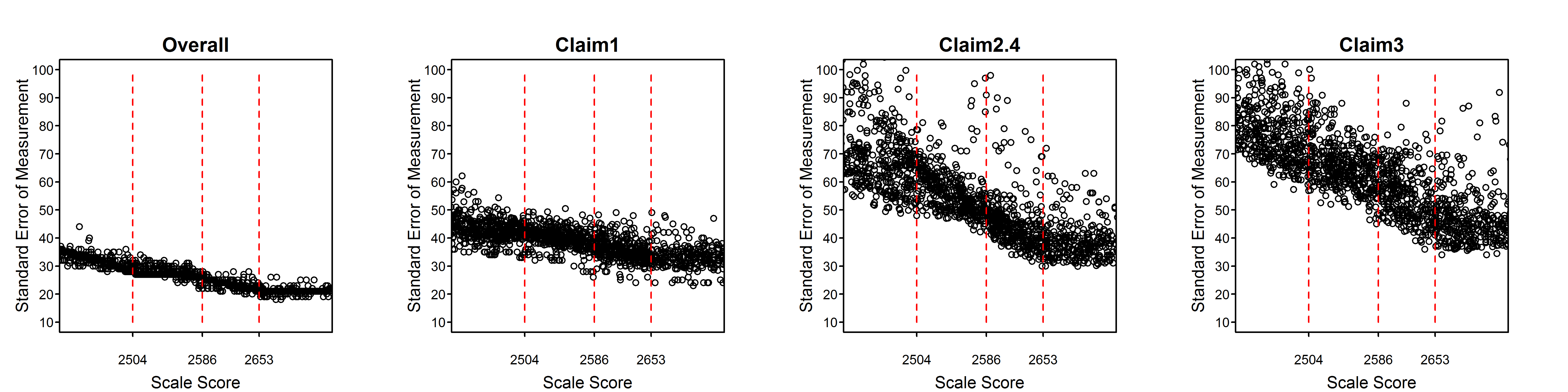

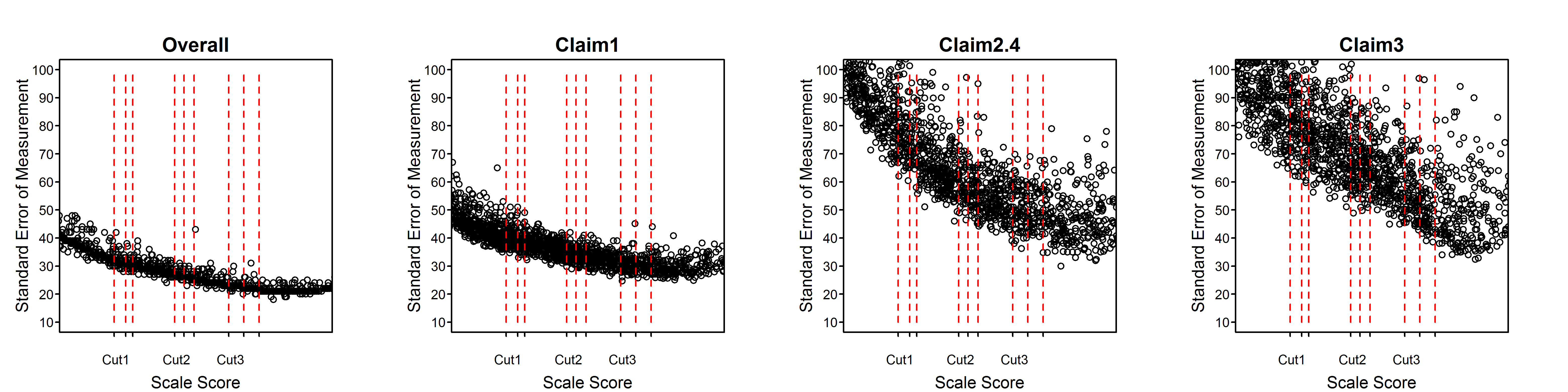

Figure 2.2 to Figure 2.15 are scatter plots of individual student SEMs as a function of scale score for the total test and for claims/subscores, by grade within subject. These plots show the variability of SEMs among students with the same scale score as well as the trend in SEM with student achievement (scale score). Compared with the total score, a claim score has greater measurement error, and greater variability in measurement error among students, because it is based on fewer items. Among claims, those represented by fewer items tend to have higher measurement error and greater variability of measurement error than those represented by more items.
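The link between item count and SEM can be made explicit under two simplifying assumptions: the SEM is the reciprocal square root of test information, as in standard IRT scoring, and each of the $n$ items contributes roughly the same information $I_0$ at the student's ability $\theta$:

$$
\mathrm{SEM}(\theta) = \frac{1}{\sqrt{\sum_{j=1}^{n} I_j(\theta)}} \approx \frac{1}{\sqrt{n\, I_0}},
\qquad
\frac{\mathrm{SEM}_{\text{claim}}}{\mathrm{SEM}_{\text{total}}} \approx \sqrt{\frac{n_{\text{total}}}{n_{\text{claim}}}}.
$$

Under these assumptions, a claim score based on one quarter of a test's items would have an SEM roughly twice that of the total score. Real claims differ in item information as well as item count, so this is only an approximation.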

Dashed vertical lines in Figure 2.2 to Figure 2.15 represent the achievement level cut scores. The high school plots show separate cut scores for grades 9, 10, and 11.

Figure 2.2: Students’ Standard Error of Measurement by Scale Score, ELA/Literacy Grade 3

Figure 2.3: Students’ Standard Error of Measurement by Scale Score, ELA/Literacy Grade 4

Figure 2.4: Students’ Standard Error of Measurement by Scale Score, ELA/Literacy Grade 5

Figure 2.5: Students’ Standard Error of Measurement by Scale Score, ELA/Literacy Grade 6

Figure 2.6: Students’ Standard Error of Measurement by Scale Score, ELA/Literacy Grade 7

Figure 2.7: Students’ Standard Error of Measurement by Scale Score, ELA/Literacy Grade 8

Figure 2.8: Students’ Standard Error of Measurement by Scale Score, ELA/Literacy High School

Figure 2.9: Students’ Standard Error of Measurement by Scale Score, Mathematics Grade 3

Figure 2.10: Students’ Standard Error of Measurement by Scale Score, Mathematics Grade 4

Figure 2.11: Students’ Standard Error of Measurement by Scale Score, Mathematics Grade 5

Figure 2.12: Students’ Standard Error of Measurement by Scale Score, Mathematics Grade 6

Figure 2.13: Students’ Standard Error of Measurement by Scale Score, Mathematics Grade 7

Figure 2.14: Students’ Standard Error of Measurement by Scale Score, Mathematics Grade 8

Figure 2.15: Students’ Standard Error of Measurement by Scale Score, Mathematics High School

All of the tables and figures in this section, for every grade and subject, show a trend of higher measurement error for lower-achieving students. This trend reflects the fact that the item pool is difficult relative to overall student achievement. The CAT algorithm does deliver easier items to lower-achieving students than they would typically receive on a non-adaptive test, or on a fixed form whose difficulty is similar to that of the item pool as a whole. Nevertheless, low-achieving students still tend to receive items that are relatively difficult for them, typically because the CAT algorithm has no easier items available that also satisfy the blueprint constraints that must be met for all students.
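Item information under the two-parameter logistic (2PL) model shows why a difficult item pool raises SEMs for low-achieving students. Assuming 2PL items with scaling constant $D = 1.7$ (an assumption for this illustration; the operational model also includes polytomous items),

$$
I_j(\theta) = D^2 a_j^2 \, P_j(\theta)\,[1 - P_j(\theta)],
\qquad
P_j(\theta) = \frac{1}{1 + \exp[-D a_j(\theta - b_j)]}.
$$

For a hypothetical item with $a_j = 1$, information is greatest at $\theta = b_j$, where $P_j = .5$ and $I_j = 1.7^2 \times .25 \approx .723$. For a student 1.5 units below the item's difficulty on the theta scale, $P_j \approx .072$ and $I_j \approx .194$, about 27% of the maximum. A test made entirely of such items would have an SEM about $1/\sqrt{.27} \approx 1.9$ times that of a test of equally many well-matched items.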

Some plots, such as the grade 6 claim 4 ELA/literacy plot and the overall and claim 1 mathematics plots for grades 3 through 6, show two separate sets of trends that differ mainly in the vertical dimension (SEM). The reason for this pattern will be investigated. It is possible that some member jurisdictions compute the SEM with a formula that does not conform to equation (2.6) in this chapter. In the future, Smarter Balanced will perform routine checks of the student measures and standard errors received from members, using the item-level data included in the members' data.