Chapter 4 Test Design

4.1 Introduction

The intent of this chapter is to show how the assessment design supports the purposes of Smarter Balanced interim assessments. Test design entails developing a test philosophy (i.e., Theory of Action), identifying test purposes, and determining the targeted examinee populations, test specifications, item pool design, and other features such as test delivery (Schmeiser & Welch, 2006). The Smarter Balanced Theory of Action, test purposes, and the targeted examinee population were outlined in the introduction of this report and in Chapter 1. The following section provides information about how content specifications, item specifications, and item pool design were established for the interim assessment’s content structure.

4.2 Content Structure

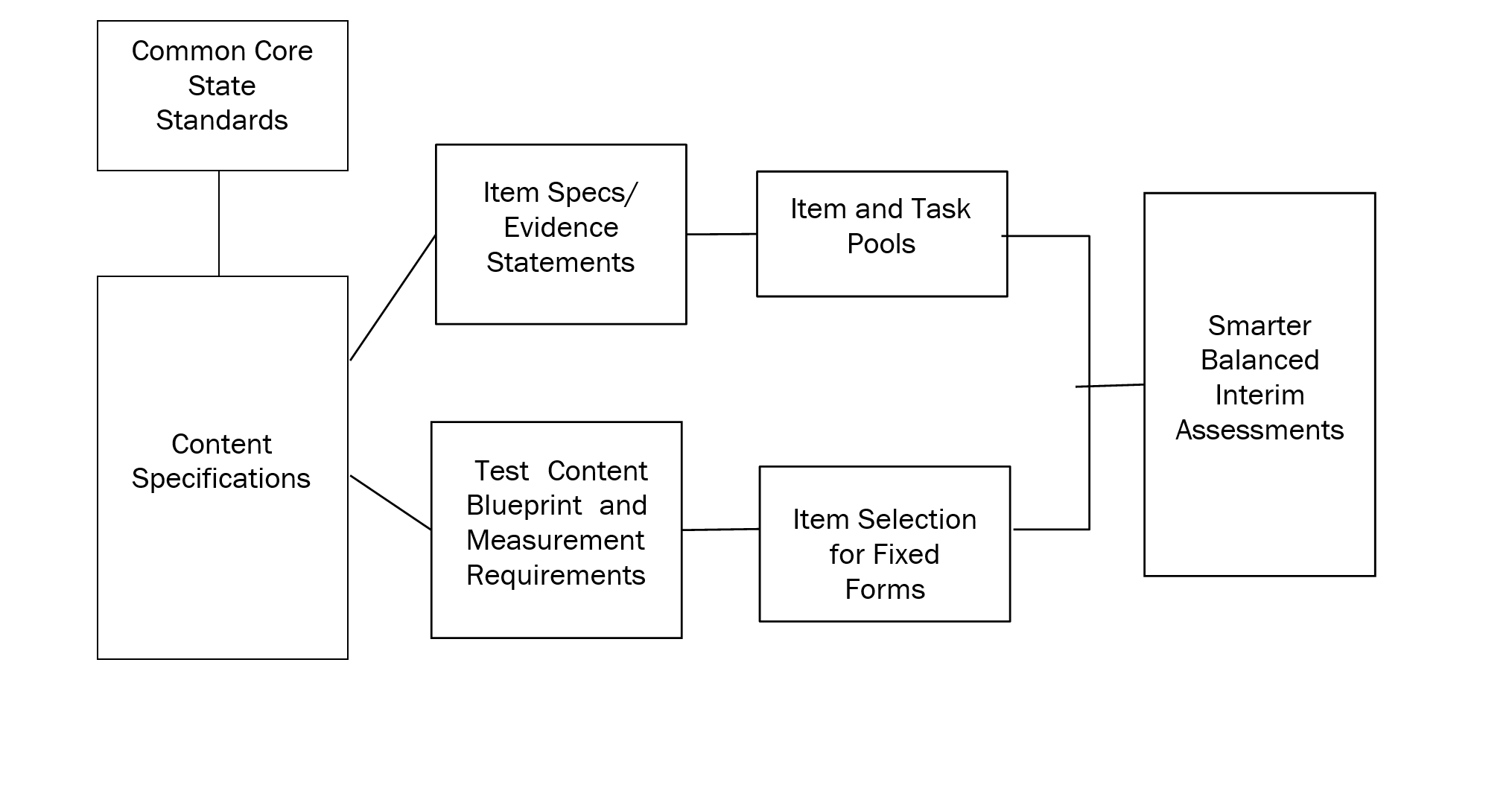

Figure 4.1 briefly encapsulates the Smarter Balanced interim test design process. An early goal in the development of Smarter Balanced assessments was to align the assessment with the expectations of content, rigor, and performance that comprise the Common Core State Standards (CCSS). Because the Common Core State Standards were not specifically developed for assessment, they contain extensive rationale and information concerning instruction. Therefore, adopting previous practices used by many state programs, Smarter Balanced content experts produced content specifications in ELA/literacy and mathematics, distilling assessment-focused elements from the CCSS. The Smarter Balanced content specifications for the interim comprehensive assessment (ICA) and the interim assessment blocks (IABs) are the same as those for the summative assessments and can be found in the Smarter Balanced Content Specifications (Smarter Balanced, 2017a,b).

Figure 4.1: Components of Smarter Balanced Test Design

Item development specifications (https://portal.smarterbalanced.org/library-home/) are then based on content specifications. Each item is aligned to a specific claim and target and to a Common Core State Standard.

There are four broad claims within each of the two subject areas (ELA/literacy and mathematics) in grades 3 to 8 and high school. Within each claim, there are a number of assessment targets. The claims in ELA/literacy and mathematics are given in Table 4.1.

Table 4.1: Claims for ELA/Literacy and Mathematics

| Claim | ELA/Literacy | Mathematics |
|---|---|---|
| 1 | Reading | Concepts and Procedures |
| 2 | Writing | Problem Solving |
| 3 | Speaking/Listening | Communicating Reasoning |
| 4 | Research | Modeling and Data Analysis |

Currently, only the listening part of ELA/literacy claim 3 is assessed. For the ICA in mathematics, claims 2 and 4 are reported together, which results in three reporting categories for mathematics. For the IABs, the claim/target structure is used in test specification, and results are reported for the overall IAB.
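The grouping of mathematics claims into reporting categories can be illustrated with a short sketch. It uses the grade 3 mathematics ICA item counts reported in Table 4.4 later in this chapter; the category labels and the function name are assumptions made for illustration, not names used by Smarter Balanced.

```python
# Claim-level item counts for the grade 3 mathematics ICA (from Table 4.4).
grade3_math_items = {1: 20, 2: 4, 3: 8, 4: 5}

# Claims 2 and 4 are reported together; the labels here are illustrative.
REPORTING_CATEGORIES = {
    "Claim 1": (1,),
    "Claims 2 and 4": (2, 4),
    "Claim 3": (3,),
}


def items_by_reporting_category(items_by_claim):
    """Sum claim-level item counts into the three reporting categories."""
    return {
        name: sum(items_by_claim[claim] for claim in claims)
        for name, claims in REPORTING_CATEGORIES.items()
    }


category_counts = items_by_reporting_category(grade3_math_items)
print(category_counts)
# {'Claim 1': 20, 'Claims 2 and 4': 9, 'Claim 3': 8}
```

The four claims collapse to three categories, and the total number of items (37) is unchanged.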

Because of the breadth in coverage of the individual claims, targets, and target clusters within each claim, evidence statements define more specific performance expectations. The relationship between targets and Common Core State Standards elements is made explicit in the Smarter Balanced Content Specifications (Smarter Balanced, 2017a,b). The claim/target hierarchy is the basis for summative and ICA test structure. IABs are based on target clusters or content domains that correspond to skill clusters commonly taught as a group.

4.3 Item and Task Development

All items and tasks in the interim assessments were drawn from the same item pool that is used for the summative assessments. For details, refer to the sections on item and task development and field testing in Chapter 4 of the 2018-19 summative technical report (Smarter Balanced, 2020).

4.4 Test Blueprints

Test specifications and blueprints define the knowledge, skills, and abilities intended to be measured on each student’s test event. A blueprint also specifies how skills are sampled from a set of content standards (i.e., the CCSS). Other important factors, such as depth of knowledge (DOK), are also specified. Specifically, a test blueprint is a formal document that guides the development and assembly of an assessment by explicating the following types of essential information:

- content (claims and assessment targets) that is included for each assessed subject and grade, across various levels of the system (student, classroom, school, district, state);

- the relative emphasis of content standards generally indicated as the number of items or percentage of points per claim and assessment target;

- task models used or required, which communicate to item developers how to measure each claim and assessment target and communicate to teachers and students about learning expectations; and

- depth of knowledge (DOK), indicating the complexity of item types for each claim and assessment target.

The following sections provide more details about the interim test blueprints.

4.4.1 Performance Task (PT) and Non-Performance Task (Non-PT) Components

Each ICA and some IABs contain performance tasks. Performance tasks measure a student’s ability to integrate knowledge and skills across multiple standards. Performance tasks measure capacities such as essay writing, research skills, and complex analysis, which are not as easy to assess with individual, discrete items. Each ELA/literacy performance task in the ICAs has a set of related stimuli presented with two or three research items and an essay. In this respect, the ICAs for 2018–19 differ from the summative assessments for 2018–19. The PTs in the summative assessments for ELA/literacy in 2018–19 contain only one research item. Each mathematics performance task has four to six items relating to a central problem or stimulus.

The ICA for each subject consists of two parts: a performance task (PT) part and a non-performance task (non-PT) part. Both parts are online. The non-PT section in an ICA is the counterpart of the computer adaptive section in the summative assessment. As with the summative assessment, no order is imposed on ICA components; either the non-PT or PT portion can be administered to students first.

Performance tasks on IABs are usually stand-alone tasks with four to six items. They are given separately from non-PT IABs and are intended primarily to give students practice and to give teachers professional development in hand scoring and understanding task demands.

4.4.2 Interim Comprehensive Assessments (ICAs)

The ICA blueprints are very similar to the summative blueprints. The exceptions generally arise because ICAs are fixed forms, while the summative assessments are computer adaptive. Typically, blueprints for computer adaptive tests specify a range, rather than an exact number, of items or points that must be delivered for each test content area. Blueprints for fixed forms can specify an exact number. ICA blueprints for 2018-19 are available online (Smarter Balanced, 2019e,e). Summative blueprints for the 2018-19 assessments are also available online (Smarter Balanced, 2018d,e).
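The difference between a range-based and an exact blueprint requirement can be sketched as a small data structure. The class name, field names, and all item counts below are hypothetical and are not taken from any Smarter Balanced blueprint; the sketch only shows that a fixed-form rule is the special case in which the minimum and maximum coincide.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ItemCountRule:
    """Allowed number of items for one content area (hypothetical structure)."""
    content_area: str
    min_items: int
    max_items: int

    def allows(self, n_items: int) -> bool:
        """Return True if a delivered item count satisfies the rule."""
        return self.min_items <= n_items <= self.max_items

    @property
    def is_exact(self) -> bool:
        """A rule is exact when only one item count satisfies it."""
        return self.min_items == self.max_items


# Illustrative numbers only, not values from a published blueprint.
adaptive_rule = ItemCountRule("Claim 1", min_items=14, max_items=16)
fixed_form_rule = ItemCountRule("Claim 1", min_items=15, max_items=15)

print(adaptive_rule.is_exact, fixed_form_rule.is_exact)  # False True
```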

Beginning with the 2018–19 school year, the number of research (claim 4) items in the ELA/literacy summative assessment performance task was reduced from two or three to one, and the number of CAT research items was increased to compensate. Due to resource and time constraints, the ELA/literacy performance tasks of the ICAs used in 2018–19 were not similarly changed; in this respect, they remain similar to the summative blueprints used in previous administrations (Smarter Balanced, 2016g,h).

For each designated grade range (3 to 5, 6 to 8, and high school), the ICA blueprints document the claims that comprise reporting categories, the stimuli used, the number of items by non-PT or PT, and the total number of items by claim. Details are given separately for each grade and include claim, assessment target, DOK, assessment type (non-PT/PT), and the total number of items. Assessment targets are nested within claims and represent a more detailed specification of content. Note that in addition to the nested hierarchical structure, each blueprint also specifies a number of rules applied at global or claim levels. Most of these specifications are in the footnotes, which constitute important parts of the test blueprints.

4.4.3 Interim Assessment Blocks (IABs)

Interim assessment blocks are designed by teams of content experts to reflect groups of skills most likely to be addressed in instructional units. These tests are short and contain a focused set of skills. They provide an indicator of whether a student is clearly above or below standard, or whether information is not sufficient to make a judgment with regard to meeting the standard. As noted in the overview, focused interim assessment blocks (FIABs) became available after 2018 and are not included in this report.

Blueprints are specified for all IABs. Each IAB blueprint contains information about the claim(s), assessment target(s), and DOK level(s) addressed by the items in that block, as well as the numbers of items allocated to each of those categories. Other more subject-specific information is also included. For example, the ELA/literacy IAB blueprints incorporate details on passage length and scoring of responses, while the mathematics IAB blueprints specify to what extent the relevant task models are represented in each block. Details are given separately for each grade. The details include claim, assessment target, DOK, assessment type (non-PTs/PT), and the total number of items. IAB blueprints for 2018-19 are available online (Smarter Balanced, 2019b,c).

4.5 Test Maps

A test map is provided for every interim assessment. Test maps for 2018-19 are provided in appendices to this report. Table 4.5, in a later section of this chapter, gives the meaning of the abbreviations used to represent item types in the test maps. Test maps include the following information for each interim assessment and for each item in the test:

For each ICA (see Appendix A for ELA/literacy and Appendix B for mathematics):

- Grade

- Part (performance task [PT] or not a performance task [non-PT])

- For each item in the ICA:

    - Item sequence

    - Claim

    - Target

    - Depth of knowledge (DOK)

    - Item type

    - Point value

For each IAB (see Appendix C for ELA/literacy and Appendix D for mathematics):

- Grade

- Block name (name of the IAB)

- For each item in the IAB:

    - Item sequence

    - Claim

    - Target

    - Depth of knowledge (DOK)

    - Item type

    - Point value
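The fields listed above can be represented as a simple record type. The following is a minimal sketch, assuming one record per item; the class name, field names, and example rows are hypothetical and do not reproduce the layout or contents of the appendices.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class ItemRecord:
    """One item row of a test map (field names are illustrative)."""
    grade: int
    item_sequence: int
    claim: int
    target: str
    dok: int
    item_type: str                    # abbreviation such as "MC" or "SA"
    point_value: int
    part: Optional[str] = None        # ICA maps only: "PT" or "non-PT"
    block_name: Optional[str] = None  # IAB maps only: name of the IAB


def total_points(items):
    """Sum the point values of all items in one test map."""
    return sum(item.point_value for item in items)


# Hypothetical rows for illustration; they are not copied from the appendices.
example_map = [
    ItemRecord(grade=3, item_sequence=1, claim=1, target="A", dok=1,
               item_type="MC", point_value=1, part="non-PT"),
    ItemRecord(grade=3, item_sequence=2, claim=2, target="B", dok=3,
               item_type="SA", point_value=2, part="PT"),
]

print(total_points(example_map))  # 3
```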

4.6 Composition of Interim Item Pools

This section presents counts of items in the IABs and ICAs by claim and item type and also presents a summary of item statistics (difficulty and discrimination) for each IAB and ICA. For each interim assessment, the number of items by claim matches the blueprint specifications.

4.6.1 Composition by Claim

The number of items per claim in each IAB is shown in Table 4.2 (ELA/literacy) and Table 4.3 (mathematics). The number of items per claim in each ICA is shown in Table 4.4.

Table 4.2: Number of Items by Claim in ELA/Literacy IABs

| Grade | Interim Assessment Block Name | Claim 1 | Claim 2 | Claim 3 | Claim 4 | Total # Items |
|---|---|---|---|---|---|---|
| 3 | Brief Writes | 0 | 6 | 0 | 0 | 6 |
| 3 | Editing | 0 | 15 | 0 | 0 | 15 |
| 3 | Language and Vocabulary Use | 0 | 15 | 0 | 0 | 15 |
| 3 | Listen/Interpret | 0 | 0 | 0 | 15 | 15 |
| 3 | Performance Task | 0 | 2 | 3 | 0 | 5 |
| 3 | Read Informational Texts | 16 | 0 | 0 | 0 | 16 |
| 3 | Read Literary Texts | 15 | 0 | 0 | 0 | 15 |
| 3 | Research | 0 | 0 | 18 | 0 | 18 |
| 3 | Revision | 0 | 15 | 0 | 0 | 15 |
| 4 | Brief Writes | 0 | 6 | 0 | 0 | 6 |
| 4 | Editing | 0 | 15 | 0 | 0 | 15 |
| 4 | Language and Vocabulary Use | 0 | 15 | 0 | 0 | 15 |
| 4 | Listen/Interpret | 0 | 0 | 0 | 14 | 14 |
| 4 | Performance Task | 0 | 2 | 3 | 0 | 5 |
| 4 | Read Informational Texts | 14 | 0 | 0 | 0 | 14 |
| 4 | Read Literary Texts | 15 | 0 | 0 | 0 | 15 |
| 4 | Research | 0 | 0 | 18 | 0 | 18 |
| 4 | Revision | 0 | 15 | 0 | 0 | 15 |
| 5 | Brief Writes | 0 | 6 | 0 | 0 | 6 |
| 5 | Editing | 0 | 14 | 0 | 0 | 14 |
| 5 | Language and Vocabulary Use | 0 | 15 | 0 | 0 | 15 |
| 5 | Listen/Interpret | 0 | 0 | 0 | 14 | 14 |
| 5 | Performance Task | 0 | 2 | 3 | 0 | 5 |
| 5 | Read Informational Texts | 15 | 0 | 0 | 0 | 15 |
| 5 | Read Literary Texts | 14 | 0 | 0 | 0 | 14 |
| 5 | Research | 0 | 0 | 18 | 0 | 18 |
| 5 | Revision | 0 | 15 | 0 | 0 | 15 |
| 6 | Brief Writes | 0 | 6 | 0 | 0 | 6 |
| 6 | Editing | 0 | 14 | 0 | 0 | 14 |
| 6 | Language and Vocabulary Use | 0 | 15 | 0 | 0 | 15 |
| 6 | Listen/Interpret | 0 | 0 | 0 | 15 | 15 |
| 6 | Performance Task | 0 | 2 | 2 | 0 | 4 |
| 6 | Read Informational Texts | 16 | 0 | 0 | 0 | 16 |
| 6 | Read Literary Texts | 12 | 0 | 0 | 0 | 12 |
| 6 | Research | 0 | 0 | 18 | 0 | 18 |
| 6 | Revision | 0 | 15 | 0 | 0 | 15 |
| 7 | Brief Writes | 0 | 6 | 0 | 0 | 6 |
| 7 | Editing | 0 | 14 | 0 | 0 | 14 |
| 7 | Language and Vocabulary Use | 0 | 15 | 0 | 0 | 15 |
| 7 | Listen/Interpret | 0 | 0 | 0 | 15 | 15 |
| 7 | Performance Task | 0 | 2 | 3 | 0 | 5 |
| 7 | Read Informational Texts | 16 | 0 | 0 | 0 | 16 |
| 7 | Read Literary Texts | 15 | 0 | 0 | 0 | 15 |
| 7 | Research | 0 | 0 | 18 | 0 | 18 |
| 7 | Revision | 0 | 15 | 0 | 0 | 15 |
| 8 | Brief Writes | 0 | 6 | 0 | 0 | 6 |
| 8 | Edit/Revise | 0 | 14 | 0 | 0 | 14 |
| 8 | Listen/Interpret | 0 | 0 | 0 | 15 | 15 |
| 8 | Performance Task | 0 | 2 | 3 | 0 | 5 |
| 8 | Read Informational Texts | 16 | 0 | 0 | 0 | 16 |
| 8 | Read Literary Texts | 16 | 0 | 0 | 0 | 16 |
| 8 | Research | 0 | 0 | 18 | 0 | 18 |
| 11 | Brief Writes | 0 | 6 | 0 | 0 | 6 |
| 11 | Editing | 0 | 15 | 0 | 0 | 15 |
| 11 | Language and Vocabulary | 0 | 15 | 0 | 0 | 15 |
| 11 | Listen/Interpret | 0 | 0 | 0 | 15 | 15 |
| 11 | Performance Task | 0 | 2 | 2 | 0 | 4 |
| 11 | Read Informational Texts | 15 | 0 | 0 | 0 | 15 |
| 11 | Read Literary Texts | 16 | 0 | 0 | 0 | 16 |
| 11 | Research | 0 | 0 | 18 | 0 | 18 |
| 11 | Revision | 0 | 15 | 0 | 0 | 15 |

Table 4.3: Number of Items by Claim in Mathematics IABs

| Grade | Interim Assessment Block Name | Claim 1 | Claim 2 | Claim 3 | Claim 4 | Total # Items |
|---|---|---|---|---|---|---|
| 3 | Geometry | 12 | 0 | 0 | 0 | 12 |
| 3 | Measurement and Data | 12 | 1 | 1 | 1 | 15 |
| 3 | Number and Operations – Fractions | 13 | 0 | 0 | 1 | 14 |
| 3 | Number and Operations in Base Ten | 12 | 2 | 0 | 0 | 14 |
| 3 | Operations and Algebraic Thinking | 12 | 1 | 1 | 1 | 15 |
| 3 | Performance Task | 0 | 2 | 2 | 2 | 6 |
| 4 | Geometry | 11 | 0 | 0 | 0 | 11 |
| 4 | Measurement and Data | 13 | 1 | 1 | 0 | 15 |
| 4 | Number and Operations – Fractions | 12 | 1 | 0 | 2 | 15 |
| 4 | Number and Operations in Base Ten | 12 | 1 | 0 | 2 | 15 |
| 4 | Operations and Algebraic Thinking | 9 | 2 | 3 | 2 | 16 |
| 4 | Performance Task | 0 | 2 | 3 | 1 | 6 |
| 5 | Geometry | 9 | 1 | 1 | 2 | 13 |
| 5 | Measurement and Data | 9 | 3 | 1 | 1 | 14 |
| 5 | Number and Operations – Fractions | 11 | 1 | 1 | 2 | 15 |
| 5 | Number and Operations in Base Ten | 11 | 1 | 1 | 2 | 15 |
| 5 | Operations and Algebraic Thinking | 13 | 1 | 1 | 0 | 15 |
| 5 | Performance Task | 0 | 2 | 2 | 2 | 6 |
| 6 | Expressions and Equations | 13 | 1 | 1 | 1 | 16 |
| 6 | Geometry | 11 | 1 | 1 | 1 | 14 |
| 6 | Performance Task | 0 | 2 | 2 | 2 | 6 |
| 6 | Ratios and Proportional Relationships | 11 | 1 | 0 | 1 | 13 |
| 6 | Statistics and Probability | 13 | 0 | 0 | 0 | 13 |
| 6 | The Number System | 13 | 1 | 0 | 1 | 15 |
| 7 | Expressions and Equations | 12 | 1 | 1 | 1 | 15 |
| 7 | Geometry | 11 | 2 | 0 | 0 | 13 |
| 7 | Performance Task | 0 | 2 | 2 | 2 | 6 |
| 7 | Ratios and Proportional Relationships | 10 | 1 | 1 | 1 | 13 |
| 7 | Statistics and Probability | 13 | 0 | 2 | 0 | 15 |
| 7 | The Number System | 11 | 0 | 1 | 2 | 14 |
| 8 | Expressions and Equations I | 9 | 3 | 0 | 2 | 14 |
| 8 | Expressions and Equations II | 10 | 1 | 1 | 1 | 13 |
| 8 | Functions | 11 | 1 | 1 | 2 | 15 |
| 8 | Geometry | 12 | 0 | 1 | 1 | 14 |
| 8 | Performance Task | 0 | 2 | 2 | 2 | 6 |
| 8 | The Number System | 13 | 0 | 0 | 0 | 13 |
| 11 | Algebra and Functions I - Linear Functions, Equations, and Inequalities | 11 | 2 | 1 | 1 | 15 |
| 11 | Algebra and Functions II - Quadratic Functions, Equations, and Inequalities | 12 | 0 | 2 | 1 | 15 |
| 11 | Geometry | 11 | 1 | 0 | 3 | 15 |
| 11 | Geometry Congruence | 0 | 0 | 0 | 12 | 12 |
| 11 | Geometry Measurement and Modeling | 0 | 4 | 6 | 0 | 10 |
| 11 | Interpreting Functions | 10 | 1 | 2 | 1 | 14 |
| 11 | Number and Quantity | 11 | 0 | 1 | 3 | 15 |
| 11 | Performance Task | 0 | 1 | 3 | 2 | 6 |
| 11 | Seeing Structure in Expressions and Polynomial Expressions | 10 | 0 | 1 | 4 | 15 |
| 11 | Statistics and Probability | 6 | 3 | 3 | 0 | 12 |

Table 4.4: Number of Items by Claim in ICAs

| Subject | Grade | Claim 1 | Claim 2 | Claim 3 | Claim 4 | Total # Items |
|---|---|---|---|---|---|---|
| ELA/Literacy | 3 | 20 | 11 | 9 | 8 | 48 |
| ELA/Literacy | 4 | 20 | 12 | 9 | 8 | 49 |
| ELA/Literacy | 5 | 19 | 12 | 9 | 8 | 48 |
| ELA/Literacy | 6 | 21 | 12 | 9 | 7 | 49 |
| ELA/Literacy | 7 | 20 | 12 | 9 | 8 | 49 |
| ELA/Literacy | 8 | 21 | 12 | 9 | 8 | 50 |
| ELA/Literacy | 11 | 19 | 11 | 9 | 7 | 46 |
| Mathematics | 3 | 20 | 4 | 8 | 5 | 37 |
| Mathematics | 4 | 20 | 4 | 6 | 6 | 36 |
| Mathematics | 5 | 20 | 4 | 8 | 5 | 37 |
| Mathematics | 6 | 19 | 6 | 8 | 3 | 36 |
| Mathematics | 7 | 20 | 4 | 8 | 5 | 37 |
| Mathematics | 8 | 20 | 5 | 8 | 4 | 37 |
| Mathematics | 11 | 21 | 3 | 8 | 6 | 38 |
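As a simple consistency check on the ICA counts by claim, the claim counts in each row can be summed and compared with the reported total. The sketch below transcribes the ICA rows from the table above; the variable and function names are illustrative assumptions.

```python
# (subject, grade): (claim 1, claim 2, claim 3, claim 4, reported total)
ICA_ITEMS_BY_CLAIM = {
    ("ELA/Literacy", 3): (20, 11, 9, 8, 48),
    ("ELA/Literacy", 4): (20, 12, 9, 8, 49),
    ("ELA/Literacy", 5): (19, 12, 9, 8, 48),
    ("ELA/Literacy", 6): (21, 12, 9, 7, 49),
    ("ELA/Literacy", 7): (20, 12, 9, 8, 49),
    ("ELA/Literacy", 8): (21, 12, 9, 8, 50),
    ("ELA/Literacy", 11): (19, 11, 9, 7, 46),
    ("Mathematics", 3): (20, 4, 8, 5, 37),
    ("Mathematics", 4): (20, 4, 6, 6, 36),
    ("Mathematics", 5): (20, 4, 8, 5, 37),
    ("Mathematics", 6): (19, 6, 8, 3, 36),
    ("Mathematics", 7): (20, 4, 8, 5, 37),
    ("Mathematics", 8): (20, 5, 8, 4, 37),
    ("Mathematics", 11): (21, 3, 8, 6, 38),
}


def inconsistent_rows(table):
    """Return the keys of rows whose claim counts do not sum to the total."""
    return [
        key
        for key, (*claims, total) in table.items()
        if sum(claims) != total
    ]


inconsistent = inconsistent_rows(ICA_ITEMS_BY_CLAIM)
print(inconsistent)  # [] (every row total equals the sum of its claims)
```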

4.6.2 Composition by Item Type

The Consortium develops many different types of items beyond the traditional multiple-choice item. This is done to measure claims and assessment targets with varying degrees of complexity by allowing students to respond in a variety of ways rather than simply recognizing a correct response. The item types used in Smarter Balanced interim assessments are listed in Table 4.5, along with indications of the subject areas in which each item type is used.

Table 4.5: Item Types Used in Interim Assessments

| Item Types | ELA/Literacy | Mathematics |
|---|---|---|
| Multiple Choice (MC) | X | X |
| Multiple Select (MS) | X | X |
| Evidence-Based Selected Response (EBSR) | X | |
| Match Interaction (MI) | X | X |
| Hot Text (HTQ) | X | |
| Short Answer Text Response (SA) | X | X |
| Essay/Writing Extended Response (WER) | X | |
| Equation Response (EQ) | | X |
| Grid Item Response (GI) | | X |
| Table Interaction (TI) | | X |

The frequency of item types by IAB is shown in Table 4.6 (ELA/literacy) and Table 4.7 (mathematics). The frequency of item types by claim for ICAs is shown in Table 4.8 (ELA/literacy) and Table 4.9 (mathematics). Note that each Essay/Writing Extended Response (WER) is associated with two test items to measure student achievement. The essay response is scored on three rubrics, two of which are combined (averaged), resulting in two items for each essay. The two rubrics that are combined are four-point rubrics—one for evidence/elaboration and the other for organization/purpose. The other rubric is a two-point rubric for conventions.
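The mapping from three rubric scores to two item scores for an essay can be sketched as follows. This is a minimal illustration under stated assumptions: the function name is hypothetical, and because the text does not say whether the averaged score is rounded, the sketch returns the unrounded mean.

```python
def essay_item_scores(evidence_elaboration, organization_purpose, conventions):
    """Turn three rubric scores for one essay into two item scores.

    The two four-point rubrics are averaged into one item score; the
    two-point conventions rubric is the second item score. Any rounding
    of the average is not specified here, so none is applied.
    """
    combined = (evidence_elaboration + organization_purpose) / 2
    return combined, conventions


# Hypothetical rubric scores for a single essay response.
print(essay_item_scores(3, 2, 1))  # (2.5, 1)
```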

Table 4.6: Number of Items by Item Type in ELA/Literacy IABs

| Grade | Interim Assessment Block Name | MC | MS | EBSR | MI | HTQ | SA | WER | EQ | GI | TI | Total # Items |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 3 | Brief Writes | 0 | 0 | 0 | 0 | 0 | 6 | 0 | 0 | 0 | 0 | 6 |
| 3 | Editing | 6 | 2 | 0 | 0 | 7 | 0 | 0 | 0 | 0 | 0 | 15 |
| 3 | Language and Vocabulary Use | 7 | 6 | 0 | 0 | 2 | 0 | 0 | 0 | 0 | 0 | 15 |
| 3 | Listen/Interpret | 7 | 1 | 3 | 4 | 0 | 0 | 0 | 0 | 0 | 0 | 15 |
| 3 | Performance Task | 0 | 0 | 0 | 1 | 0 | 2 | 2 | 0 | 0 | 0 | 5 |
| 3 | Read Informational Texts | 8 | 1 | 2 | 0 | 4 | 1 | 0 | 0 | 0 | 0 | 16 |
| 3 | Read Literary Texts | 6 | 0 | 3 | 0 | 5 | 1 | 0 | 0 | 0 | 0 | 15 |
| 3 | Research | 13 | 2 | 0 | 0 | 3 | 0 | 0 | 0 | 0 | 0 | 18 |
| 3 | Revision | 6 | 5 | 0 | 0 | 4 | 0 | 0 | 0 | 0 | 0 | 15 |
| 4 | Brief Writes | 0 | 0 | 0 | 0 | 0 | 6 | 0 | 0 | 0 | 0 | 6 |
| 4 | Editing | 9 | 3 | 0 | 0 | 3 | 0 | 0 | 0 | 0 | 0 | 15 |
| 4 | Language and Vocabulary Use | 7 | 4 | 0 | 0 | 4 | 0 | 0 | 0 | 0 | 0 | 15 |
| 4 | Listen/Interpret | 7 | 3 | 2 | 2 | 0 | 0 | 0 | 0 | 0 | 0 | 14 |
| 4 | Performance Task | 0 | 0 | 0 | 1 | 0 | 2 | 2 | 0 | 0 | 0 | 5 |
| 4 | Read Informational Texts | 5 | 4 | 1 | 0 | 3 | 1 | 0 | 0 | 0 | 0 | 14 |
| 4 | Read Literary Texts | 7 | 3 | 2 | 0 | 2 | 1 | 0 | 0 | 0 | 0 | 15 |
| 4 | Research | 11 | 5 | 0 | 1 | 1 | 0 | 0 | 0 | 0 | 0 | 18 |
| 4 | Revision | 10 | 3 | 0 | 0 | 2 | 0 | 0 | 0 | 0 | 0 | 15 |
| 5 | Brief Writes | 0 | 0 | 0 | 0 | 0 | 6 | 0 | 0 | 0 | 0 | 6 |
| 5 | Editing | 8 | 2 | 0 | 0 | 4 | 0 | 0 | 0 | 0 | 0 | 14 |
| 5 | Language and Vocabulary Use | 5 | 5 | 0 | 0 | 5 | 0 | 0 | 0 | 0 | 0 | 15 |
| 5 | Listen/Interpret | 6 | 2 | 4 | 2 | 0 | 0 | 0 | 0 | 0 | 0 | 14 |
| 5 | Performance Task | 0 | 1 | 0 | 0 | 0 | 2 | 2 | 0 | 0 | 0 | 5 |
| 5 | Read Informational Texts | 6 | 3 | 1 | 0 | 3 | 2 | 0 | 0 | 0 | 0 | 15 |
| 5 | Read Literary Texts | 4 | 3 | 3 | 0 | 3 | 1 | 0 | 0 | 0 | 0 | 14 |
| 5 | Research | 8 | 8 | 0 | 0 | 2 | 0 | 0 | 0 | 0 | 0 | 18 |
| 5 | Revision | 8 | 4 | 0 | 0 | 3 | 0 | 0 | 0 | 0 | 0 | 15 |
| 6 | Brief Writes | 0 | 0 | 0 | 0 | 0 | 6 | 0 | 0 | 0 | 0 | 6 |
| 6 | Editing | 7 | 1 | 0 | 0 | 6 | 0 | 0 | 0 | 0 | 0 | 14 |
| 6 | Language and Vocabulary Use | 8 | 7 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 15 |
| 6 | Listen/Interpret | 5 | 4 | 5 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 15 |
| 6 | Performance Task | 0 | 0 | 0 | 0 | 0 | 2 | 2 | 0 | 0 | 0 | 4 |
| 6 | Read Informational Texts | 3 | 5 | 4 | 0 | 3 | 1 | 0 | 0 | 0 | 0 | 16 |
| 6 | Read Literary Texts | 6 | 2 | 1 | 0 | 2 | 1 | 0 | 0 | 0 | 0 | 12 |
| 6 | Research | 6 | 5 | 0 | 0 | 7 | 0 | 0 | 0 | 0 | 0 | 18 |
| 6 | Revision | 11 | 3 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 15 |
| 7 | Brief Writes | 0 | 0 | 0 | 0 | 0 | 6 | 0 | 0 | 0 | 0 | 6 |
| 7 | Editing | 8 | 4 | 0 | 0 | 2 | 0 | 0 | 0 | 0 | 0 | 14 |
| 7 | Language and Vocabulary Use | 5 | 6 | 0 | 0 | 4 | 0 | 0 | 0 | 0 | 0 | 15 |
| 7 | Listen/Interpret | 9 | 3 | 3 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 15 |
| 7 | Performance Task | 0 | 0 | 0 | 1 | 0 | 2 | 2 | 0 | 0 | 0 | 5 |
| 7 | Read Informational Texts | 2 | 6 | 3 | 0 | 4 | 1 | 0 | 0 | 0 | 0 | 16 |
| 7 | Read Literary Texts | 7 | 2 | 4 | 0 | 2 | 0 | 0 | 0 | 0 | 0 | 15 |
| 7 | Research | 5 | 6 | 0 | 3 | 4 | 0 | 0 | 0 | 0 | 0 | 18 |
| 7 | Revision | 9 | 3 | 0 | 0 | 3 | 0 | 0 | 0 | 0 | 0 | 15 |
| 8 | Brief Writes | 0 | 0 | 0 | 0 | 0 | 6 | 0 | 0 | 0 | 0 | 6 |
| 8 | Edit/Revise | 7 | 4 | 0 | 0 | 3 | 0 | 0 | 0 | 0 | 0 | 14 |
| 8 | Listen/Interpret | 11 | 1 | 2 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 15 |
| 8 | Performance Task | 0 | 0 | 0 | 1 | 0 | 2 | 2 | 0 | 0 | 0 | 5 |
| 8 | Read Informational Texts | 3 | 5 | 1 | 0 | 5 | 2 | 0 | 0 | 0 | 0 | 16 |
| 8 | Read Literary Texts | 8 | 4 | 1 | 0 | 2 | 1 | 0 | 0 | 0 | 0 | 16 |
| 8 | Research | 7 | 2 | 0 | 1 | 8 | 0 | 0 | 0 | 0 | 0 | 18 |
| 11 | Brief Writes | 0 | 0 | 0 | 0 | 0 | 6 | 0 | 0 | 0 | 0 | 6 |
| 11 | Editing | 6 | 2 | 0 | 0 | 7 | 0 | 0 | 0 | 0 | 0 | 15 |
| 11 | Language and Vocabulary | 5 | 4 | 0 | 0 | 6 | 0 | 0 | 0 | 0 | 0 | 15 |
| 11 | Listen/Interpret | 10 | 4 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 15 |
| 11 | Performance Task | 0 | 0 | 0 | 0 | 0 | 2 | 2 | 0 | 0 | 0 | 4 |
| 11 | Read Informational Texts | 6 | 1 | 2 | 0 | 4 | 2 | 0 | 0 | 0 | 0 | 15 |
| 11 | Read Literary Texts | 9 | 2 | 1 | 0 | 3 | 1 | 0 | 0 | 0 | 0 | 16 |
| 11 | Research | 9 | 2 | 0 | 0 | 7 | 0 | 0 | 0 | 0 | 0 | 18 |
| 11 | Revision | 9 | 4 | 0 | 0 | 2 | 0 | 0 | 0 | 0 | 0 | 15 |

Table 4.7: Number of Items by Item Type in Mathematics IABs

| Grade | Interim Assessment Block Name | MC | MS | EBSR | MI | HTQ | SA | WER | EQ | GI | TI | Total # Items |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 3 | Geometry | 0 | 0 | 0 | 10 | 0 | 0 | 0 | 2 | 0 | 0 | 12 |
| 3 | Measurement and Data | 5 | 1 | 0 | 0 | 0 | 0 | 0 | 9 | 0 | 0 | 15 |
| 3 | Number and Operations – Fractions | 3 | 1 | 0 | 1 | 0 | 0 | 0 | 9 | 0 | 0 | 14 |
| 3 | Number and Operations in Base Ten | 1 | 0 | 0 | 1 | 0 | 0 | 0 | 12 | 0 | 0 | 14 |
| 3 | Operations and Algebraic Thinking | 2 | 0 | 0 | 2 | 0 | 0 | 0 | 9 | 0 | 2 | 15 |
| 3 | Performance Task | 0 | 0 | 0 | 0 | 0 | 4 | 0 | 2 | 0 | 0 | 6 |
| 4 | Geometry | 0 | 0 | 0 | 11 | 0 | 0 | 0 | 0 | 0 | 0 | 11 |
| 4 | Measurement and Data | 0 | 0 | 0 | 5 | 0 | 0 | 0 | 8 | 0 | 2 | 15 |
| 4 | Number and Operations – Fractions | 0 | 2 | 0 | 5 | 0 | 0 | 0 | 8 | 0 | 0 | 15 |
| 4 | Number and Operations in Base Ten | 5 | 2 | 0 | 2 | 0 | 0 | 0 | 6 | 0 | 0 | 15 |
| 4 | Operations and Algebraic Thinking | 6 | 0 | 0 | 3 | 0 | 0 | 0 | 7 | 0 | 0 | 16 |
| 4 | Performance Task | 1 | 0 | 0 | 0 | 0 | 3 | 0 | 1 | 0 | 1 | 6 |
| 5 | Geometry | 8 | 1 | 0 | 4 | 0 | 0 | 0 | 0 | 0 | 0 | 13 |
| 5 | Measurement and Data | 2 | 0 | 0 | 1 | 0 | 0 | 0 | 11 | 0 | 0 | 14 |
| 5 | Number and Operations – Fractions | 9 | 2 | 0 | 0 | 0 | 0 | 0 | 4 | 0 | 0 | 15 |
| 5 | Number and Operations in Base Ten | 6 | 0 | 0 | 3 | 0 | 0 | 0 | 6 | 0 | 0 | 15 |
| 5 | Operations and Algebraic Thinking | 10 | 0 | 0 | 0 | 0 | 0 | 0 | 5 | 0 | 0 | 15 |
| 5 | Performance Task | 0 | 0 | 0 | 0 | 0 | 2 | 0 | 3 | 0 | 1 | 6 |
| 6 | Expressions and Equations | 1 | 5 | 0 | 2 | 0 | 0 | 0 | 8 | 0 | 0 | 16 |
| 6 | Geometry | 1 | 1 | 0 | 0 | 0 | 0 | 0 | 12 | 0 | 0 | 14 |
| 6 | Performance Task | 0 | 0 | 0 | 0 | 0 | 2 | 0 | 4 | 0 | 0 | 6 |
| 6 | Ratios and Proportional Relationships | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 12 | 0 | 0 | 13 |
| 6 | Statistics and Probability | 3 | 0 | 0 | 7 | 0 | 0 | 0 | 3 | 0 | 0 | 13 |
| 6 | The Number System | 1 | 1 | 0 | 3 | 0 | 0 | 0 | 10 | 0 | 0 | 15 |
| 7 | Expressions and Equations | 6 | 3 | 0 | 0 | 0 | 0 | 0 | 6 | 0 | 0 | 15 |
| 7 | Geometry | 0 | 1 | 0 | 3 | 0 | 0 | 0 | 9 | 0 | 0 | 13 |
| 7 | Performance Task | 1 | 0 | 0 | 0 | 0 | 2 | 0 | 1 | 0 | 2 | 6 |
| 7 | Ratios and Proportional Relationships | 4 | 1 | 0 | 1 | 0 | 0 | 0 | 7 | 0 | 0 | 13 |
| 7 | Statistics and Probability | 5 | 1 | 0 | 4 | 0 | 0 | 0 | 5 | 0 | 0 | 15 |
| 7 | The Number System | 4 | 1 | 0 | 1 | 0 | 0 | 0 | 7 | 0 | 1 | 14 |
| 8 | Expressions and Equations I | 4 | 2 | 0 | 0 | 0 | 0 | 0 | 8 | 0 | 0 | 14 |
| 8 | Expressions and Equations II | 4 | 0 | 0 | 1 | 0 | 0 | 0 | 6 | 0 | 2 | 13 |
| 8 | Functions | 6 | 3 | 0 | 2 | 0 | 0 | 0 | 4 | 0 | 0 | 15 |
| 8 | Geometry | 4 | 1 | 0 | 4 | 0 | 0 | 0 | 5 | 0 | 0 | 14 |
| 8 | Performance Task | 0 | 0 | 0 | 0 | 0 | 2 | 0 | 3 | 0 | 1 | 6 |
| 8 | The Number System | 6 | 1 | 0 | 3 | 0 | 0 | 0 | 3 | 0 | 0 | 13 |
| 11 | Algebra and Functions I - Linear Functions, Equations, and Inequalities | 7 | 0 | 0 | 3 | 0 | 0 | 0 | 5 | 0 | 0 | 15 |
| 11 | Algebra and Functions II - Quadratic Functions, Equations, and Inequalities | 6 | 1 | 0 | 6 | 0 | 0 | 0 | 2 | 0 | 0 | 15 |
| 11 | Geometry | 2 | 2 | 0 | 4 | 0 | 0 | 0 | 7 | 0 | 0 | 15 |
| 11 | Geometry Congruence | 9 | 0 | 0 | 2 | 0 | 0 | 0 | 1 | 0 | 0 | 12 |
| 11 | Geometry Measurement and Modeling | 4 | 0 | 0 | 1 | 0 | 0 | 0 | 5 | 0 | 0 | 10 |
| 11 | Interpreting Functions | 5 | 1 | 0 | 6 | 0 | 0 | 0 | 2 | 0 | 0 | 14 |
| 11 | Number and Quantity | 10 | 1 | 0 | 4 | 0 | 0 | 0 | 0 | 0 | 0 | 15 |
| 11 | Performance Task | 1 | 0 | 0 | 0 | 0 | 4 | 0 | 1 | 0 | 0 | 6 |
| 11 | Seeing Structure in Expressions and Polynomial Expressions | 8 | 0 | 0 | 1 | 0 | 0 | 0 | 6 | 0 | 0 | 15 |
| 11 | Statistics and Probability | 7 | 0 | 0 | 4 | 0 | 0 | 0 | 1 | 0 | 0 | 12 |

Table 4.8: Number of Items by Item Type and Claim in ELA/Literacy ICAs

| Grade | Claim | MC | MS | EBSR | MI | HTQ | SA | WER | EQ | GI | TI | Total # Items |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 3 | 1 | 12 | 0 | 3 | 0 | 3 | 2 | 0 | 0 | 0 | 0 | 20 |
| 3 | 2 | 7 | 0 | 0 | 0 | 1 | 1 | 2 | 0 | 0 | 0 | 11 |
| 3 | 3 | 3 | 1 | 2 | 3 | 0 | 0 | 0 | 0 | 0 | 0 | 9 |
| 3 | 4 | 4 | 1 | 0 | 1 | 0 | 2 | 0 | 0 | 0 | 0 | 8 |
| 4 | 1 | 11 | 2 | 2 | 0 | 3 | 2 | 0 | 0 | 0 | 0 | 20 |
| 4 | 2 | 7 | 0 | 0 | 0 | 2 | 1 | 2 | 0 | 0 | 0 | 12 |
| 4 | 3 | 5 | 2 | 1 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 9 |
| 4 | 4 | 3 | 1 | 0 | 2 | 0 | 2 | 0 | 0 | 0 | 0 | 8 |
| 5 | 1 | 6 | 3 | 4 | 0 | 4 | 2 | 0 | 0 | 0 | 0 | 19 |
| 5 | 2 | 7 | 1 | 0 | 0 | 1 | 1 | 2 | 0 | 0 | 0 | 12 |
| 5 | 3 | 4 | 0 | 3 | 2 | 0 | 0 | 0 | 0 | 0 | 0 | 9 |
| 5 | 4 | 4 | 2 | 0 | 0 | 0 | 2 | 0 | 0 | 0 | 0 | 8 |
| 6 | 1 | 10 | 3 | 2 | 0 | 4 | 2 | 0 | 0 | 0 | 0 | 21 |
| 6 | 2 | 6 | 2 | 0 | 0 | 1 | 1 | 2 | 0 | 0 | 0 | 12 |
| 6 | 3 | 5 | 1 | 2 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 9 |
| 6 | 4 | 2 | 0 | 0 | 0 | 3 | 2 | 0 | 0 | 0 | 0 | 7 |
| 7 | 1 | 7 | 3 | 4 | 0 | 4 | 2 | 0 | 0 | 0 | 0 | 20 |
| 7 | 2 | 5 | 2 | 0 | 0 | 2 | 1 | 2 | 0 | 0 | 0 | 12 |
| 7 | 3 | 6 | 3 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 9 |
| 7 | 4 | 4 | 0 | 0 | 1 | 1 | 2 | 0 | 0 | 0 | 0 | 8 |
| 8 | 1 | 11 | 2 | 2 | 0 | 4 | 2 | 0 | 0 | 0 | 0 | 21 |
| 8 | 2 | 5 | 2 | 0 | 0 | 2 | 1 | 2 | 0 | 0 | 0 | 12 |
| 8 | 3 | 9 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 9 |
| 8 | 4 | 3 | 0 | 0 | 1 | 2 | 2 | 0 | 0 | 0 | 0 | 8 |
| 11 | 1 | 12 | 0 | 1 | 0 | 4 | 2 | 0 | 0 | 0 | 0 | 19 |
| 11 | 2 | 5 | 0 | 0 | 0 | 3 | 1 | 2 | 0 | 0 | 0 | 11 |
| 11 | 3 | 6 | 2 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 9 |
| 11 | 4 | 5 | 0 | 0 | 0 | 0 | 2 | 0 | 0 | 0 | 0 | 7 |


Table 4.9: Number of Items by Item Type and Claim in Mathematics ICAs

| Grade | Claim | MC | MS | EBSR | MI | HTQ | SA | WER | EQ | GI | TI | Total # Items |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 3 | 1 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 17 | 0 | 2 | 20 |
| 3 | 2 | 1 | 0 | 0 | 0 | 0 | 1 | 0 | 2 | 0 | 0 | 4 |
| 3 | 3 | 3 | 1 | 0 | 2 | 0 | 2 | 0 | 0 | 0 | 0 | 8 |
| 3 | 4 | 2 | 0 | 0 | 0 | 0 | 1 | 0 | 2 | 0 | 0 | 5 |
| 4 | 1 | 2 | 0 | 0 | 5 | 0 | 0 | 0 | 13 | 0 | 0 | 20 |
| 4 | 2 | 1 | 0 | 0 | 1 | 0 | 0 | 0 | 1 | 0 | 1 | 4 |
| 4 | 3 | 3 | 1 | 0 | 0 | 0 | 1 | 0 | 1 | 0 | 0 | 6 |
| 4 | 4 | 1 | 0 | 0 | 0 | 0 | 2 | 0 | 3 | 0 | 0 | 6 |
| 5 | 1 | 11 | 0 | 0 | 5 | 0 | 0 | 0 | 4 | 0 | 0 | 20 |
| 5 | 2 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 3 | 0 | 0 | 4 |
| 5 | 3 | 3 | 2 | 0 | 0 | 0 | 2 | 0 | 1 | 0 | 0 | 8 |
| 5 | 4 | 3 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 1 | 5 |
| 6 | 1 | 1 | 2 | 0 | 4 | 0 | 0 | 0 | 12 | 0 | 0 | 19 |
| 6 | 2 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 5 | 0 | 0 | 6 |
| 6 | 3 | 3 | 1 | 0 | 1 | 0 | 1 | 0 | 2 | 0 | 0 | 8 |
| 6 | 4 | 0 | 1 | 0 | 0 | 0 | 1 | 0 | 1 | 0 | 0 | 3 |
| 7 | 1 | 5 | 4 | 0 | 2 | 0 | 0 | 0 | 9 | 0 | 0 | 20 |
| 7 | 2 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 3 | 0 | 1 | 4 |
| 7 | 3 | 5 | 0 | 0 | 0 | 0 | 2 | 0 | 1 | 0 | 0 | 8 |
| 7 | 4 | 3 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 2 | 5 |
| 8 | 1 | 7 | 2 | 0 | 2 | 0 | 0 | 0 | 9 | 0 | 0 | 20 |
| 8 | 2 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 3 | 0 | 1 | 5 |
| 8 | 3 | 6 | 0 | 0 | 0 | 0 | 2 | 0 | 0 | 0 | 0 | 8 |
| 8 | 4 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 3 | 0 | 0 | 4 |
| 11 | 1 | 11 | 0 | 0 | 5 | 0 | 0 | 0 | 5 | 0 | 0 | 21 |
| 11 | 2 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 2 | 0 | 0 | 3 |
| 11 | 3 | 5 | 1 | 0 | 0 | 0 | 2 | 0 | 0 | 0 | 0 | 8 |
| 11 | 4 | 0 | 1 | 0 | 1 | 0 | 3 | 0 | 1 | 0 | 0 | 6 |

4.6.3 Summary of Item Statistics

Summary information about the Item Response Theory (IRT) item parameters for items used in the IABs and ICAs is shown in Table 4.10 through Table 4.12. The tables display the mean and standard deviation of the IRT difficulty parameter (b-parameter) and discrimination parameter (a-parameter).
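To make the roles of the two parameters concrete, the sketch below evaluates a two-parameter logistic (2PL) response function for a dichotomous item. Several assumptions apply: this chapter does not state the IRT model, the 2PL form without a scaling constant is assumed for illustration only, polytomous items would need a different model, and the function name is hypothetical. The example parameters are the grade 3 Editing block means reported in the ELA/literacy IAB summary (mean b of -0.470, mean a of 0.668), treated here as if they belonged to a single item.

```python
import math


def prob_correct(theta, a, b):
    """Assumed 2PL form: probability of a correct response at ability theta.

    a is the discrimination parameter and b is the difficulty parameter.
    No scaling constant (such as 1.7) is applied; that is an assumption.
    """
    return 1.0 / (1.0 + math.exp(-a * (theta - b)))


# Hypothetical item using the grade 3 Editing block mean parameters.
a_example, b_example = 0.668, -0.470

# When ability equals the difficulty parameter, the probability is 0.5.
print(prob_correct(b_example, a_example, b_example))  # 0.5

# Higher ability yields a higher probability of a correct response.
print(prob_correct(1.0, a_example, b_example) > prob_correct(0.0, a_example, b_example))  # True
```

Under this form, a larger b shifts the curve toward higher ability (a harder item), and a larger a makes the curve steeper near b (a more discriminating item).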

| Grade | Interim Assessment Block Name | N items | Mean b parameter | SD b parameter | Mean a parameter | SD a parameter |
|---|---|---|---|---|---|---|
| 3 | Brief Writes | 6 | 0.256 | 0.265 | 0.715 | 0.076 |
| | Editing | 15 | -0.470 | 1.213 | 0.668 | 0.250 |
| | Language and Vocabulary Use | 15 | -0.748 | 0.976 | 0.671 | 0.154 |
| | Listen/Interpret | 15 | -0.710 | 0.658 | 0.633 | 0.146 |
| | Performance Task | 5 | 0.543 | 0.903 | 0.830 | 0.158 |
| | Read Informational Texts | 16 | -0.180 | 1.086 | 0.642 | 0.234 |
| | Read Literary Texts | 15 | -0.678 | 0.710 | 0.896 | 0.156 |
| | Research | 18 | -0.756 | 0.944 | 0.643 | 0.219 |
| | Revision | 15 | -0.639 | 0.857 | 0.742 | 0.180 |
| 4 | Brief Writes | 6 | 0.642 | 0.439 | 0.657 | 0.121 |
| | Editing | 15 | -0.073 | 1.378 | 0.549 | 0.191 |
| | Language and Vocabulary Use | 15 | -0.409 | 0.980 | 0.645 | 0.197 |
| | Listen/Interpret | 14 | -0.511 | 0.933 | 0.526 | 0.160 |
| | Performance Task | 5 | 0.545 | 0.750 | 0.830 | 0.167 |
| | Read Informational Texts | 14 | 0.074 | 0.873 | 0.662 | 0.226 |
| | Read Literary Texts | 15 | -0.123 | 1.040 | 0.708 | 0.274 |
| | Research | 18 | -0.158 | 0.908 | 0.587 | 0.207 |
| | Revision | 15 | -0.422 | 1.066 | 0.523 | 0.160 |
| 5 | Brief Writes | 6 | 0.898 | 0.667 | 0.605 | 0.058 |
| | Editing | 14 | 0.124 | 0.928 | 0.602 | 0.239 |
| | Language and Vocabulary Use | 15 | 0.440 | 1.140 | 0.627 | 0.240 |
| | Listen/Interpret | 14 | -0.039 | 1.017 | 0.568 | 0.142 |
| | Performance Task | 5 | 0.558 | 0.399 | 0.782 | 0.238 |
| | Read Informational Texts | 15 | 0.865 | 1.002 | 0.547 | 0.160 |
| | Read Literary Texts | 14 | 0.806 | 0.749 | 0.616 | 0.255 |
| | Research | 18 | 0.172 | 0.977 | 0.648 | 0.207 |
| | Revision | 15 | -0.151 | 0.915 | 0.600 | 0.168 |
| 6 | Brief Writes | 6 | 1.301 | 0.535 | 0.680 | 0.121 |
| | Editing | 14 | 0.639 | 1.694 | 0.559 | 0.197 |
| | Language and Vocabulary Use | 15 | 0.206 | 1.349 | 0.570 | 0.184 |
| | Listen/Interpret | 15 | 0.593 | 0.794 | 0.618 | 0.157 |
| | Performance Task | 4 | 0.819 | 0.863 | 0.917 | 0.203 |
| | Read Informational Texts | 16 | 1.010 | 0.958 | 0.632 | 0.197 |
| | Read Literary Texts | 12 | 0.257 | 0.988 | 0.606 | 0.162 |
| | Research | 18 | 0.712 | 0.726 | 0.660 | 0.237 |
| | Revision | 15 | 0.408 | 1.442 | 0.456 | 0.145 |
| 7 | Brief Writes | 6 | 1.415 | 0.514 | 0.620 | 0.133 |
| | Editing | 14 | 2.409 | 2.409 | 0.340 | 0.162 |
| | Language and Vocabulary Use | 15 | 1.045 | 1.275 | 0.570 | 0.206 |
| | Listen/Interpret | 15 | 0.594 | 1.032 | 0.486 | 0.149 |
| | Performance Task | 5 | 1.636 | 1.229 | 0.900 | 0.162 |
| | Read Informational Texts | 16 | 0.989 | 1.178 | 0.630 | 0.217 |
| | Read Literary Texts | 15 | 0.561 | 1.218 | 0.724 | 0.241 |
| | Research | 18 | 1.523 | 1.104 | 0.517 | 0.118 |
| | Revision | 15 | 0.651 | 0.641 | 0.488 | 0.173 |
| 8 | Brief Writes | 6 | 1.540 | 0.469 | 0.673 | 0.150 |
| | Edit/Revise | 14 | 0.401 | 1.043 | 0.570 | 0.218 |
| | Listen/Interpret | 15 | 0.216 | 1.184 | 0.494 | 0.153 |
| | Performance Task | 5 | 1.619 | 1.358 | 0.747 | 0.123 |
| | Read Informational Texts | 16 | 1.501 | 1.087 | 0.621 | 0.195 |
| | Read Literary Texts | 16 | 0.700 | 1.174 | 0.703 | 0.259 |
| | Research | 18 | 1.796 | 1.347 | 0.560 | 0.238 |
| 11 | Brief Writes | 6 | 1.743 | 0.744 | 0.521 | 0.065 |
| | Editing | 15 | 1.376 | 1.231 | 0.431 | 0.125 |
| | Language and Vocabulary | 15 | 0.917 | 1.389 | 0.498 | 0.180 |
| | Listen/Interpret | 15 | 0.426 | 1.178 | 0.480 | 0.081 |
| | Performance Task | 4 | 1.509 | 0.506 | 0.602 | 0.109 |
| | Read Informational Texts | 15 | 1.766 | 1.156 | 0.498 | 0.197 |
| | Read Literary Texts | 16 | 1.332 | 1.262 | 0.472 | 0.130 |
| | Research | 18 | 1.572 | 1.087 | 0.515 | 0.155 |
| | Revision | 15 | 1.051 | 1.101 | 0.491 | 0.172 |

| Grade | Interim Assessment Block Name | N items | Mean b parameter | SD b parameter | Mean a parameter | SD a parameter |
|---|---|---|---|---|---|---|
| 3 | Geometry | 12 | -1.166 | 1.440 | 0.505 | 0.175 |
| | Measurement and Data | 15 | -1.201 | 0.851 | 0.878 | 0.267 |
| | Number and Operations – Fractions | 14 | -1.131 | 0.994 | 0.688 | 0.267 |
| | Number and Operations in Base Ten | 14 | -0.817 | 0.913 | 1.052 | 0.165 |
| | Operations and Algebraic Thinking | 15 | -1.491 | 1.098 | 0.896 | 0.240 |
| | Performance Task | 6 | -0.634 | 0.375 | 1.163 | 0.264 |
| 4 | Geometry | 11 | 1.090 | 1.417 | 0.578 | 0.297 |
| | Measurement and Data | 15 | 0.340 | 0.885 | 0.760 | 0.285 |
| | Number and Operations – Fractions | 15 | -0.612 | 1.044 | 0.924 | 0.328 |
| | Number and Operations in Base Ten | 15 | -0.723 | 0.943 | 0.705 | 0.187 |
| | Operations and Algebraic Thinking | 16 | -0.489 | 0.816 | 0.824 | 0.330 |
| | Performance Task | 6 | 0.147 | 0.528 | 0.731 | 0.175 |
| 5 | Geometry | 13 | 0.029 | 1.473 | 0.438 | 0.140 |
| | Measurement and Data | 14 | 0.188 | 0.791 | 0.761 | 0.174 |
| | Number and Operations – Fractions | 15 | -0.019 | 0.994 | 0.746 | 0.327 |
| | Number and Operations in Base Ten | 15 | -0.243 | 0.864 | 0.659 | 0.307 |
| | Operations and Algebraic Thinking | 15 | 0.093 | 1.005 | 0.580 | 0.212 |
| | Performance Task | 6 | 0.710 | 0.698 | 0.841 | 0.292 |
| 6 | Expressions and Equations | 16 | 0.286 | 1.060 | 0.731 | 0.199 |
| | Geometry | 14 | 1.176 | 0.684 | 0.889 | 0.189 |
| | Performance Task | 6 | 1.259 | 0.720 | 0.833 | 0.074 |
| | Ratios and Proportional Relationships | 13 | 0.075 | 0.877 | 0.851 | 0.192 |
| | Statistics and Probability | 13 | 1.368 | 1.579 | 0.434 | 0.186 |
| | The Number System | 15 | 0.352 | 0.589 | 0.678 | 0.222 |
| 7 | Expressions and Equations | 15 | 1.031 | 1.290 | 0.588 | 0.279 |
| | Geometry | 13 | 1.869 | 0.968 | 0.797 | 0.350 |
| | Performance Task | 6 | 1.036 | 0.969 | 0.964 | 0.227 |
| | Ratios and Proportional Relationships | 13 | 1.214 | 0.750 | 0.611 | 0.291 |
| | Statistics and Probability | 15 | 0.955 | 1.436 | 0.558 | 0.296 |
| | The Number System | 14 | 1.000 | 0.978 | 0.603 | 0.233 |
| 8 | Expressions & Equations I | 14 | 1.260 | 1.561 | 0.508 | 0.212 |
| | Expressions & Equations II | 13 | 1.526 | 1.178 | 0.567 | 0.242 |
| | Functions | 15 | 0.867 | 1.087 | 0.593 | 0.214 |
| | Geometry | 14 | 1.564 | 1.065 | 0.514 | 0.181 |
| | Performance Task | 6 | 0.808 | 1.233 | 0.713 | 0.144 |
| | The Number System | 13 | 1.375 | 1.162 | 0.534 | 0.151 |
| 11 | Algebra and Functions I - Linear Functions, Equations, and Inequalities | 15 | 1.272 | 1.194 | 0.484 | 0.211 |
| | Algebra and Functions II - Quadratic Functions, Equations, and Inequalities | 15 | 2.548 | 1.461 | 0.442 | 0.249 |
| | Geometry | 15 | 2.011 | 1.053 | 0.623 | 0.214 |
| | Geometry Congruence | 12 | 2.218 | 1.558 | 0.354 | 0.194 |
| | Geometry Measurement and Modeling | 10 | 3.031 | 1.185 | 0.414 | 0.200 |
| | Interpreting Functions | 14 | 1.648 | 1.533 | 0.560 | 0.300 |
| | Number and Quantity | 15 | 1.050 | 1.600 | 0.428 | 0.196 |
| | Performance Task | 6 | 3.655 | 2.093 | 0.546 | 0.294 |
| | Seeing Structure in Expressions and Polynomial Expressions | 15 | 1.128 | 1.160 | 0.500 | 0.157 |
| | Statistics and Probability | 12 | 2.359 | 3.434 | 0.348 | 0.159 |

| Subject | Grade | N items | Mean b parameter | SD b parameter | Mean a parameter | SD a parameter |
|---|---|---|---|---|---|---|
| ELA | 3 | 48 | -0.682 | 0.979 | 0.707 | 0.235 |
| | 4 | 49 | -0.338 | 0.901 | 0.643 | 0.208 |
| | 5 | 48 | 0.005 | 0.942 | 0.652 | 0.218 |
| | 6 | 49 | 0.366 | 1.057 | 0.602 | 0.227 |
| | 7 | 49 | 0.667 | 0.938 | 0.572 | 0.204 |
| | 8 | 50 | 0.508 | 1.086 | 0.632 | 0.244 |
| | 11 | 46 | 0.656 | 1.047 | 0.473 | 0.179 |
| MATH | 3 | 37 | -1.086 | 0.808 | 0.901 | 0.292 |
| | 4 | 36 | -0.459 | 0.770 | 0.835 | 0.251 |
| | 5 | 37 | 0.005 | 0.884 | 0.667 | 0.279 |
| | 6 | 36 | 0.510 | 0.909 | 0.780 | 0.214 |
| | 7 | 37 | 0.603 | 0.865 | 0.693 | 0.316 |
| | 8 | 37 | 0.860 | 0.864 | 0.579 | 0.215 |
| | 11 | 38 | 1.494 | 1.690 | 0.469 | 0.197 |
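
Summary rows of the kind reported above (number of items, mean, and standard deviation of the b- and a-parameters per grade and block) could be computed from item-level parameters along the following lines. This is a minimal sketch, not the procedure used for this report: the record layout, the block name, the parameter values, and the `summarize` function are all hypothetical, and the use of the sample standard deviation (n - 1 denominator) is an assumption.

```python
from collections import defaultdict
from statistics import mean, stdev

# Hypothetical item-level records: (grade, block name, a-parameter, b-parameter).
# These values are illustrative only and are not taken from the item pool.
items = [
    (3, "Example Block", 0.50, -1.00),
    (3, "Example Block", 0.70, 0.00),
    (3, "Example Block", 0.90, 1.00),
    (4, "Example Block", 0.60, 0.20),
    (4, "Example Block", 0.80, 0.60),
]


def summarize(records):
    """Group items by (grade, block) and compute N, mean, and SD of a and b."""
    groups = defaultdict(list)
    for grade, block, a, b in records:
        groups[(grade, block)].append((a, b))

    summary = {}
    for key, params in groups.items():
        a_vals = [a for a, _ in params]
        b_vals = [b for _, b in params]
        # Assumption: sample SD (n - 1 denominator); undefined for a single item.
        summary[key] = {
            "n_items": len(params),
            "mean_b": mean(b_vals),
            "sd_b": stdev(b_vals) if len(b_vals) > 1 else float("nan"),
            "mean_a": mean(a_vals),
            "sd_a": stdev(a_vals) if len(a_vals) > 1 else float("nan"),
        }
    return summary


summary = summarize(items)
for (grade, block), row in sorted(summary.items()):
    print(
        f"{grade:>2} | {block} | {row['n_items']} | "
        f"{row['mean_b']:.3f} | {row['sd_b']:.3f} | "
        f"{row['mean_a']:.3f} | {row['sd_a']:.3f}"
    )
```

Grouping by `(grade, block)` mirrors the layout of the IAB tables; grouping by subject and grade instead would give rows shaped like the ICA table.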

4.7 Content Alignment

The content alignment studies described in this section were performed using samples of tests delivered in the summative assessments. They are applicable to interim assessments because the interim assessments are created from the same item pool as the summative assessments, and their blueprints use the same content categories (e.g., claims, targets, and DOK) as summative assessments.

Content alignment addresses how well individual test items, test blueprints, and the tests themselves represent the intended construct and support appropriate inferences. With a computer adaptive test, a student’s test form is a sampling of items drawn from a much larger universe of possible items and tasks. The sampling is guided by a blueprint. Alignment studies investigate how well individual tests cover the intended breadth and depth of the underlying content standards. For inferences from test results to be justifiable, the sample of items in each student’s test has to be an adequate representation of the broad domain, providing strong evidence to support claims being made from the test results.
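
One small piece of this idea, checking whether a single delivered test covers each blueprint category with an acceptable number of items, can be sketched as follows. This is only an illustration and not the method used in the alignment studies: the blueprint ranges, the test event, and the `check_coverage` function are hypothetical.

```python
from collections import Counter

# Hypothetical blueprint: (minimum, maximum) number of items per claim.
# These ranges are illustrative and are not the Smarter Balanced blueprint.
blueprint = {1: (4, 6), 2: (2, 3), 3: (1, 2)}

# Hypothetical test event: one record per item delivered to a student.
test_event = [{"claim": 1}] * 5 + [{"claim": 2}] * 2


def check_coverage(event, ranges):
    """Count items per claim and flag claims outside the blueprint range."""
    counts = Counter(item["claim"] for item in event)
    report = {}
    for claim, (low, high) in ranges.items():
        n = counts.get(claim, 0)
        report[claim] = {"count": n, "in_range": low <= n <= high}
    return report


report = check_coverage(test_event, blueprint)
for claim, result in sorted(report.items()):
    print(claim, result)
```

The same counting approach could be keyed on targets or DOK levels instead of claims. A full alignment study also involves expert judgment about item content, which a count of this kind does not capture.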

Four alignment studies have been conducted to examine the alignment between Smarter Balanced tests and the CCSS. The Human Resources Research Organization (HumRRO) conducted the first alignment study (HumRRO, 2016a). HumRRO’s comprehensive study centered on the assumptions of evidence-centered design (ECD) and examined the connections in the evidentiary chain underlying the development of the Smarter Balanced foundational documents (test blueprints, content specifications, and item/task specifications) and the resulting summative assessments. Among those connections were the alignment between the Smarter Balanced content specifications and the CCSS, the alignment between the Smarter Balanced evidence statements and content specifications, and the alignment between the Smarter Balanced blueprint and the content specifications. Results from this study were favorable in terms of the intended breadth and depth of the alignment for each connection in the evidentiary chain.

In 2016, the Fordham Institute (2016) and HumRRO (2016b) investigated the quality of the Smarter Balanced assessments relative to CCSSO (Council of Chief State School Officers) criteria for evaluating high-quality assessments. In particular, the Smarter Balanced assessments were investigated to see if they place strong emphasis on the most important content for college and career readiness, as called for by the CCSS, and if they require that students demonstrate the range of thinking skills called for by those standards.

- Fordham Institute reviewed grades 5 and 8 ELA and mathematics, and HumRRO reviewed high school ELA and mathematics.

- Fordham Institute rated Smarter Balanced grades 5 and 8 mathematics assessments as a good match to the CCSSO criteria for content, and a good match to the CCSSO criteria for depth.

- HumRRO rated the Smarter Balanced high school ELA assessments an excellent match to the CCSSO criteria for content, and a good to excellent match for depth.

- HumRRO rated the Smarter Balanced high school mathematics assessments a good to excellent match to the CCSSO criteria for content, and a good to excellent match for depth.

An additional external alignment study, completed by WestEd (2017), employed a modified Webb alignment methodology to examine the summative assessments for grades 3, 4, 6, and 7, using sample test events built from 2015–16 operational data. The study provided evidence that the items within ELA/literacy and mathematics test events for these grades were well aligned to the CCSS in terms of both content and cognitive complexity.